After the driver of a speeding bus ran over and killed two college students in Dhaka in July, student protesters took to the streets. They forced the ordinarily disorganized local traffic to drive in strict lanes and stopped vehicles to inspect license and registration papers. They even halted the vehicle of the Chief of Bangladesh Police Bureau of Investigation and found that his license was expired. And they posted videos and information about the protests on Facebook.

The fatal road accident that led to these protests was hardly an isolated incident. Dhaka, Bangladesh’s capital, which was ranked the second least livable city in the world in the Economist Intelligence Unit’s 2018 global liveability index, scored 26.8 out of 100 in the infrastructure category included in the rating. But the regional government chose to stifle the highway safety protests anyway. It went so far as to raid residential areas adjacent to universities to check social media activity, leading to the arrest of 20 students. Although there were many images of Bangladesh Chhatra League (BCL) men committing acts of violence against students, none of them were arrested. (The BCL is the student wing of the ruling Awami League, one of the major political parties of Bangladesh.)

Students were forced to log into their Facebook profiles and were arrested or beaten for their posts, photographs, and videos. In one instance, BCL men called three students into the dorm’s guestroom, quizzed them over Facebook posts, beat them, and then handed them over to police. They were reportedly tortured in custody.

A pregnant school teacher was arrested and jailed for just over two weeks for “spreading rumors” after sharing a Facebook post about student protests. A photographer and social justice activist spent more than 100 days in jail for describing police violence during these protests; he told reporters he was beaten in custody. And a university professor was jailed for 37 days for his Facebook posts.

A Dhaka resident who spoke on the condition of anonymity out of fear for their safety said that the crackdown on social media posts essentially silenced student protesters, many of whom removed photos, videos, and status updates about the protests from their profiles entirely. While the person thought that students were continuing to be arrested, they said, “nobody is talking about it anymore, at least in my network, because everyone kind of ‘got the memo’ if you know what I mean.”

This isn’t the first time Bangladeshi citizens have been arrested for Facebook posts. As just one example, in April 2017, a rubber plantation worker in southern Bangladesh was arrested and detained for three months for liking and sharing a Facebook post that criticized the prime minister’s visit to India, according to Human Rights Watch.

Bangladesh is far from alone. Government harassment to silence dissent on social media has occurred across the region and in other regions as well — and it often comes hand-in-hand with governments filing takedown requests with Facebook and requesting data on users.

Facebook has removed posts critical of the prime minister in Cambodia and reportedly “agreed to coordinate in the monitoring and removal of content” in Vietnam. Facebook was criticized for not stopping the repression of Rohingya Muslims in Myanmar, where military personnel created fake accounts to spread propaganda which human rights groups say fueled violence and forced displacement. Facebook has since undertaken a human rights impact assessment in Myanmar, and it has also taken down coordinated inauthentic accounts in the country.

Facebook CEO Mark Zuckerberg arrives to testify before a joint hearing of the US Senate Commerce, Science and Transportation Committee and Senate Judiciary Committee on Capitol Hill, April 10, 2018, in Washington, DC. (Photo: Jim Watson/AFP/Getty Images)

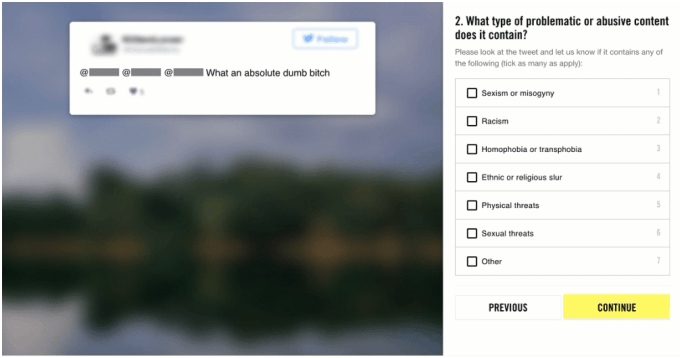

Protesters scrubbing Facebook data for fear of repercussions isn’t uncommon. Over and over again, authoritarian-leaning regimes have utilized low-tech strategies to quell dissent. And aside from providing resources related to online privacy and security, Facebook still has little in place to protect its most vulnerable users from these pernicious efforts. As various countries pass laws calling for a local presence and increased regulation, it is possible that the social media conglomerate doesn’t always even want to.

“In many situations, the platforms are under pressure,” said Raman Jit Singh Chima, policy director at Access Now. “Tech companies are being directly sent takedown orders, user data requests. The danger of that is that companies will potentially be overcomplying or responding far too quickly to government demands when they are able to push back on those requests,” he said.

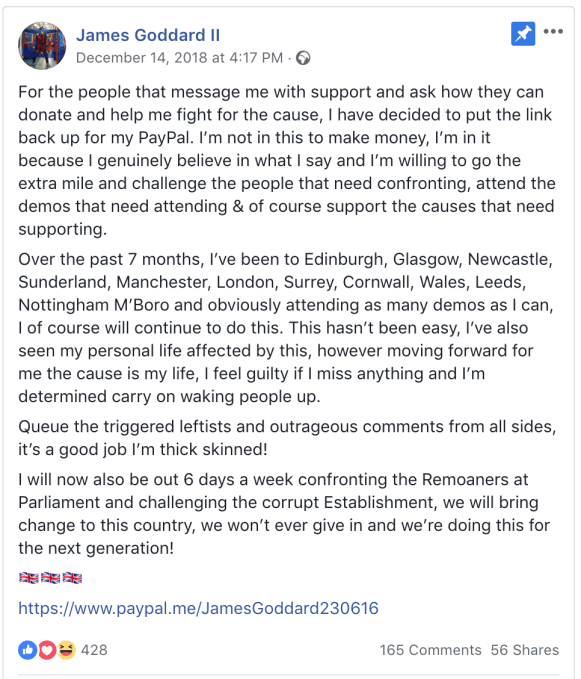

Elections are often a critical moment for oppressive behavior from governments: Uganda, Chad, and Vietnam have specifically targeted citizens and candidates during election time. Facebook announced just last Thursday that it had taken down nine Facebook pages and six Facebook accounts for engaging in coordinated inauthentic behavior in Bangladesh. These pages, which Facebook believes were linked to people associated with the Bangladesh government, were “designed to look like independent news outlets and posted pro-government and anti-opposition content.” The pages masqueraded as news outlets, including fake versions of BBC Bengali, BDSNews24, and Bangla Tribune, as well as news pages with photoshopped blue checkmarks, according to the Atlantic Council’s Digital Forensic Research Lab.

Still, the imminent election in Bangladesh doesn’t bode well for anyone who might wish to express dissent. In October, a digital security bill that regulates some types of controversial speech was passed in the country, signaling to companies that as the regulatory environment tightens, they too could become targets.

More restrictive regulation is part of a greater trend around the world, said Naman M. Aggarwal, Asia policy associate at Access Now. Some countries, like Brazil and India, have passed “fake news” laws. (A similar law was proposed in Malaysia, but it was blocked in the Senate.) These types of laws are frequently followed by content takedowns. (In Bangladesh, the government warned broadcasters not to air footage that could create panic or disorder, essentially halting news programming on the protests.)

Other governments in the Middle East and North Africa — such as Egypt, Algeria, United Arab Emirates, Saudi Arabia, and Bahrain — clamp down on free expression on social media under the threat of fines or prison time. And countries like Vietnam have passed laws requiring social media companies to localize their storage and have a presence in the country — typically an indication of greater content regulation and pressure on the companies from local governments. In India, WhatsApp and other financial tech services were told to open offices in the country.

And crackdowns on posts about protests on social media come hand in hand with government requests for data. Facebook’s biannual transparency report provides detail on the percentage of government requests the company complies with in each country, but most people don’t learn of these requests until long after the fact. Between January and June, the company received 134 emergency requests and 18 legal processes from Bangladeshi authorities for 205 users or accounts. Facebook turned over at least some data in 61 percent of emergency requests and 28 percent of legal processes.

Facebook said in a statement that it “believes people deserve to have a voice, and that everyone has the right to express themselves in a safe environment,” and that it handles requests for user data “extremely carefully.”

The company pointed to its Facebook for Journalists resources and said it is “saddened by governments using broad and vague regulation or other practices to silence, criminalize or imprison journalists, activists, and others who speak out against them,” but the company said it also helps journalists, activists, and other people around the world to “tell their stories in more innovative ways, reach global audiences, and connect directly with people.”

But there are policies that Facebook could enact that would help people in these vulnerable positions, like allowing users to post anonymously.

“Facebook’s real names policy doesn’t exactly protect anonymity, and has created issues for people in countries like Vietnam,” said Aggarwal. “If platforms provide leeway, or enough space for anonymous posting, and anonymous interactions, that is really helpful to people on ground.”

A visitor uses a mobile phone in front of the Facebook logo at the #CDUdigital conference on September 12, 2015, in Berlin, Germany. (Photo: Adam Berry/Getty Images)

A German court found the policy illegal under its decade-old privacy law in February. Facebook said it plans to appeal the decision.

“I’m not sure if Facebook even has an effective strategy or understanding of strategy in the long term,” said Sean O’Brien, lead researcher at Yale Privacy Lab. “In some cases, Facebook is taking a very proactive role… but in other cases, it won’t.” In any case, these decisions require a nuanced understanding of the population, culture, and political spectrum in various regions, something it’s not clear Facebook has.

Facebook isn’t responsible for government decisions to clamp down on free expression. But the question remains: How can companies stop assisting authoritarian governments, inadvertently or otherwise?

“If Facebook knows about this kind of repression, they should probably have… some sort of mechanism to at the very least heavily try to convince people not to post things publicly that they think they could get in trouble for,” said O’Brien. “It would have a chilling effect on speech, of course, which is a whole other issue, but at least it would allow people to make that decision for themselves.”

This could be an opt-in feature, but O’Brien acknowledges that it could create legal liabilities for Facebook and lead the social media giant to create lists of “dangerous speech” or profiles of “dissidents,” which it could theoretically shut down or report to the police. Still, Facebook could consider rolling out a “speech alert” feature to an entire city or country if that area becomes politically volatile and dangerous for speech, he said.

O’Brien says that social media companies could consider responding to situations where a person is being detained illegally and potentially coerced into giving up their passwords in a way that could protect them, perhaps by triggering a temporary account reset or freeze to prevent anyone from accessing the account without proper legal process. Some actions that might trigger the reset or freeze could be news about an individual’s arrest (if Facebook is alerted to it), contact from the authorities, or contact from friends and loved ones, as evaluated by humans. There could even be a “panic button” type trigger, like Guardian Project’s PanicKit but for Facebook, allowing users to wipe or freeze their own accounts or posts tagged preemptively with a codeword only the owner knows.

“One of the issues with computer interfaces is that when people log into a site, they get a false sense of privacy even when the things they’re posting in that site are widely available to the public,” said O’Brien. Case in point: this year, women anonymously shared their experiences of abusive coworkers in a shared Google Doc, the so-called “Shitty Media Men” list, likely without realizing that a lawsuit could unmask them. That’s exactly what is happening.

Instead, activists and journalists often need to tap into resources and gain assistance from groups like Access Now, which runs a digital security helpline, and the Committee to Protect Journalists. These organizations can provide personal advice tailored to a person’s specific country and situation. Activists and journalists can access Facebook over the Tor anonymity network. They can also use VPNs, end-to-end encrypted messaging tools, and non-phone-based two-factor authentication methods. But many may not realize what the threat is until it’s too late.

The violent crackdown on free speech in Bangladesh accompanied government-imposed Internet restrictions, including the throttling of Internet access around the country. Users at home with a broadband connection did not feel the effects of this, but “it was the students on the streets who couldn’t go live or publish any photos of what was going on,” the Dhaka resident said.

Elections will take place in Bangladesh on December 30.

In the few months leading up to the election, Access Now says it has noticed an increase in Bangladeshi residents expressing concern that their data has been compromised and seeking assistance from its digital security helpline.

Other rights groups have also found an uptick in malicious activity.

Meenakshi Ganguly, South Asia director at Human Rights Watch, said in an email that the organization is “extremely concerned about the ongoing crackdown on the political opposition and on freedom of expression, which has created a climate of fear ahead of national elections.”

Ganguly cited politically motivated cases against thousands of opposition supporters, many of whom have been arrested, as well as candidates who have been attacked.

Human Rights Watch issued a statement about the situation, warning that the Rapid Action Battalion, a “paramilitary force implicated in serious human rights violations including extrajudicial killings and enforced disappearances,” has been “tasked with monitoring social media for ‘anti-state propaganda, rumors, fake news, and provocations.’” This is in addition to a nine-member monitoring cell and around 100 police teams dedicated to quashing so-called “rumors” on social media, amid the looming threat of news website shutdowns.

“The security forces continue to arrest people for any criticism of the government, including on social media,” Ganguly said. “We hope that the international community will urge the Awami League government to create conditions that will uphold the rights of all Bangladeshis to participate in a free and fair vote.”
