Suse, the newly independent open-source company behind the eponymous Linux distribution and an increasingly large set of managed enterprise services, today announced a shift in strategy as it looks to stay on top of the changing trends in the enterprise developer space. Over the last few years, Suse put a strong emphasis on the OpenStack platform, an open-source project that essentially allows big enterprises to build something in their own data centers akin to the core services of a public cloud like AWS or Azure. With this new strategy, Suse is transitioning away from OpenStack. It’s ceasing both production of new versions of its OpenStack Cloud and sales of its existing OpenStack product.

“As Suse embarks on the next stage of our growth and evolution as the world’s largest independent open source company, we will grow the business by aligning our strategy to meet the current and future needs of our enterprise customers as they move to increasingly dynamic hybrid and multi-cloud application landscapes and DevOps processes,” the company said in a statement. “We are ideally positioned to execute on this strategy and help our customers embrace the full spectrum of computing environments, from edge to core to cloud.”

Going forward, Suse will focus on its Cloud Application Platform (which is based on the open-source Cloud Foundry platform) and its Kubernetes-based container platform.

Chances are, Suse wouldn’t shut down its OpenStack services if it saw growing sales in this segment. But while the hype around OpenStack died down in recent years, it’s still among the world’s most active open-source projects and runs the production environments of some of the world’s largest companies, including some very large telcos. It took a while for the project to position itself in a space where all of the mindshare went to containers — and especially Kubernetes — for the last few years. At the same time, though, containers are also opening up new opportunities for OpenStack, as you still need some way to manage those containers and the rest of your infrastructure.

The OpenStack Foundation, the umbrella organization that helps guide the project, remains upbeat.

“The market for OpenStack distributions is settling on a core group of highly supported, well-adopted players, just as has happened with Linux and other large-scale, open-source projects,” said OpenStack Foundation COO Mark Collier in a statement. “All companies adjust strategic priorities from time to time, and for those distro providers that continue to focus on providing open-source infrastructure products for containers, VMs and bare metal in private cloud, OpenStack is the market’s leading choice.”

He also notes that analyst firm 451 Research believes there is a combined Kubernetes and OpenStack market of about $11 billion, with $7.7 billion of that focused on OpenStack. “As the overall open-source cloud market continues its march toward eight figures in revenue and beyond — most of it concentrated in OpenStack products and services — it’s clear that the natural consolidation of distros is having no impact on adoption,” Collier argues.

For Suse, though, this marks the end of its OpenStack products. For now, the company remains a top-level Platinum sponsor of the OpenStack Foundation, and Suse’s Alan Clark remains on the Foundation’s board. Suse is involved in some of the other projects under the OpenStack brand, so the company will likely remain a sponsor, but it’s probably a fair guess that it won’t continue to do so at the highest level.

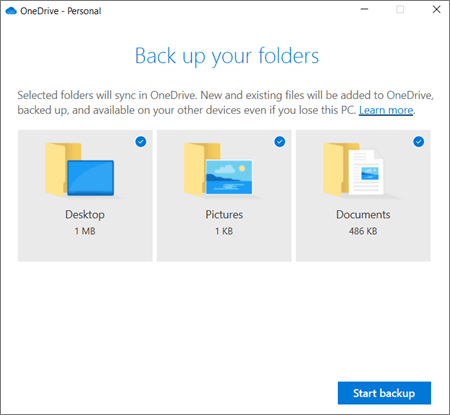
