ByteDance has long been celebrated as an “app factory” for its proven model of churning out apps and monetizing them through a robust backend of shared resources, from engineering to marketing support. The result is a roster of household-name apps: Douyin and Toutiao in China and TikTok in the rest of the world.

Meanwhile, the firm has prided itself on its “flat” internal organization, with a flurry of self-governing products. As of September 2020, founder Zhang Yiming had 14 executives reporting to him, according to data compiled by The Information.

But as the firm continues to flourish, its stewards have recognized that a structural shakeup is needed to fit its ballooning size. That change has now come: ByteDance will group its apps and operations under six new “business units,” according to an internal document seen by TechCrunch on Tuesday.

Notably, Shou Zi Chew, currently chief executive at TikTok, will no longer be ByteDance’s chief financial officer. Chew, a banking executive who previously worked at Xiaomi as CFO, joined ByteDance in March as its CFO and was made TikTok’s CEO in May.

At the time of his appointment as the new finance boss, speculation was rife that Chew was brought in to work on ByteDance’s initial public offering.

But the debacle of Ant Group’s planned IPO, followed by the regulatory crackdown on Didi, has dimmed the prospects of Chinese internet firms seeking public listings. The other, and perhaps more difficult, question for ByteDance is: which of the company’s units will be listed, and where?

Rubo Liang, a co-founder of ByteDance, has taken over from Zhang Yiming as the firm’s CEO.

The six newly minted business units are useful indicators of ByteDance’s strategic priorities for the foreseeable future. They are:

TikTok: This unit will manage the video-sharing app and any business spawned by it, such as the firm’s e-commerce operations outside China.

Douyin: The eponymous app is the Chinese version of TikTok and is now officially the name of a standalone business unit overseeing ByteDance’s lucrative ad-powered content businesses in China. Xigua, which features longer videos, and Toutiao, the firm’s popular news aggregator, will be folded under the unit.

Dali Education: Dali was created in 2020 as ByteDance’s foray into the online learning sector. It now oversees the firm’s vocational learning, education hardware (like a lamp that allows busy parents to remotely keep their kids’ company during homework time), and campus learning initiatives.

Lark: A workplace collaboration suite, Lark represents ByteDance’s ambition to combine Slack and G Suite in a single product and is part of the company’s B2B bet.

BytePlus: This is essentially the infrastructure piece of ByteDance’s B2B endeavor. The unit sells AI and data tools to enterprise clients.

Nuverse: It’s ByteDance’s game development and publishing unit, which also manages titles intended for overseas markets. Gaming companies in China are increasingly seeking growth abroad amid regulatory uncertainties.

TikTok’s identity

The creation of a TikTok business unit is noteworthy. ByteDance has been distancing TikTok from the rest of its Chinese businesses ever since Western concerns arose over the video app’s links to the Chinese government. TikTok says it stores all its data in the U.S., with backup servers in Singapore, rather than in Beijing, where its parent company is headquartered.

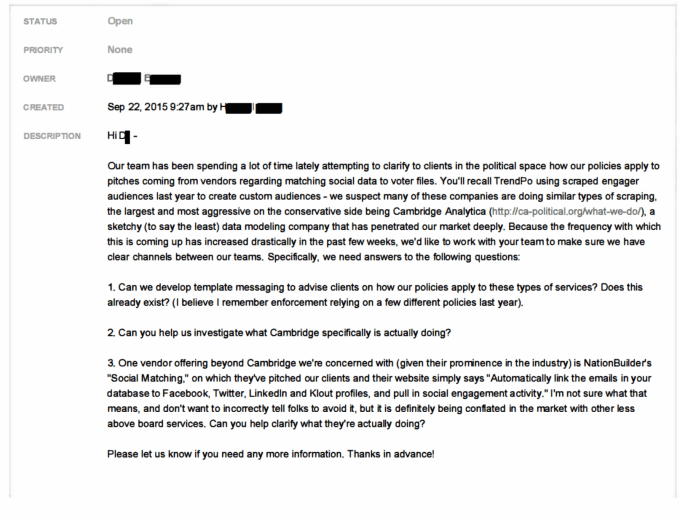

These measures haven’t been enough to allay U.S. regulators’ worries. At its first-ever U.S. congressional hearing, TikTok faced tough questions and was repeatedly asked to clarify its wording.

Senator Ted Cruz pressed the firm to address whether Beijing ByteDance Technology is “part of” TikTok’s “corporate group,” a term used in TikTok’s privacy policy, which states that the app “may share all of the information we collect with a parent, subsidiary, or other affiliate of our corporate group.”

TikTok’s representative maintained that TikTok has “no affiliation” with Beijing ByteDance Technology, ByteDance’s Chinese entity in which the government took a stake and a board seat this year. That wording did not satisfy the senator.
