Remote work is no longer a new topic, as much of the world has now been doing it for a year or more because of the COVID-19 pandemic.

Companies, big and small, have had to react in myriad ways. Many of the initial challenges have centered on workflow, productivity and the like. But one aspect of the remote work shift that is getting less attention is culture.

A 100% remote startup that was tackling the issue way before COVID-19 was even around is now seeing a big surge in demand for its offering that aims to help companies address the “people” challenge of remote work. It started its life with the name Icebreaker to reflect the aim of “breaking the ice” with people with whom you work.

“We designed the initial version of our product as a way to connect people who’d never met, kind of virtual speed dating,” says co-founder and CEO Perry Rosenstein. “But we realized that people were using it for far more than that.”

So over time, its offering has evolved to include a bigger goal of helping people get together beyond an initial encounter, hence its new name: Gatheround.

“For remote companies, a big challenge or problem that is now bordering on a crisis is how to build connection, trust and empathy between people that aren’t sharing a physical space,” says co-founder and COO Lisa Conn. “There’s no five-minute conversations after meetings, no shared meals, no cafeterias — this is where connection organically builds.”

Organizations should be concerned, Gatheround maintains, that as we move more remote, work will become more transactional and people will become more isolated. They can’t ignore that humans are largely social creatures, Conn said.

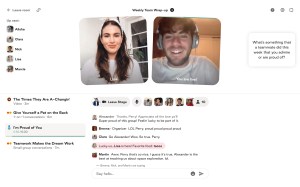

The startup aims to bring people together online through real-time events that include chats, videos and one-on-one and group conversations. It also provides templates to facilitate cultural rituals and learning & development (L&D) activities, such as all-hands meetings and workshops on diversity, equity and inclusion.

Gatheround’s video conversations aim to be a refreshing complement to Slack conversations, which, despite serving the function of communication, still don’t bring users face-to-face.

Image Credits: Gatheround

Since its inception, Gatheround has quietly built up an impressive customer base, including 28 Fortune 500s, 11 of the 15 biggest U.S. tech companies, 26 of the top 30 universities and more than 700 educational institutions. Specifically, those users include Asana, Coinbase, Fiverr, Westfield and DigitalOcean. Universities, academic centers and nonprofits, including Georgetown’s Institute of Politics and Public Service and Chan Zuckerberg Initiative, are also customers. To date, Gatheround has had about 260,000 users hold 570,000 conversations on its SaaS-based video platform.

All its growth so far has been organic, mostly referrals and word of mouth. Now, armed with $3.5 million in seed funding that builds upon a previous $500,000 raised, Gatheround is ready to aggressively go to market and build upon the momentum it’s seeing.

Venture firms Homebrew and Bloomberg Beta co-led the company’s latest raise, which included participation from angel investors such as Stripe COO Claire Hughes Johnson, Meetup co-founder Scott Heiferman, Li Jin and Lenny Rachitsky.

Co-founders Rosenstein, Conn and Alexander McCormmach describe themselves as “experienced community builders,” having previously worked on President Obama’s campaigns as well as at companies like Facebook, Change.org and Hustle.

The trio emphasize that Gatheround is also very different from Zoom and video conferencing apps in that its platform gives people prompts and organized ways to get to know and learn about each other as well as the flexibility to customize events.

“We’re fundamentally a connection platform, here to help organizations connect their people via real-time events that are not just really fun, but meaningful,” Conn said.

Homebrew Partner Hunter Walk says his firm was attracted to the company’s founder-market fit.

“They’re a really interesting combination of founders with all this experience community building on the political activism side, combined with really great product, design and operational skills,” he told TechCrunch. “It was kind of unique that they didn’t come out of an enterprise product background or pure social background.”

He was also drawn to the personalized nature of Gatheround’s platform, considering that it has become clear over the past year that the software powering the future of work “needs emotional intelligence.”

“Many companies in 2020 have focused on making remote work more productive. But what people desire more than ever is a way to deeply and meaningfully connect with their colleagues,” Walk said. “Gatheround does that better than any platform out there. I’ve never seen people come together virtually like they do on Gatheround, asking questions, sharing stories and learning as a group.”

James Cham, partner at Bloomberg Beta, agrees with Walk that the founding team’s knowledge of behavioral psychology, group dynamics and community building gives them an edge.

“More than anything, though, they care about helping the world unite and feel connected, and have spent their entire careers building organizations to make that happen,” he said in a written statement. “So it was a no-brainer to back Gatheround, and I can’t wait to see the impact they have on society.”

The 14-person team will likely expand with the new capital, which will also go toward adding more functionality and details to the Gatheround product.

“Even before the pandemic, remote work was accelerating faster than other forms of work,” Conn said. “Now that’s intensified even more.”

Gatheround is not the only company attempting to tackle this space. Ireland-based Workvivo raised $16 million last year, and earlier this year Microsoft launched Viva, its new “employee experience platform.”