Industrial robotics is on track to be worth around $20 billion by 2020, but while it may have something in common with other categories of cutting-edge tech (innovative use of artificial intelligence, pushing the boundaries of autonomous machines that are disrupting pre-existing technology), there is one key area where it differs: each robotics firm uses its own proprietary software and operating systems to run its machines, making programming the robots complicated, time-consuming and expensive.

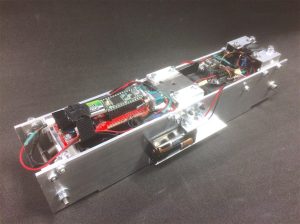

A startup out of Germany called Wandelbots (a portmanteau of “change” and “robots” in German) has come up with an innovative way around that challenge. It has built a bridge that connects the operating systems of the 12 most popular industrial robotics makers with what a business wants the robots to do, so that they can now be trained by a person wearing a jacket kitted out with dozens of sensors.

“We are providing a universal language to teach those robots in the same way, independent of the technology stack,” CEO Christian Piechnick said in an interview. Essentially reverse-engineering the way a lot of software is built, Wandelbots is creating a Linux-like layer that underpins all of these systems.

With some very big deals under its belt with the likes of Volkswagen, Infineon and Midea, the startup out of Dresden has now raised €6 million ($6.8 million), a Series A to take it to its next level of growth and specifically to open an office in China. The funding comes from Paua Ventures, EQT Ventures and other unnamed previous investors. (It had previously raised a seed round around the time it was a finalist in our Disrupt Battlefield last year, pre-launch.)

Notably, Paua has a bit of a history of backing transformational software companies (it also invests in Stripe), and EQT, being connected to a private equity firm, is treating this as a strategic investment that might be deployed across its own assets.

Piechnick, who co-founded Wandelbots with Georg Püschel, Maria Piechnick, Sebastian Werner, Jan Falkenberg and Giang Nguyen on the back of research they did at university, said that programming an industrial robot to perform a task could in the past take three months, require specialist systems integrators and, of course, add cost on top of the machines themselves.

Someone with no technical knowledge, wearing one of Wandelbots’ jackets, can bring that process down to 10 minutes, with costs reduced by a factor of ten.

“In order to offer competitive products in the face of the rapid changes within the automotive industry, we need more cost savings and greater speed in the areas of production and automation of manufacturing processes,” said Marco Weiß, Head of New Mobility & Innovations at Volkswagen Sachsen GmbH, in a statement. “Wandelbots’ technology opens up significant opportunities for automation. Using Wandelbots’ offering, the installation and setup of robotic solutions can be implemented incredibly quickly by teams with limited programming skills.”

Wandelbots’ focus at the moment is on programming robotic arms rather than the mobile machines that you may have seen Amazon and others using to move goods around warehouses. For now, this means that there is not a strong crossover in terms of competition between these two branches of enterprise robotics.

However, Amazon has been expanding into areas beyond moving goods around warehouses. For example, it has been working on ways of using computer vision and robotic arms to identify the best fruits and vegetables in boxes and pick them out for grocery orders.

Innovations like that from Amazon and others could put more pressure on robotics makers to innovate, although Piechnick notes that so far there has been very little movement, and there may never be any. That, in turn, creates more opportunity for companies like his that make robots easier to use.

“Attempts to build robotics operating systems have been tried over and over again, and each time it’s failed,” he said. “But robotics has completely different requirements, such as real time computing, safety issues and many other different factors. A robot in operation is much more complicated than a phone.” He added that Wandelbots has a number of its own innovations currently going through the patent process, which will expand both what its software can train a robot to do and how. (This may see more than jackets enter the mix.)

As with companies in robotic process automation, which uses AI to take over more mundane back-office tasks, Piechnick maintains that what he has built, and the rise of robotics overall, will not replace workers. Instead, it will move them into other roles while allowing businesses to take on work that humans might never have been able to execute.

“No company we work with has ever replaced a human worker with a robot,” he said, explaining that generally the upgrade is from machine to better machine. “It makes you more efficient and cost reductive, and it allows you to put your good people on more complicated tasks.”

Currently, Wandelbots is working with large-scale enterprises, although ultimately, it’s smaller businesses that are its target customer, he said.

“Previously the ROI on robots was too difficult for SMEs,” he said. “With our tech this changes.”

“Wandelbots will be one of the key companies enabling the mass-adoption of industrial robotics by revolutionizing how robots are trained and used,” said Georg Stockinger, Partner at Paua Ventures, in a statement. “Over the last few years, we’ve seen a steep decline in robotic hardware costs. Now, Wandelbots resolves the remaining hurdle to disruptive growth in industrial automation – the ease and speed of implementation and teaching. Both factors together will create a perfect storm, driving the next wave of industrial revolution.”