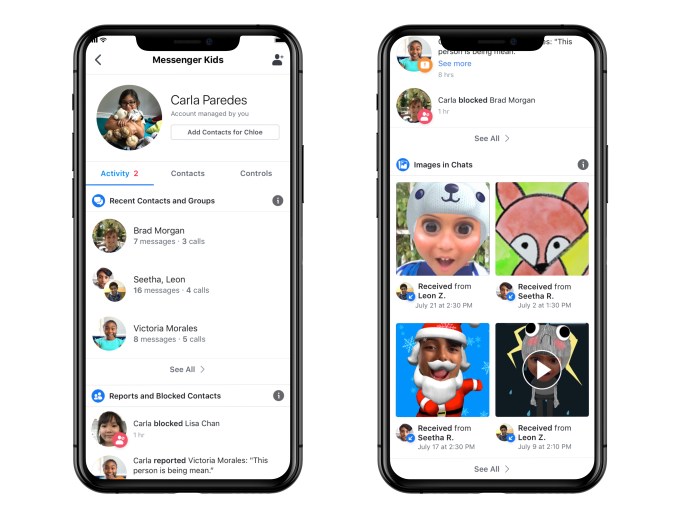

Facebook’s messaging app for families with children, Messenger Kids, is being updated today with new tools and features to give parents more oversight and control over their kids’ chats. Now, parents will be able to see who a child is chatting with and how often, view recent photos and videos sent through chat, access the child’s reported and blocked lists, remotely log out of the app on other devices, and download the child’s chats, images and videos, both sent and received. The company is also introducing a new blocking mechanism and is updating the app’s Privacy Policy to include additional information about data collection, use and deletion practices.

The Messenger Kids app was first introduced in late 2017 as a way for kids to message friends and family with parental oversight. It arrived at a time when kids were already embracing messaging, but were often doing so on less controlled platforms like Kik, which attracted predators. Messenger Kids instead lets the child’s parents determine who the child can chat with and when, through built-in parental controls.

In our household, for example, it became a convenient tool for chatting with relatives, like grandparents, aunts, uncles, and cousins, as well as a few trusted friends whose parents I knew well.

But when it came time to review the chats, a lot of scrolling back was involved.

The new Messenger Kids features will help with oversight for parents who allow their kids to chat online. That decision, of course, is a personal one. Some parents don’t want their kids to have smartphones and outright ban apps, particularly ones that allow interactions. Others, myself included, believe that teaching kids to navigate the online world is part of a parent’s responsibility. And despite Facebook’s reputation, no other chat apps offer this sort of parental control, or the convenience of being able to easily add everyone in your family to a child’s chat list. (After all, grandma and grandpa are already on Facebook and Messenger, but getting them to download new apps remains difficult.)

In the updated app, parents will be able to see who a child has been chatting with over the past 30 days, and whether by text or video chat. This can save parents time: they may not feel the need to review chats with trusted family members, for instance, and can instead focus their energy on reviewing chats with friends. A log of images will help parents check whether the images and videos being sent and received are appropriate, and remove or block them if not.

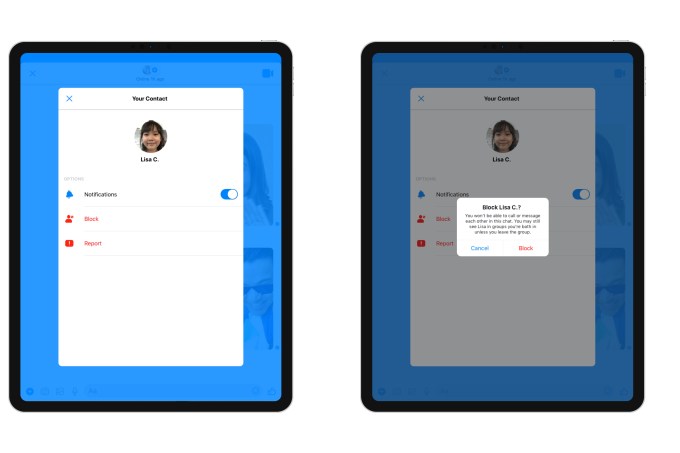

Parents can also now see if a child has blocked or reported a user in the app, or if they’ve unblocked them. This could be useful for identifying problematic friends: the kind who sometimes cause trouble, but are later forgiven, then unblocked. (Anyone who’s dealt with tween-age drama can attest that there’s always one in every group!) With this information, parents can sit down with the child to talk about when to take the step of blocking someone, and when a disagreement with a friend can instead be worked out. These are decisions that a child will have to make on their own one day, so being able to use this as a teaching moment is useful.

With the update, unblocking is supported and parents are still able to review chats with blocked contacts. However, blocked contacts will remain visible to one another and will stay in shared group chats. They just aren’t able to message one-on-one. Kids are warned if they return to or are added to chats with blocked contacts. (If parents want a full block, they can just remove the blocked contact from the child’s contact list, as before.)

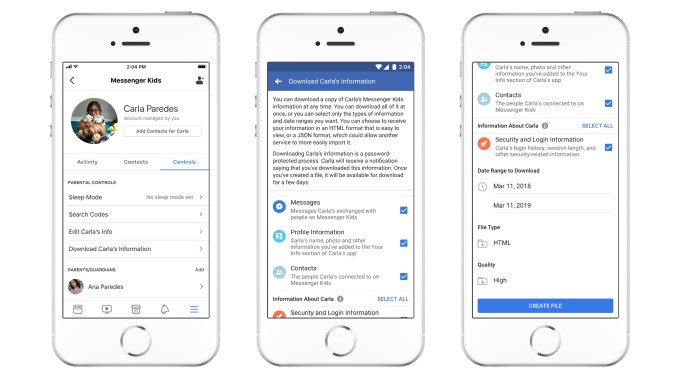

Remote device logout lets you make sure the child is logged out of Messenger Kids on devices you can’t physically access and control — like a misplaced phone. And the option to download the child’s information, similar to Facebook’s feature, lets you download a copy of everything — messages, images, and videos. This could be a way to preserve their chat history when the child outgrows the app.

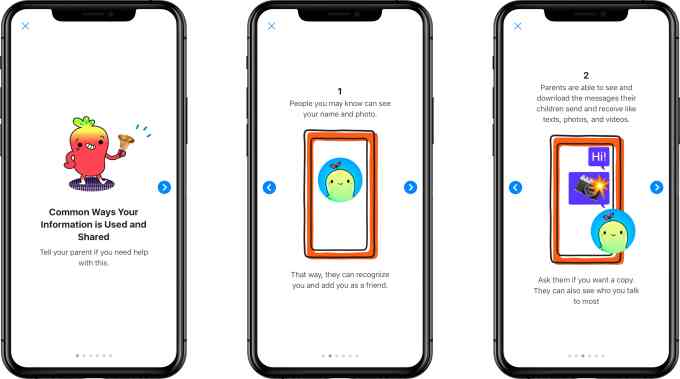

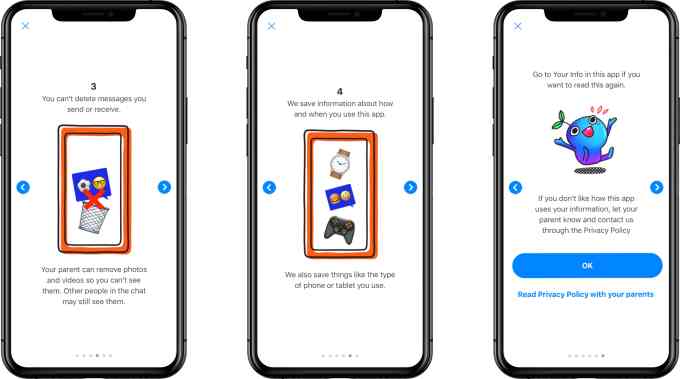

The Messenger Kids privacy policy was updated as well, to better detail the information being collected. The app also attempts to explain this to kids in plain language, using cute photos. In reality, parents should read the policy for themselves and decide accordingly.

The app collects a lot of information — including names, profile photos, demographic details (gender and birthday), a child’s connection to parents, contacts’ information (like most frequent contacts), app usage information, device attributes and unique identifiers, data from device settings (like time zones or access to camera and photos), network information, and information provided from things like bug reports or feedback/contact forms.

To some extent, this information is needed to help the app properly operate or to alert parents about a child’s activities. But the policy includes less transparent language about the collected information being used to “evaluate, troubleshoot, improve, create, and develop our products” or being shared with other Facebook Companies. There’s a lot of wiggle room there for extensive data collection on Facebook’s part. Service providers offering technical infrastructure and support, like a content delivery network or customer service, may also gain access to collected information, but must adhere to “strict data confidentiality and security obligations,” the policy claims, without offering further details on what those are.

Despite its lengthiness, the policy leaves plenty of room for Facebook to collect private information and share it. If you have a Facebook account, you’ve already agreed to this sort of “deal with the devil” for yourself, in order to benefit from Facebook’s free service. But parents need to strongly consider if they’re comfortable making the same decision for their children.

The policy also describes things Facebook plans to roll out later, when Messenger Kids is updated to support older kids. As kids enter their tween and teen years, parents may want to loosen the reins a bit. The new policy covers those changes as well.

It’s unfortunate that the easiest tool, and the one with the best parental controls, is coming from Facebook. The market is ripe for a disruptor in the kids’ space, but there’s not enough money in that, apparently. Facebook, of course, sees the potential of getting kids hooked early and can invest in a product that isn’t directly monetized. Few companies can afford to do this, but Apple would be best positioned to take Facebook on in this area.

Apple’s iMessage is a large, secure and private platform, but it lacks these advanced parental controls, as well as the other bells and whistles (like built-in AR filters) that make the Messenger Kids app fun. Critically, it doesn’t work on non-Apple devices, which will always be a limitation when it comes to finding an app the extended family can use together.

To be clear, there is no way to stop Facebook from vacuuming up the child’s information except to delete the child’s Messenger Kids account through the Facebook Help Center. So choose wisely.
