The UK government has taken the next step in its grand policymaking challenge to tame the worst excesses of social media by regulating a broad range of online harms — naming the existing communications watchdog, Ofcom, as its preferred pick for enforcing rules around ‘harmful speech’ on platforms such as Facebook, Snapchat and TikTok in future.

Last April the previous Conservative-led government set out populist but controversial proposals to place a legal duty of care on Internet platforms — responding to growing public concern about the types of content kids are being exposed to online.

Its white paper covers a broad range of online content — from terrorism, violence and hate speech, to child exploitation, self-harm/suicide, cyber bullying, disinformation and age-inappropriate material — with the government setting out a plan to require platforms to take “reasonable” steps to protect their users from a range of harms.

However digital and civil rights campaigners warn the plan will have a huge impact on online speech and privacy, arguing it will put a legal requirement on platforms to closely monitor all users and apply speech-chilling filtering technologies on uploads in order to comply with very broadly defined concepts of harm — dubbing it state censorship. Legal experts are also critical.

The (now) Conservative majority government has nonetheless said it remains committed to the legislation.

Today it responded to some of the concerns being raised about the plan’s impact on freedom of expression, publishing a partial response to the public consultation on the Online Harms White Paper, although a draft bill remains pending, with no timeline confirmed.

“Safeguards for freedom of expression have been built in throughout the framework,” the government writes in an executive summary. “Rather than requiring the removal of specific pieces of legal content, regulation will focus on the wider systems and processes that platforms have in place to deal with online harms, while maintaining a proportionate and risk-based approach.”

It says it’s planning to set a different bar for content deemed illegal vs content that has “potential to cause harm”, with the heaviest content removal requirements being planned for terrorist and child sexual exploitation content. Companies will not, however, be forced to remove “specific pieces of legal content”, as the government puts it.

Ofcom, as the online harms regulator, will also not be investigating or adjudicating on “individual complaints”.

“The new regulatory framework will instead require companies, where relevant, to explicitly state what content and behaviour they deem to be acceptable on their sites and enforce this consistently and transparently. All companies in scope will need to ensure a higher level of protection for children, and take reasonable steps to protect them from inappropriate or harmful content,” it writes.

“Companies will be able to decide what type of legal content or behaviour is acceptable on their services, but must take reasonable steps to protect children from harm. They will need to set this out in clear and accessible terms and conditions and enforce these effectively, consistently and transparently. The proposed approach will improve transparency for users about which content is and is not acceptable on different platforms, and will enhance users’ ability to challenge removal of content where this occurs.”

Another requirement will be that companies have “effective and proportionate user redress mechanisms” — enabling users to report harmful content and challenge content takedown “where necessary”.

“This will give users clearer, more effective and more accessible avenues to question content takedown, which is an important safeguard for the right to freedom of expression,” the government suggests, adding that: “These processes will need to be transparent, in line with terms and conditions, and consistently applied.”

Ministers say they have not yet made a decision on what kind of liability senior management of covered businesses may face under the planned law, nor on additional business disruption measures — with the government saying it will set out its final policy position in the Spring.

“We recognise the importance of the regulator having a range of enforcement powers that it uses in a fair, proportionate and transparent way. It is equally essential that company executives are sufficiently incentivised to take online safety seriously and that the regulator can take action when they fail to do so,” it writes.

It’s also not clear how businesses will be assessed as being in (or out of) scope of the regulation.

“Just because a business has a social media page that does not bring it in scope of regulation,” the government response notes. “To be in scope, a business would have to operate its own website with the functionality to enable sharing of user-generated content, or user interactions. We will introduce this legislation proportionately, minimising the regulatory burden on small businesses. Most small businesses where there is a lower risk of harm occurring will not have to make disproportionately burdensome changes to their service to be compliant with the proposed regulation.”

The government is clear in the response that Online Harms remains “a key legislative priority”.

“We have a comprehensive programme of work planned to ensure that we keep momentum until legislation is introduced as soon as parliamentary time allows,” it writes, describing today’s response report as “an iterative step as we consider how best to approach this complex and important issue” — and adding: “We will continue to engage closely with industry and civil society as we finalise the remaining policy.”

In the meantime the government says it’s working on a package of measures “to ensure progress now on online safety” — including interim codes of practice, with guidance for companies on tackling terrorist and child sexual abuse and exploitation content online; an annual government transparency report, which it says it will publish “in the next few months”; and a media literacy strategy, to support public awareness of online security and privacy.

It adds that it expects social media platforms to “take action now to tackle harmful content or activity on their services” — ahead of the more formal requirements coming in.

Facebook-owned Instagram has come in for high-level pressure from ministers over how it handles content promoting self-harm and suicide after the media picked up on a campaign by the family of a schoolgirl who killed herself after being exposed to Instagram content encouraging self-harm.

Instagram subsequently announced changes to its policies for handling content that encourages or depicts self-harm/suicide — saying it would limit how it could be accessed. This later morphed into a ban on some of this content.

The government said today that companies offering online services that involve user generated content or user interactions are expected to make use of what it dubs “a proportionate range of tools” — including age assurance, and age verification technologies — to prevent kids from accessing age-inappropriate content and “protect them from other harms”.

This is also the piece of the planned legislation intended to pick up the baton of the Digital Economy Act’s porn block proposals — which the government dropped last year, saying it would bake equivalent measures into the forthcoming Online Harms legislation.

The Home Office has been consulting with social media companies on devising robust age verification technologies for many months.

In its own response statement today, Ofcom — which would be responsible for policy detail under the current proposals — said it will work with the government to ensure “any regulation provides effective protection for people online”, and, pending appointment, “consider what we can do before legislation is passed”.

The Online Harms plan is not the only Internet-related work ongoing in Whitehall, with ministers noting that: “Work on electoral integrity and related online transparency issues is being taken forward as part of the Defending Democracy programme together with the Cabinet Office.”

Back in 2018 a UK parliamentary committee called for a levy on social media platforms to fund digital literacy programs to combat online disinformation and defend democratic processes, during an enquiry into the use of social media for digital campaigning. However the UK government has been slower to act on this front.

The former chair of the DCMS committee, Damian Collins, called today for any future social media regulator to have “real powers in law” — including the ability to “investigate and apply sanctions to companies which fail to meet their obligations”.

In the DCMS committee’s final report parliamentarians called for Facebook’s business to be investigated, raising competition and privacy concerns.

Jam is just starting to add users off its rapidly growing

Jam is just starting to add users off its rapidly growing

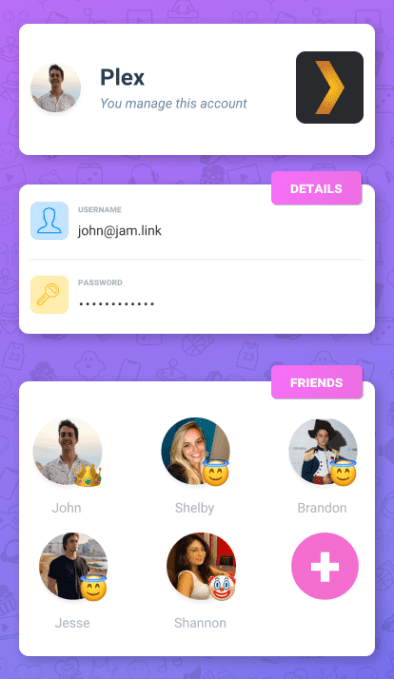

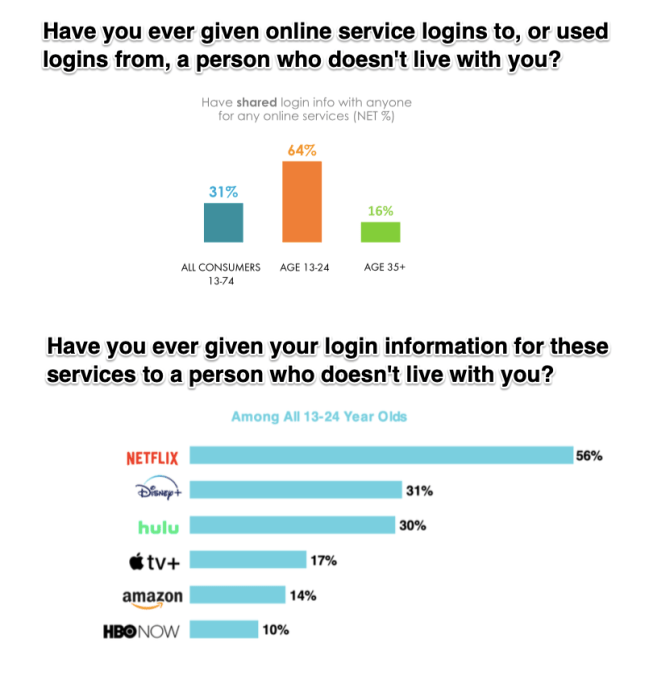

However, sharing is typically supposed to be amongst a customer’s own devices or within their household, or they’re supposed to pay for a family plan. We asked Netflix, Hulu, CBS, Disney, and Spotify for comment, and did not receive any on the record comments. However,

However, sharing is typically supposed to be amongst a customer’s own devices or within their household, or they’re supposed to pay for a family plan. We asked Netflix, Hulu, CBS, Disney, and Spotify for comment, and did not receive any on the record comments. However,