Just under a month ago Facebook switched on global availability of a tool which affords users a glimpse into the murky world of tracking that its business relies upon to profile users of the wider web for ad targeting purposes.

Facebook is not going boldly into transparent daylight — but rather offering what privacy rights advocacy group Privacy International has dubbed “a tiny sticking plaster on a much wider problem”.

The problem it’s referring to is the lack of active and informed consent for mass surveillance of Internet users via background tracking technologies embedded into apps and websites, including as people browse outside Facebook’s own content garden.

The dominant social platform is also only offering this feature in the wake of the 2018 Cambridge Analytica data misuse scandal, when Mark Zuckerberg faced awkward questions in Congress about the extent of Facebook’s general web tracking. Since then policymakers around the world have dialled up scrutiny of how its business operates — and realized there’s a troubling lack of transparency in and around adtech generally and Facebook specifically.

Facebook’s tracking pixels and social plugins — aka the share/like buttons that pepper the mainstream web — have created a vast tracking infrastructure which silently informs the tech giant of Internet users’ activity, even when a person hasn’t interacted with any Facebook-branded buttons.

Facebook claims this is just ‘how the web works’. And other tech giants are similarly engaged in tracking Internet users (notably Google). But as a platform with 2.2BN+ users Facebook has got a march on the lion’s share of rivals when it comes to harvesting people’s data and building out a global database of person profiles.

It’s also positioned as a dominant player in an adtech ecosystem which means it’s the one being fed with intel by data brokers and publishers who deploy tracking tech to try to survive in such a skewed system.

Meanwhile the opacity of online tracking means the average Internet user is none the wiser that Facebook can be following what they’re browsing all over the Internet. Questions of consent loom very large indeed.

Facebook is also able to track people’s usage of third party apps if a person chooses a Facebook login option, which the company encourages developers to implement in their apps. The carrot there is being able to offer a lower friction choice vs requiring users to create yet another login credential.

The price for this ‘convenience’ is data and user privacy as the Facebook login gives the tech giant a window into third party app usage.

The company has also used a VPN app it bought and badged as a security tool to glean data on third party app usage — though it’s recently stepped back from the Onavo app after a public backlash (though that did not stop it running a similar tracking program targeted at teens).

Background tracking is how Facebook’s creepy ads function (it prefers to call such behaviorally targeted ads ‘relevant’), and how they have functioned for years.

Yet it’s only in recent months that it’s offered users a glimpse into this network of online informers — by providing limited information about the entities that are passing tracking data to Facebook, as well as some limited controls.

From ‘Clear History’ to “Off-Facebook Activity”

Originally briefed in May 2018, at the crux of the Cambridge Analytica scandal, as a ‘Clear History’ option this has since been renamed ‘Off-Facebook Activity’ — a label so bloodless and devoid of ‘call to action’ that the average Facebook user, should they stumble upon it buried deep in unlovely settings menus, would more likely move along than feel moved to carry out a privacy purge.

(For the record you can access the setting here — but you do need to be logged into Facebook to do so.)

The other problem is that Facebook’s tool doesn’t actually let you purge your browsing history, it just delinks it from being associated with your Facebook ID. There is no option to actually clear your browsing history via its button. Another reason for the name switch. So, no, Facebook hasn’t built a clear history ‘button’.

“While we welcome the effort to offer more transparency to users by showing the companies from which Facebook is receiving personal data, the tool offers little way for users to take any action,” said Privacy International this week, criticizing Facebook for “not telling you everything”.

As the saying goes, a little knowledge can be a dangerous thing. So a little transparency implies — well — anything but clarity. And Privacy International sums up the Off-Facebook Activity tool with an apt oxymoron — describing it as “a new window to the opacity”.

“This tool illustrates just how impossible it is for users to prevent external data from being shared with Facebook,” it writes, warning with emphasis: “Without meaningful information about what data is collected and shared, and what are the ways for the user to opt-out from such collection, Off-Facebook activity is just another incomplete glimpse into Facebook’s opaque practices when it comes to tracking users and consolidating their profiles.”

It points out, for instance, that the information provided here is limited to a “simple name” — thereby preventing the user from “exercising their right to seek more information about how this data was collected”, which EU users at least are entitled to.

“As users we are entitled to know the name/contact details of companies that claim to have interacted with us. If the only thing we see, for example, is the random name of an artist we’ve never heard before (true story), how are we supposed to know whether it is their record label, agent, marketing company or even them personally targeting us with ads?” it adds.

Another criticism is Facebook is only providing limited information about each data transfer — with Privacy International noting some events are marked “under a cryptic CUSTOM” label; and that Facebook provides “no information regarding how the data was collected by the advertiser (Facebook SDK, tracking pixel, like button…) and on what device, leaving users in the dark regarding the circumstances under which this data collection took place”.

“Does Facebook really display everything they process/store about those events in the log/export?” queries privacy researcher Wolfie Christl, who tracks the adtech industry’s tracking techniques. “They have to, because otherwise they don’t fulfil their SAR [Subject Access Request] obligations [under EU law].”

Christl notes Facebook makes users jump through an additional “download” hoop in order to view data on tracked events — and even then, as Privacy International points out, it gives up only a limited view of what has actually been tracked…

“For example, why doesn’t Facebook list the specific sites/URLs visited? Do they infer data from the domains e.g. categories? If yes, why is this not in the logs?” Christl asks.

We reached out to Facebook with a number of questions, including why it doesn’t provide more detail by default. It responded with this statement attributed to a spokesperson:

We offer a variety of tools to help people access their Facebook information, and we’ve designed these tools to comply with relevant laws, including GDPR. We disagree with this [Privacy International] article’s claims and would welcome the chance to discuss them with Privacy International.

Facebook also said it’s continuing to develop which information it surfaces through the Off-Facebook Activity tool — and said it welcomes feedback on this.

We also asked it about the legal bases it uses to process people’s information that’s been obtained via its tracking pixels and social plug-ins. It did not provide a response to those questions.

Six names, many questions…

When the company launched the Off-Facebook Activity tool a snap poll of available TechCrunch colleagues showed very diverse results for our respective tallies (which also may not show the most recent activity, per other Facebook caveats), ranging from one colleague who had an eye-watering 1,117 entities (likely down to doing a lot of app testing), to several with a few hundred apiece, to a couple in the middle tens.

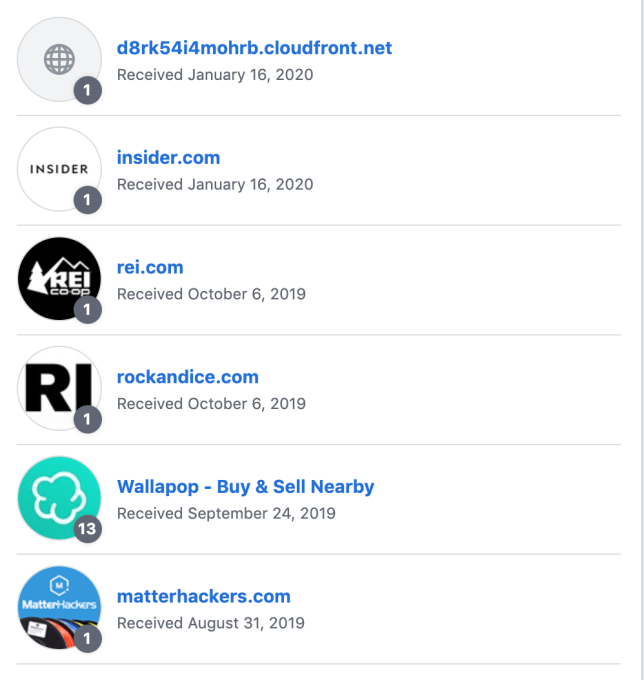

In my case I had just six. But from my point of view — as an EU citizen with a suite of rights related to privacy and data protection; and as someone who aims to practice good online privacy hygiene, including having a very locked down approach to using Facebook (never using its mobile app for instance) — it was still six too many. I wanted to find out how these entities had circumvented my attempts not to be tracked.

And in the case of the first one in the list who on earth it was…

Turns out cloudfront is an Amazon Web Services Content Delivery Network subdomain. But I had to go searching online myself to figure out that the owner of that particular domain is (now) a company called Nativo.

Facebook’s list provided only very bare bones information. I also clicked to delink the first entity, since it immediately looked so weird, and found that by doing that Facebook wiped all the entries — which meant I was unable to retain access to what little additional info it had provided about the respective data transfers.

Undeterred I set out to contact each of the six companies directly with questions — asking what data of mine they had transferred to Facebook and what legal basis they thought they had for processing my information.

(On a practical level six names looked like a sample size I could at least try to follow up manually — but remember I was the TechCrunch exception; imagine trying to request data from 1,117 companies, or 450 or even 57, which were the lengths of lists of some of my colleagues.)

This process took about a month and a lot of back and forth/chasing up. It likely only yielded as much info as it did because I was asking as a journalist; an average Internet user may have had a tougher time getting attention on their questions — though, under EU law, citizens have a right to request a copy of personal data held on them.

Eventually, I was able to obtain confirmation that tracking pixels and Facebook share buttons had been involved in my data being passed to Facebook in certain instances. Even so I remain in the dark on many things. Such as exactly what personal data Facebook received.

In one case I was told by a listed company that it doesn’t know itself what data was shared — only Facebook knows because it’s implemented the company’s “proprietary code”. (Insert your own ‘WTAF’ there.)

The legal side of these transfers also remains highly opaque. From my point of view I would not intentionally consent to any of this tracking — but in some instances the entities involved claim that (my) consent was (somehow) obtained (or implied).

In other cases they said they are relying on a legal basis in EU law that’s referred to as ‘legitimate interests’. However this requires a balancing test to be carried out to ensure a business use does not have a disproportionate impact on individual rights.

I wasn’t able to ascertain whether such tests had ever been carried out.

Meanwhile, since Facebook is also making use of the tracking information from its pixels and social plug-ins (and seemingly more granular use, since some entities claimed they only get aggregate not individual data), Christl suggests it’s unlikely such a balancing test would be easy to pass for that tiny little ‘platform giant’ reason.

Notably he points out Facebook’s Business Tool terms state that it makes use of so called “event data” to “personalize features and content and to improve and secure the Facebook products” — including for “ads and recommendations”; for R&D purposes; and “to maintain the integrity of and to improve the Facebook Company Products”.

In a section of its legal terms covering the use of its pixels and SDKs Facebook also puts the onus on the entities implementing its tracking technologies to gain consent from users prior to doing so in relevant jurisdictions that “require informed consent” for tracking cookies and similar — giving the example of the EU.

“You must ensure, in a verifiable manner, that an end user provides the necessary consent before you use Facebook Business Tools to enable us to store and access cookies or other information on the end user’s device,” Facebook writes, pointing users of its tools to its Cookie Consent Guide for Sites and Apps for “suggestions on implementing consent mechanisms”.

Christl flags the contradiction between Facebook claiming users of its tracking tech need to gain prior consent vs claims I was given by some of these entities that they don’t because they’re relying on ‘legitimate interests’.

“Using LI as a legal basis is even controversial if you use a data analytics company that reliably processes personal data strictly on behalf of you,” he argues. “I guess, industry lawyers try to argue for a broader applicability of LI, but in the case of FB business tools I don’t believe that the balancing test (a businesses legitimate interests vs. the impact on the rights and freedoms of data subjects) will work in favor of LI.”

Those entities relying on legitimate interests as a legal base for tracking would still need to offer a mechanism where users can object to the processing — and I couldn’t immediately see such a mechanism in the cases in question.

One thing is crystal clear: Facebook itself does not provide a mechanism for users to object to its processing of tracking data or to opt out of targeted ads. That remains a long-standing complaint against its business in the EU which data protection regulators are still investigating.

One more thing: Non-Facebook users continue to have no way of learning what data of theirs is being tracked and transferred to Facebook. Only Facebook users have access to the Off-Facebook Activity tool, for example. Non-users can’t even access a list.

Facebook has defended its practice of tracking non-users around the Internet as necessary for unspecified ‘security purposes’. It’s an inherently disproportionate argument of course. The practice also remains under legal challenge in the EU.

Tracking the trackers

SimpleReach (aka d8rk54i4mohrb.cloudfront.net)

What is it? A California-based analytics platform (now owned by Nativo) used by publishers and content marketers to measure how well their content/native ads perform on social media. The product began life in the early noughties as a simple tool for publishers to recommend similar content at the bottom of articles before the startup pivoted, aiming to become ‘the PageRank of social’ by offering analytics tools for publishers to track engagement around content in real-time across the social web (plugging into platform APIs). It also built statistical models to predict which pieces of content will be the most social and where, generating a proprietary per article score. SimpleReach was acquired by Nativo last year to complement analytics tools the latter already offered for tracking content on the publisher/brand’s own site.

Why did it appear in your Off-Facebook Activity list? Given it’s a b2b product it does not have a visible consumer brand of its own. And, to my knowledge, I have never visited its own website prior to investigating why it appeared in my Off-Facebook Activity list. Clearly, though, I must have visited a site (or sites) that are using its tracking/analytics tools. Of course an Internet user has no obvious way to know this — unless they’re actively using tools to monitor which trackers are tracking them.

In a further quirk, neither the SimpleReach (nor Nativo) brand names appeared in my Off-Facebook Activity list. Rather a domain name was listed — d8rk54i4mohrb.cloudfront.net — which looked at first glance weird/alarming.

I found this is owned by SimpleReach by using a tracker analytics service.

Once I knew the name I was able to connect the entry to Nativo — via news reports of the acquisition — which led me to an entity I could direct questions to.

What happened when you asked them about this? There was a bit of back and forth and then they sent a detailed response to my questions in which they claim they do not share any data with Facebook — “or perform ‘off site activity’ as described on Facebook’s activity tool”.

They also suggested that their domain had appeared as a result of their tracking code being implemented on a website I had visited which had also implemented Facebook’s own trackers.

“Our technology allows our Data Controllers to insert other tracking pixels or tags, using us as a tag manager that delivers code to the page. It is possible that one of our customers added a Facebook pixel to an article you visited using our technology. This could lead Facebook to attribute this pixel to our domain, though our domain was merely a ‘carrier’ of the code,” they told me.

In terms of the data they collect, they said this: “The only Personal Data that is collected by the SimpleReach Analytics tag is your IP Address and a randomly generated id. Both of these values are processed, anonymized, and aggregated in the SimpleReach platform and not made available to anyone other than our sub-processors that are bound to process such data only on our behalf. Such values are permanently deleted from our system after 3 months. These values are used to give our customers a general idea of the number of users that visited the articles tracked.”

So, again, they suggested the reason why their domain appeared in my Off-Facebook Activity list is a combination of Nativo/SimpleReach’s tracking technologies being implemented on a site where Facebook’s retargeting pixel is also embedded — which then resulted in data about my online activity being shared with Facebook (which Facebook then attributes as coming from SimpleReach’s domain).

Commenting on this, Christl agreed it sounds as if publishers “somehow attach Facebook pixel events to SimpleReach’s cloudfront domain”.

“SimpleReach probably doesn’t get data from this. But the question is 1) is SimpleReach perhaps actually responsible (if it happens in the context of their domain); 2) The Off-Facebook activity is a mess (if it contains events related to domains whose owners are not web or app publishers).”

Nativo offered to determine whether they hold any personal information associated with the unique identifier they have assigned to my browser if I could send them this ID. However I was unable to locate such an ID (see below).

In terms of legal base to process my information the company told me: “We have the right to process data in accordance with provisions set forth in the various Data Processor agreements we have in place with Data Controllers.”

Nativo also suggested that the Offsite Activity in question might have predated its purchase of the SimpleReach technology — which occurred on March 20, 2019 — saying any activity prior to this would mean my query would need to be addressed directly with SimpleReach, Inc. which Nativo did not acquire. (However in this case the activity registered on the list was dated later than that.)

Here’s what they said on all that in full:

Thank you for submitting your data access request. We understand that you are a resident of the European Union and are submitting this request pursuant to Article 15(1) of the GDPR. Article 15(1) requires “data controllers” to respond to individuals’ requests for information about the processing of their personal data. Although Article 15(1) does not apply to Nativo because we are not a data controller with respect to your data, we have provided information below that will help us in determining the appropriate Data Controllers, which you can contact directly.

First, for details about our role in processing personal data in connection with our SimpleReach product, please see the SimpleReach Privacy Policy. As the policy explains in more detail, we provide marketing analytics services to other businesses – our customers. To take advantage of our services, our customers install our technology on their websites, which enables us to collect certain information regarding individuals’ visits to our customers’ websites. We analyze the personal information that we obtain only at the direction of our customer, and only on that customer’s behalf.

SimpleReach is an analytics tracker tool (Similar to Google Analytics) implemented by our customers to inform them of the performance of their content published around the web. “d8rk54i4mohrb.cloudfront.net” is the domain name of the servers that collect these metrics. We do not share data with Facebook or perform “off site activity” as described on Facebook’s activity tool. Our technology allows our Data Controllers to insert other tracking pixels or tags, using us as a tag manager that delivers code to the page. It is possible that one of our customers added a Facebook pixel to an article you visited using our technology. This could lead Facebook to attribute this pixel to our domain, though our domain was merely a “carrier” of the code.

The SimpleReach tool is implemented on articles posted by our customers and partners of our customers. It is possible you visited a URL that has contained our tracking code. It is also possible that the Offsite Activity you are referencing is activity by SimpleReach, Inc. before Nativo purchased the SimpleReach technology. Nativo, Inc. purchased certain technology from SimpleReach, Inc. on March 20, 2019, but we did not purchase the SimpleReach, Inc. entity itself, which remains a separate entity unaffiliated with Nativo, Inc. Accordingly, any activity that occurred before March 20, 2019 pre-dates Nativo’s use of the SimpleReach technology and should be addressed directly with SimpleReach, Inc. If, for example, TechCrunch was a publisher partner of SimpleReach, Inc. and had SimpleReach tracking code implemented on TechCrunch articles or across the TechCrunch website prior to March 20, 2019, any resulting data collection would have been conducted by SimpleReach, Inc., not by Nativo, Inc.

As mentioned above, our tracking script collects and sends information to our servers based on the articles it is implemented on. The only Personal Data that is collected by the SimpleReach Analytics tag is your IP Address and a randomly generated id. Both of these values are processed, anonymized, and aggregated in the SimpleReach platform and not made available to anyone other than our sub-processors that are bound to process such data only on our behalf. Such values are permanently deleted from our system after 3 months. These values are used to give our customers a general idea of the number of users that visited the articles tracked.

We do not, nor have we ever, shared ANY information with Facebook with regards to the information we collect from the SimpleReach Analytics tag, be it Personal Data or otherwise. However, as mentioned above, it is possible that one of our customers added a Facebook retargeting pixel to an article you visited using our technology. If that is the case, we would not have received any information collected from such pixel or have knowledge of whether, and to what extent, the customer shared information with Facebook. Without more information, we are unable to determine the specific customer (if any) on behalf of which we may have processed your personal information. However, if you send us the unique identifier we have assigned to your browser… we can determine whether we have any personal information associated with such browser on behalf of a customer controller, and, if we have, we can forward your request on to the controller to respond directly to your request.

As a Data Processor we have the right to process data in accordance with provisions set forth in the various Data Processor agreements we have in place with Data Controllers. This type of agreement is designed to protect Data Subjects and ensure that Data Processors are held to the same standards that both the GDPR and the Data Controller have put forth. This is the same type of agreement used by all other analytics tracking tools (as well as many other types of tools) such as Google Analytics, Adobe Analytics, Chartbeat, and many others.

I also asked Nativo to confirm whether Insider.com (see below) is a customer of Nativo/SimpleReach.

The company told me it could not disclose this “due to confidentiality restrictions” and would only reveal the identity of customers if “required by applicable law”.

Again, it said that if I provided the “unique identifier” assigned to my browser it would be “happy to pull a list of personal information the SimpleReach/Nativo systems currently have stored for your unique identifier (if any), including the appropriate Data Controllers”. (“If we have any personal data collected from you on behalf of Insider.com, it would come up in the list of DataControllers,” it suggested.)

I checked multiple browsers that I use on multiple devices but was unable to locate an ID attached to a SimpleReach cookie. So I also asked whether this might appear attached to any other cookie.

Their response:

Because our data is either pseudonymized or anonymized, and we do not record of any other pieces of Personal Data about you, it will not be possible for us to locate this data without the cookie value. The SimpleReach user cookie is, and has always been, in the “__srui” cookie under the “.simplereach.com” domain or any of its sub-domains. If you are unable to locate a SimpleReach user cookie by this name on your browser, it may be because you are using a different device or because you have cleared your cookies (in which case we would no longer have the ability to map any personal data we have previously collected from you to your browser or device). We do have other cookies (under the domains postrelease.com, admin.nativo.com, and cloud.nativo.com) but those cookies would not be related to the appearance of SimpleReach in the list of Off Site Activity on your Facebook account, per your original inquiry.
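The lookup the company describes, a cookie named “__srui” set under “.simplereach.com” or one of its sub-domains, can be sketched roughly as below. This is a minimal illustration, not the company’s tooling: the export format (a list of mappings with `domain`, `name` and `value` keys) and the function names are assumptions, and the sample values are placeholders rather than real identifiers.

```python
from typing import Iterable, Mapping

COOKIE_NAME = "__srui"             # cookie name quoted in the company's reply
COOKIE_DOMAIN = "simplereach.com"  # cookie domain quoted in the company's reply


def domain_matches(cookie_domain: str, parent: str) -> bool:
    """True if cookie_domain is the parent domain itself or a sub-domain of it."""
    host = cookie_domain.lstrip(".").lower()
    return host == parent or host.endswith("." + parent)


def find_simplereach_ids(cookies: Iterable[Mapping[str, str]]) -> list[str]:
    """Return the values of matching cookies from an exported cookie list.

    Assumption: each cookie is a mapping with 'domain', 'name' and 'value'
    keys. Real browser exports vary and may need adapting.
    """
    return [
        c["value"]
        for c in cookies
        if c["name"] == COOKIE_NAME and domain_matches(c["domain"], COOKIE_DOMAIN)
    ]


# Made-up sample export; the values are placeholders, not real identifiers.
sample = [
    {"domain": ".simplereach.com", "name": "__srui", "value": "placeholder-id"},
    {"domain": "cdn.simplereach.com", "name": "other", "value": "x"},
    {"domain": "notsimplereach.com", "name": "__srui", "value": "unrelated"},
]
print(find_simplereach_ids(sample))
```

An empty result would be consistent with the cookie having been cleared or never set on that browser, which is the situation described above.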

What did you learn from their inclusion in the Off-Facebook Activity list? There appeared to be a correlation between this domain and a publisher, Insider.com, which also appeared in my Off-Facebook Activity list — as both logged events bear the same date; plus Insider.com is a publisher so would fall into the right customer category for using Nativo’s tool.

Given those correlations I was able to guess Insider.com is a customer of Nativo (I confirmed this when I spoke to Insider.com). So Facebook’s tool is able to leak relational inferences related to the tracking industry by surfacing/mapping business connections that might not have been otherwise evident.
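The kind of inference described here, pairing up list entries whose logged events share a date, can be sketched in a few lines. This is only an illustration: the data layout is hypothetical and the date labels are opaque placeholders, since the actual dates are not given. A shared date is a hint worth following up, not proof of a business relationship.

```python
from collections import defaultdict
from itertools import combinations

# Hypothetical entries: (entity name, date label). The labels are opaque
# placeholders standing in for the dates shown in the tool.
entries = [
    ("d8rk54i4mohrb.cloudfront.net", "day-1"),
    ("Insider.com", "day-1"),
    ("Rei.com", "day-2"),
    ("Rock & Ice", "day-2"),
]


def co_occurring(entries):
    """Return sorted pairs of entities whose logged events share a date."""
    by_date = defaultdict(set)
    for name, date in entries:
        by_date[date].add(name)
    pairs = set()
    for names in by_date.values():
        # Every pair of entities logged on the same date is a candidate link.
        pairs.update(combinations(sorted(names), 2))
    return sorted(pairs)


for a, b in co_occurring(entries):
    print(f"{a} <-> {b}")
```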

Insider.com

What is it? A New York-based business media company which owns brands such as Business Insider and Markets Insider.

Why did it appear in your Off-Facebook Activity list? I imagine I clicked on a technology article that appeared in my Facebook News Feed, or elsewhere while I was logged into Facebook.

What happened when you asked them about this? After about a week of radio silence an employee in Insider.com’s legal department got in touch to say they could discuss the issue on background.

This person told me the information in the Off-Facebook Activity tool came from the Facebook share button which is embedded on all articles it runs on its media websites. They confirmed that the share button can share data with Facebook regardless of whether the site visitor interacts with the button or not.

In my case I certainly would not have interacted with the Facebook share button. Nonetheless data was passed, simply by virtue of loading the article page itself.
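To illustrate why a page load alone is enough, here is a rough sketch that scans a page’s markup for embedded resources (scripts, images, iframes) served from other hosts. A browser requests each of these as soon as the page renders, with no click required. The markup and hostnames below are made up for illustration and do not represent Insider.com’s or Facebook’s actual code; the class name is likewise an assumption.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class ThirdPartyFinder(HTMLParser):
    """Collect hosts of embedded resources that differ from the page's host."""

    def __init__(self, page_host: str):
        super().__init__()
        self.page_host = page_host
        self.hosts = set()

    def handle_starttag(self, tag, attrs):
        # Scripts, images and iframes are fetched as soon as the page loads.
        if tag not in {"script", "img", "iframe"}:
            return
        src = dict(attrs).get("src")
        if not src:
            return
        host = urlparse(src).hostname
        # Simplification: any host other than the exact page host counts
        # as third-party, including the site's own sub-domains.
        if host and host != self.page_host:
            self.hosts.add(host)


# Made-up page markup; the hosts are illustrative placeholders.
html = """
<html><body>
<script src="https://widgets.social.example/sdk.js"></script>
<img src="https://pixel.tracker.example/tr?ev=PageView" width="1" height="1">
<img src="https://news.example/logo.png">
<a href="https://elsewhere.example/">a link is not fetched on load</a>
</body></html>
"""

finder = ThirdPartyFinder("news.example")
finder.feed(html)
print(sorted(finder.hosts))
```

The plain link is ignored because it is only requested if the reader clicks it, whereas the script and the 1x1 image are requested automatically.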

Insider.com said the Facebook share button widget is integrated into its sites using a standard set-up that Facebook intends publishers to use. If the share button is clicked information related to that action would be shared with Facebook and would also be received by Insider.com (though, in this scenario, it said it doesn’t get any personalized information — but rather gets aggregate data).

Facebook can also automatically collect other information when a user visits a webpage which incorporates its social plug-ins.

Asked whether Insider.com knows what information Facebook receives via this passive route the company told me it does not — noting the plug-in runs proprietary Facebook code.

Asked how it’s collecting consent from users for their data to be shared passively with Facebook, Insider.com said its Privacy Policy stipulates users consent to sharing their information with Facebook and other social media sites. It also said it uses the legal ground known as legitimate interests to provide functionality and derive analytics on articles.

In the active case (of a user clicking to share an article) Insider.com said it interprets the user’s action as consent.

Insider.com confirmed it uses SimpleReach/Nativo analytics tools, meaning site visitor data is also being passed to Nativo when a user lands on an article. It said consent for this data-sharing is included within its consent management platform (it uses a CMP made by Forcepoint) which asks site visitors to specify their cookie choices.

Here site visitors can choose for their data not to be shared for analytics purposes (which Insider.com said would prevent data being passed).

I usually apply all cookie consent opt outs, where available, so I’m a little surprised Nativo/SimpleReach was passed my data from an Insider.com webpage. Either I failed to click the opt out one time or failed to respond to the cookie notice and data was passed by default.

It’s also possible I did opt out but data was passed anyway — as there has been research which has found a proportion of cookie notifications ignore choices and pass data anyway (unintentionally or otherwise).

Follow up questions I sent to Insider.com after we talked:

1) Can you confirm whether Insider has performed a legitimate interests assessment?

2) Does Insider have a site mechanism where users can object to the passive data transfer to Facebook from the share buttons?

Insider.com did not respond to my additional questions.

What did you learn from their inclusion in the Off-Facebook Activity list? That Insider.com is a customer of Nativo/SimpleReach.

Rei.com

What is it? A Washington-based ecommerce website selling outdoor gear.

Why did it appear in your Off-Facebook Activity list? I don’t recall ever visiting their site prior to looking into why it appeared in the list so I’m really not sure.

What happened when you asked them about this? After saying it would investigate it followed up with a statement, rather than detailed responses to my questions, in which it claims it does not hold any personal data associated with — presumably — my TechCrunch email, since it did not ask me what data to check against.

It also appeared to be claiming that it uses Facebook tracking pixels/tags on its website, without explicitly saying as much, writing that: “Facebook may collect information about your interactions with our websites and mobile apps and reflect that information to you through their Off-Facebook Activity tool.”

It claims it has no access to this information, which it says is “pseudonymous to us”, but suggested that if I have a Facebook account Facebook could link any browsing on Rei’s site to my Facebook identity and therefore track my activity.

The company also pointed me to a Facebook Help Center post where the company names some of the activities that might have resulted in Rei’s website sending activity data on me to Facebook (which it could then link to my Facebook ID) — although Facebook’s list is not exhaustive (included are: “viewing content”, “searching for an item”, “adding an item to a shopping cart” and “making a donation” among other activities the company tracks by having its code embedded on third parties’ sites).

Here’s Rei’s statement in full:

Thank you for your patience as we looked into your questions. We have checked our systems and determined that REI does not maintain any personal data associated with you based on the information you provided. Note, however, that Facebook may collect information about your interactions with our websites and mobile apps and reflect that information to you through their Off-Facebook Activity tool. The information that Facebook collects in this manner is pseudonymous to us — meaning we cannot identify you using the information and we do not maintain the information in a manner that is linked to your name or other identifying information. However, if you have a Facebook account, Facebook may be able to match this activity to your Facebook account via a unique identifier unavailable to REI. (Funnily enough, while researching this I found TechCrunch in MY list of Off-Facebook activity!)

For a complete list of activities that could have resulted in REI sharing pseudonymous information about you with Facebook, this Facebook Help Center article may be useful. For a detailed description of the ways in which we may collect and share customer information, the purposes for which we may process your data, and rights available to EEA residents, please refer to our Privacy Policy. For information about how REI uses cookies, please refer to our Cookie Policy.

As a follow-up question I asked Rei to tell me which Facebook tools it uses, pointing out that: “Given that, just because you aren’t (as I understand it) directly using my data yourself that does not mean you are not responsible for my data being transferred to Facebook.”

The company did not respond to that point.

I also previously asked Rei.com to confirm whether it has any data sharing arrangements with the publisher of Rock & Ice magazine (see below). And, if so, to confirm the processes involved in data being shared. Again, I got no response to that.

What did you learn from their inclusion in the Off-Facebook Activity list? Given that Rei.com appeared alongside Rock & Ice on the list — both displaying the same date and just one activity apiece — I surmised they have some kind of data-sharing arrangement. They are also both outdoors brands so there would be obvious commercial ‘synergies’ to underpin such an arrangement.

That said, neither would confirm a business relationship to me. But Facebook’s list heavily implies there is some background data-sharing going on

Rock & Ice magazine

What is it? A climbing magazine produced by a California-based publisher, Big Stone Publishing

Why did it appear in your Off-Facebook Activity list? I imagine I clicked on a link to a climbing-related article in my Facebook feed or else visited Rock & Ice’s website while I was logged into Facebook in the same browser session

What happened when you asked them about this? My initial email query was ignored, but after I followed up I received a brief response from the publisher, which read:

The Rock and Ice website is opt in, where you have to agree to terms of use to access the website. I don’t know what private data you are saying Rock and Ice shared, so I can’t speak to that. The site terms are here. As stated in the terms you can opt out.

Following up, I asked about the provision in the Rock & Ice website’s cookie notice which states: “By continuing to use our site, you agree to our cookies” — asking whether it’s passing data without waiting for the user to signal their consent.

(Relevant: In October Europe’s top court issued a ruling that active consent is necessary for tracking cookies, so you can’t drop cookies prior to a user giving consent for you to do so.)

The publisher responded:

You have to opt in and agree to the terms to use the website. You may opt out of cookies, which is covered in the terms. If you do not want the benefits of these advertising cookies, you may be able to opt-out by visiting: http://www.networkadvertising.org/optout_nonppii.asp.

If you don’t want any cookies, you can find extensions such as Ghostery or the browser itself to stop and refuse cookies. By doing so though some websites might not work properly.

I followed up again to point out that I’m not asking about the options to opt in or opt out but, rather, the behavior of the website if the visitor does not provide a consent response yet continues browsing — asking for confirmation Rock & Ice’s site interprets this state as consent and therefore sends data.

The publisher stopped responding at that point.

Earlier I had asked it to confirm whether its website shares visitor data with Rei.com. (As noted above, the two appeared with the same date on the list, which suggests data may be being passed between them.) I did not get a response to that question either.

What did you learn from their inclusion in the Off-Facebook Activity list? That the magazine appears to have a data-sharing arrangement with outdoor retailer Rei.com, given how the pair appeared at the same point in my list. However neither would confirm this when I asked

MatterHackers

What is it? A California-based retailer focused on 3D printing and digital manufacturing

Why did it appear in your Off-Facebook Activity list? I honestly have no idea. I have never to my knowledge visited their site prior to investigating why they should appear on my Off-Facebook Activity list.

I remain pretty interested to know how/why they managed to track me. I can only surmise I clicked on some technology-related content in my Facebook feed, either intentionally or by accident.

What happened when you asked them about this? They first asked me for confirmation that they were on my list. After I had sent a screenshot, they followed up to say they would investigate. I pushed again after hearing nothing for several weeks. At this point they asked for additional information from the Off-Facebook Activity tool — namely more granular metrics, such as a time and date per event and some label information — to help with tracking down this particular data-exchange.

I had previously provided them with the date (as it appears in the screenshot) but it’s possible to download an additional level of information about data transfers which includes per event time/date-stamps and labels/tags, such as “VIEW_CONTENT”.

However, as noted above, I had previously selected and deleted one item off of my Off-Facebook Activity list, after which Facebook’s platform had immediately erased all entries and associated metrics. There was no obvious way I could recover access to that information.

“Without this information I would speculate that you viewed an article or product on our site — we publish a lot of ‘How To’ content related to 3D printing and other digital manufacturing technologies — this information could have then been captured by Facebook via Adroll for ad retargeting purposes,” a MatterHackers spokesman told me. “Operationally, we have no other data sharing mechanism with Facebook.”

Subsequently, the company confirmed it implements Facebook’s tracking pixel on every page of its website.

Of the pixel Facebook writes that it enables website owners to track “conversions” (i.e. website actions); create custom audiences which segment site visitors by criteria that Facebook can identify and match across its user-base, allowing for the site owner to target ads via Facebook’s platform at non-customers with a similar profile/criteria to existing customers that are browsing its site; and for creating dynamic ads where a template ad gets populated with product content based on tracking data for that particular visitor.

Regarding the legal base for the data sharing, MatterHackers had this to say: “MatterHackers is not an EU entity, nor do we conduct business in the EU and so have not undertaken GDPR compliance measures. CCPA [California’s Consumer Privacy Act] will likely apply to our business as of 2021 and we have begun the process of ensuring that our website will be in compliance with those regulations as of January 1st.”

I pointed out that GDPR is extraterritorial in scope — and can apply to non-EU based entities, such as if they’re monitoring individuals in the EU (as in this case).

Also likely relevant: A ruling last year by Europe’s top court found sites that embed third party plug-ins such as Facebook’s like button are jointly responsible for the initial data processing — and must either obtain informed consent from site visitors prior to data being transferred to Facebook, or be able to demonstrate a legitimate interest legal basis for processing this data.

Nonetheless it’s still not clear what legal base the company is relying on for implementing the tracking pixel and passing data on EU Facebook users.

When asked about this MatterHackers COO, Kevin Pope, told me:

While we appreciate the sentiment of GDPR, in this case the EU lacks the legal standing to pursue an enforcement action. I’m sure you can appreciate the potential negative consequences if any arbitrary country (or jurisdiction) were able to enforce legal penalties against any website simply for having visitors from that country. Techcrunch would have been fined to oblivion many times over by China or even Thailand (for covering the King in a negative light). In this way, the attempted overreach of the GDPR’s language sets a dangerous precedent.

To provide a little more detail – MatterHackers, at the time of your visit, wouldn’t have known that you were from the EU until we cross-referenced your session with Facebook, who does know. At that point you would have been filtered from any advertising by us. MatterHackers makes money when our (U.S.) customers buy 3D printers or materials and then succeed at using them (hence the how-to articles), we don’t make any money selling advertising or data.

Given that Facebook does legally exist in the EU and does have direct revenues from EU advertisers, it’s entirely appropriate that Facebook should comply with EU regulations. As a global solution, I believe more privacy settings options should be available to its users. However, given Facebook’s business model, I wouldn’t expect anything other than continued deflection (note the careful wording on their tool) and avoidance from them on this issue.

What did you learn from their inclusion in the Off-Facebook Activity list? I found out that an ecommerce company I had never heard of had been tracking me

Wallapop

What is it? A Barcelona-based peer-to-peer marketplace app that lets people list secondhand stuff for sale and/or to search for things to buy in their proximity. Users can meet in person to carry out a transaction paying in cash or there can be an option to pay via the platform and have an item posted

Why did it appear in your Off-Facebook Activity list? This was the only digital activity that appeared in the list that was something I could explain — figuring out I must have used a Facebook sign-in option when using the Wallapop app to buy/sell. I wouldn’t normally use Facebook sign-in but for trust-based marketplaces there may be user benefits to leveraging network effects.

What happened when you asked them about this? After my query was booted around a bit a PR company that works with Wallapop responded asking to talk through what information I was trying to ascertain.

After we chatted they sent this response — attributed to sources from Wallapop:

Same as it happens with other apps, wallapop can appear on our users’ Facebook Off Site Activity page if they have interacted in any way with the platform while they were logged in their Facebook accounts. Some interaction examples include logging in via Facebook, visiting our website or having both apps opened and logged.

As other apps do, wallapop only shares activity events with Facebook to optimize users’ ad experience. This includes if a user is registered in wallapop, if they have uploaded an item or if they have started a conversation. Under no circumstance wallapop shares with Facebook our users’ personal data (including sex, name, email address or telephone number).

At wallapop, we are thoroughly committed with the security of our community and we do a safe treatment of the data they choose to share with us, in compliance with EU’s General Data Protection Regulation. Under no circumstance these data are shared with third parties without explicit authorization.

I followed up to ask for further details about these “activity events” — asking whether, for instance, Wallapop shares messaging content with Facebook as well as letting the social network know which items a user is chatting about.

“Under no circumstance the content of our users’ messages is shared with Facebook,” the spokesperson told me. “What is shared is limited to the fact that a conversation has been initiated with another user in relation to a specific item, this is, activity events. Under no circumstance we would share our users’ personal information either.”

Of course the point is Facebook is able to link all app activity with the user ID it already has — so every piece of activity data being shared is personal data.

I also asked what legal base Wallapop relies on to share activity data with Facebook. They said the legal basis is “explicit consent given by users” at the point of signing up to use the app.

“Wallapop collects explicit consent from our users and at any time they can exercise their rights to their data, which include the modification of consent given in the first place,” they said.

“Users give their explicit consent by clicking in the corresponding box when they register in the app, where they also get the chance to opt out and not do it. If later on they want to change the consent they gave in first instance, they also have that option through the app. All the information is clearly available on our Privacy Policy, which is GDPR compliant.”

“At wallapop we take our community’s privacy and security very seriously and we follow recommendations from the Spanish Data Protection Agency,” it added.

What did you learn from their inclusion in the Off-Facebook Activity list? Not much more than I would have already guessed — i.e. that using a Facebook sign-in option in a third party app grants the social media giant a high degree of visibility into your activity within another service.

In this case the Wallapop app registered the most activity events of all six of the listed apps, displaying 13 vs only one apiece for the others — so it gave a bit of a suggestive glimpse into the volume of third party app data that can be passed if you opt to open a Facebook login wormhole into a separate service.
