Facebook is continuing to open up access to a data porting tool it launched in Ireland in December. The tool lets users of its network transfer photos and videos they have stored on its servers directly to another photo storage service, such as Google Photos, via encrypted transfer.

A Facebook spokesman confirmed to TechCrunch that access to the transfer tool is being rolled out today to the UK, the rest of the European Union and additional countries in Latin America and Africa.

Late last month Facebook also opened up access to multiple markets in APAC and LatAm, per the spokesman. The tech giant has previously said the tool will be available worldwide in the first half of 2020.

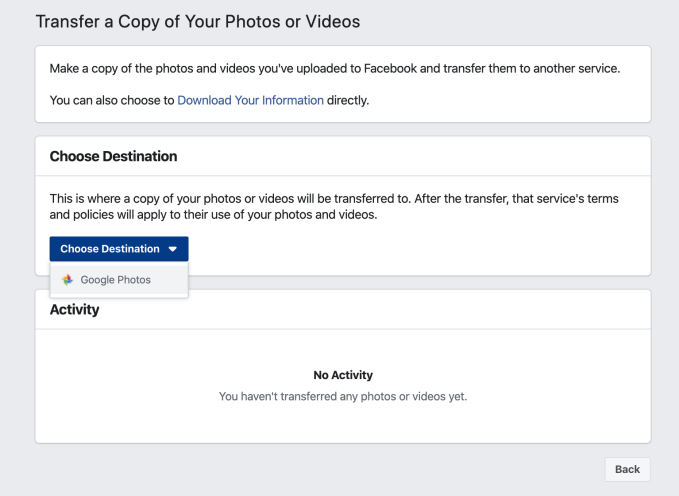

The setting to “transfer a copy of your photos and videos” is accessed via the Your Facebook Information settings menu.

The tool is based on code developed via Facebook’s participation in the Data Transfer Project (DTP), a collaborative effort that started in 2018 and is backed by the likes of Apple, Facebook, Google, Microsoft and Twitter. Its backers have committed to build a common framework, using open source code, for connecting any two online service providers in order to support “seamless, direct, user initiated portability of data between the two platforms”.

In recent years the dominance of tech giants has led to an increase in competition complaints — garnering the attention of policymakers and regulators.

In the EU, for instance, competition regulators are now eyeing the data practices of tech giants including Amazon, Facebook and Google. In the US, meanwhile, Google, Facebook, Amazon, Apple and Microsoft are also facing antitrust scrutiny. As more questions are asked about antitrust, big tech has come under pressure to respond, hence the collective push on portability.

Last September Facebook also released a white paper laying out its thinking on data portability, which seeks to frame portability as a challenge to privacy. It looks like an attempt to lobby for a regulatory moat that would limit portability of the mountain of personal data it has amassed on users.

At the same time, releasing a portability tool gives Facebook something to point regulators to when they come calling, even though the tool only allows users to port a very small portion of the personal data the service holds on them. Such tools are also likely to be sought out only by a minority of more tech-savvy users.

Facebook’s transfer tool also currently supports direct transfer only to Google’s cloud storage, greasing a pipe for users to pass a copy of their facial biometrics from one tech giant to another.

We checked, and from our location in the EU, Google Photos is so far the only direct destination offered in Facebook’s drop-down menu.

However, the spokesman implied wider utility could be coming, saying the DTP project has updated adapters for photo APIs from SmugMug (which owns Flickr) and added new integrations for music streaming service Deezer, decentralized social network Mastodon and Tim Berners-Lee’s decentralization project Solid.

It’s not clear, though, why Facebook doesn’t yet offer an option to port directly to any of these other services. Presumably the third parties still need to do additional development work to implement direct data transfer. (We’ve asked Facebook for more on this and will update if we get a response.)

The aim of the DTP is to develop a standardized framework that makes it easier for others to join without having to “recreate the wheel every time they want to build portability tools”, as the spokesman put it. He added: “We built this tool with the support of current DTP partners, and hope that even more companies and partners will join us in the future.”

He also emphasized that the code is open source and claimed it’s “fairly straightforward” for a company to plug its service into the framework, especially if it already has a public API.

“They just need to write a DTP adapter against that public API,” he suggested.

“Now that the tool has launched, we look forward to working with even more experts and companies – especially startups and new platforms looking to provide an on-ramp for this type of service,” the spokesman added.