In a public health emergency that relies on people keeping an anti-social distance from each other to avoid spreading a highly contagious virus for which humans have no pre-existing immunity, governments around the world have been quick to look to technology companies for help.

Background tracking is, after all, what many Internet giants’ ad-targeting business models rely on, while in the US telcos were recently exposed sharing highly granular location data for commercial ends.

Some of these privacy-hostile practices face ongoing challenges under existing data protection laws in Europe — and/or have at least attracted regulator attention in the US, which lacks a comprehensive digital privacy framework — but a pandemic is clearly an exceptional circumstance. So we’re seeing governments turn to the tech sector for help.

US president Donald Trump was reported last week to have summoned a number of tech companies to the White House to discuss how mobile location data could be used for tracking citizens.

In another development this month he announced Google was working on a nationwide coronavirus screening site — in fact it’s Verily, a different division of Alphabet. But concerns were quickly raised that the site requires users to sign in with a Google account, suggesting users’ health-related queries could be linked to other online activity the tech giant monetizes via ads. (Verily has said the data is stored separately and not linked to other Google products, although the privacy policy does allow data to be shared with third parties including Salesforce for customer service purposes.)

In the UK the government has also been reported to be in discussions with telcos about mapping mobile users’ movements during the crisis — though not at an individual level. It was reported to have held an early meeting with tech companies to ask what resources they could contribute to the fight against COVID-19.

Elsewhere in Europe, Italy — which remains the European nation worst hit by the virus — has reportedly sought anonymized data from Facebook and local telcos that aggregates users’ movement to help with contact tracing or other forms of monitoring.

While there are clear public health imperatives to ensure populations are following instructions to reduce social contact, the prospect of Western democracies making like China and actively monitoring citizens’ movements raises uneasy questions about the long term impact of such measures on civil liberties.

Plus, if governments seek to expand state surveillance powers by directly leaning on the private sector to keep tabs on citizens it risks cementing a commercial exploitation of privacy — at a time when there’s been substantial push-back over the background profiling of web users for behavioral ads.

“Unprecedented levels of surveillance, data exploitation, and misinformation are being tested across the world,” warns civil rights campaign group Privacy International, which is tracking what it dubs the “extraordinary measures” being taken during the pandemic.

A couple of examples include telcos in Israel sharing location data with state agencies for COVID-19 contact tracing and the UK government tabling emergency legislation that relaxes the rules around intercept warrants.

“Many of those measures are based on extraordinary powers, only to be used temporarily in emergencies. Others use exemptions in data protection laws to share data. Some may be effective and based on advice from epidemiologists, others will not be. But all of them must be temporary, necessary, and proportionate,” it adds. “It is essential to keep track of them. When the pandemic is over, such extraordinary measures must be put to an end and held to account.”

At the same time employers may feel under pressure to be monitoring their own staff to try to reduce COVID-19 risks right now — which raises questions about how they can contribute to a vital public health cause without overstepping any legal bounds.

We talked to two lawyers from Linklaters to get their perspective on the rules that wrap extraordinary steps such as tracking citizens’ movements and their health data, and how European and US data regulators are responding so far to the coronavirus crisis.

Bear in mind it’s a fast-moving situation — with some governments (including the UK and Israel) legislating to extend state surveillance powers during the pandemic.

The interviews below have been lightly edited for length and clarity.

Europe and the UK

Dr Daniel Pauly, technology, media & telecommunications partner at Linklaters in Frankfurt

Data protection law has not been suspended. At least when it comes to Europe. So data protection law still applies — without any restrictions. This is the baseline on which we need to work and from which we need to start. Then we need to differentiate between what the government can do and what employers can do in particular.

It’s very important to understand that when we look at governments they do have the means to allow themselves a certain use of data. Because there are opening clauses, flexibility clauses, in particular in the GDPR, when it comes to public health concerns, cross-border threats.

By using the legislation process they may introduce further powers. To give you one example what the German government did to respond is they created a special law — the coronavirus notification regulation — we already have in place a law governing the use of personal data in respect of certain serious infections. And what they did is they simply added the coronavirus infection to that list, which now means that hospitals and doctors must notify the competent authority of any COVID-19 infection.

This is pretty far reaching. They need to transmit names, contact details, sex, date of birth and many other details to allow the competent authority to gather that data and to analyze that data.

Another important topic in that field is the use of telecommunications data — in particular mobile phone data. Efficient use of that data might be one of the reasons why they obviously were quite successful in China with reducing the threat from the virus.

In Europe the government may not simply use mobile phone data and movement data — they have to anonymize it first and this is what, in Germany and other European jurisdictions, happened — including the UK — that anonymized mobile phone data has been handed over to organizations who start analyzing that data to get a better view of how the people behave, how the people move and what they need to do in order to restrict further movement. Or to restrict public life. This is the view on the government at least in Europe and the UK.

Transparency obligations [related to government use of personal data] are stemming from the GDPR [General Data Protection Regulation]. When they would like to make use of mobile phone data this is the ePrivacy directive. This is not as transparent as the GDPR is and they did not succeed in replacing that piece of legislation by new regulation. So the ePrivacy directive gives again the various Member States, including the UK, the possibility to introduce further and more restrictive laws [for public health reasons].

[If Internet companies such as Google were to be asked by European governments to pass data on users for a coronavirus tracking purpose] it has to be taken into consideration that they have not included this in their records of processing activities — in their data protection notifications and information.

So, at least from a pure legal perspective, it would be a huge step — and I’m wondering whether it would be feasible without the governments introducing special laws for that.

If [EU] governments would make use of private companies to provide them with data which has not been collected for such purposes — so that would be a huge step from the perspective of the GDPR at least. I’m not aware of something like this. I’ve certainly read there are discussions ongoing with Netflix to reduce the net traffic but I haven’t heard anything about making use of the data Google has.

I wouldn’t expect it in Europe — and particularly in Germany. Tracking people, tracking and monitoring what they are doing this is almost last resort — so I wouldn’t expect that in the next couple of weeks. And I hope then it’s over.

[So far], from my perspective, the European regulators have responded [to the coronavirus crisis] in a pretty reasonable manner by saying that, in particular, any response to the virus must be proportionate.

We still have that law in place and we need to consider that the data we’re talking about is health data — it’s the most protected data of all. Having said that there are some ways at least the GDPR is allowing the government and allowing employers to make use of that data. In particular when it comes to processing for substantial public interest. Or if it’s required for the purposes of preventive medicine or necessary for reasons of public interest.

So the legislator was wise enough to include clauses allowing the use of such data under certain circumstances and there are a number of supervisory authorities who already made public guidelines how to make use of these statutory permissions. And what they basically said was it always needs to be figured out on a case by case basis whether the data is really required in the specific case.

To give you an example, it was made clear that an employer may not ask an employee where he has been during his vacation — but he may ask have you been in any of the risk areas? And then the sufficient answer is yes or no. They do not need any further data. So it’s always [about approaching this in] a smart way — by being smart you get the information you need; it’s not the flood gate suddenly opened.

You really need to look at the specific case and see how to get the data you need. Usually it’s a yes or no which is sufficient in the particular case.

The US

Caitlin Potratz Metcalf, senior U.S. associate at Linklaters and a Certified Information Privacy Professional (CIPP/US)

Even though you don’t have a structured privacy framework in the US — or one specific regulator that covers privacy — you’ve got some of the same issues. The FCC [Federal Communications Commission] will go after companies that take any action that is inconsistent with their privacy policies. And that would be misleading to consumers. Their initial focus is on consumer protection, not privacy, but in the last couple of years they’ve been wearing two hats. So there is a focus on privacy even though we don’t have a national privacy law [equivalent in scope to the EU’s GDPR] but it’s coming from a consumer protection point of view.

So, for example, the FCC back in February actually announced potential sanctions against four major telecoms companies in the US with respect to sharing data related to cell phone tracking — it wasn’t the geolocation in an app but actually pinging off cell towers — and sharing that data to third parties without proper safeguards. Because that wasn’t disclosed in their privacy policies.

They haven’t actually issued those fines but it was announced that they may pursue a $208M fine total against these four companies: AT&T, Verizon*, T-Mobile, Sprint… So they do take it very seriously about how that data is safeguarded, how it’s being shared. And the fact that we have a state of emergency doesn’t change that emphasis on consumer protection.

You’ll see the same is true for the Department of Health and Human Services (HHS) — that’s responsible for any medical or health data.

That is really limited towards entities that are covered entities under HIPAA [Health Insurance Portability and Accountability Act] or their business associates. So it doesn’t apply to everybody across the board. But if you are a hospital health plan provider, whether you’re an employer and you have a group health plan, an insurer, or a business associate supporting one of those covered entities then you have to comply with HIPAA to the extent you’re handling protected health information. And that’s a bit narrower than the definition of personal data that you’d have under GDPR.

So you’re really looking at identifying information for that patient: Their medical status, their birth date, address, things like that that might be very identifiable and related to the person. But you could share things that are more general. For example you have a middle aged man from this county who’s tested positive for COVID and is at XYZ facility being treated and his condition is stable. Or his condition is critical. So you could share that kind of level of detail — but not further.

And so HHS in February had issued a bulletin stressing that you can’t set aside the privacy and security safeguards under HIPAA during an emergency. They stressed to all covered entities that you have to still comply with the law — sanctions are still in place. And to the extent that you do have to disclose some of the protected health information it has to be to the minimum extent necessary. And that can be disclosed either to other hospitals, to a regulator in order to help stem the spread of COVID and also in order to provide treatment to a patient. So they listed a couple of different exceptions how you can share that information but really stressing the minimum necessary.

The same would be true for an employer — like of a group health plan — if they’re trying to share information about employees but it’s going to be very narrow in what they can actually share. And they can’t just cite as an exception that it’s for the public health interest. You don’t necessarily have to disclose what country they’ve been to it’s just have they been to a region that’s on a restricted list for travel. So it’s finding creative ways to relay the necessary information you need and if there’s anything less intrusive you’re required to go that route.

That said, just last week HHS also issued another bulletin saying that they would waive HIPAA sanctions and penalties during the nationwide public health emergency. But it was only directed to hospitals — so it doesn’t apply to all covered entities.

They also issued another bulletin saying that they would relax restrictions on basically sharing data using electronic means. So there’s very heightened restrictions on how you can share data electronically when it relates to medical and health information. And so this was allowing doctors to communicate by FaceTime or video chat and other methods that may not be encrypted or secure. Or communicate with patients etc. So they’re giving a waiver or just softening some of the restrictions related to transferring health data electronically.

So you can see it’s an evolving situation but they’ve still taken a very reserved and kind of conservative approach — really emphasizing that you do need to comply with your obligation to protect health data. So that’s where you see the strongest implementations. And then the FCC coming at it from a consumer protection point of view.

Going back to the point you made earlier about Google sharing data [with governments] — you could get there, it just depends on how their privacy policies are structured.

In terms of tracking individuals we don’t have a national statute like GDPR that would prevent that but it would also be very difficult to anonymize that data because it’s so tied to individuals — it’s like your DNA; you can map a person leaving home, going to work or school, going to a doctor’s office, coming back home — and it really does have very sensitive information and because of all the specific data points it means it’s very difficult to anonymize it and provide it in a format that wouldn’t violate someone’s privacy without their consent. And so while you may not need full consent in the US you would still need to have notice and transparency about the policies.

Then it would be slightly different if you’re a California resident — the degree that you need under the new California law [CCPA] to provide disclosures and give individuals the opportunity to opt out if you were to share their information. So in that case, where the telecoms companies are potentially going to be sued by the FCC for sharing data with third parties, that in particular would also violate the new California law if consumers weren’t given the opportunity to opt out of having their information sold.

So there’s a lot of different puzzle pieces that fit together since we have a patchwork quilt of data protection — depending on the different state and federal laws.

The government, I guess, could issue other mandates or regulations [to requisition telco tracking data for a COVID-related public health purpose] — I don’t know that they will. I would envisage more of a call to arms requesting support and assistance from the private sector. Not a mandate that you must share your data, given the way our government is structured. Unless things get incredibly dire I don’t really see a mandate to companies that they have to share certain data in order to be able to track patients.

[If Google makes use of health-related searches/queries to enrich user profiles it uses for commercial purposes] that in and of itself wouldn’t be protected health information.

Google is not a [HIPAA] covered entity. And depending on what type of support it’s providing for covered entities it may in limited circumstances be considered a business associate that could be subject to HIPAA but in the context of just collecting data on consumers it wouldn’t be governed by that.

So as long as it’s not doing anything outside the scope of what’s already in its privacy policies then it’s fine — so the fact that it’s collecting data based on searches that you run on Google that should be in the privacy policy anyway. It doesn’t need to be specific to the type of search that you’re running. So the fact that it’s looking up how to get COVID testing or treatment or what are the symptoms for COVID, things like that, that can all be tied to the data [it holds on users] and enriched. And that can also be shared and sold to third parties — unless you’re a California resident. They have a separate privacy policy for California residents… They just have to be consistent with their privacy policy.

The interesting thing to me is maybe the approach that Asia has taken, where they have a lot more influence over the commercial sector and data tracking, and so you actually have the regulator stepping in and doing more tracking, not just private companies. But private companies are able to provide tracking information.

You see it actually with Uber. They’ve issued additional privacy notices to consumers — saying that to the extent we become aware of a passenger that has had COVID or a driver, we will notify people who have come into contact with that Uber over a given time period. They’re trying to take the initiative to do their own tracking to protect workers and consumers.

And they can do that — they just have to be careful about how much detail they share about personal information. Not naming names of who was impacted [but rather saying something like] ‘in the last 24 hours you may have ridden in an Uber that was impacted or known to have an infected individual in the Uber’.

[When it comes to telehealth platforms and privacy protections] it depends if they’re considered a business associate of a covered entity. So they may not be a covered entity themselves but if they are a business associate supporting a covered entity — for example a hospital or a clinic or insurers sharing that data and relying on a telehealth platform. In that context they would be governed by some of the same privacy and security regulations under HIPAA.

Some of them are slightly different for a business associate compared to a covered entity but generally you step in the shoes of the covered entity if you’re handling the covered entity’s data and have the same restrictions apply to you.

Aggregate data wouldn’t be considered protected health information — so they could [for example] share a symptom heat map that doesn’t identify specific individuals or patients and their health data.

[But] standalone telehealth apps that are collecting data directly from the consumer are not covered by HIPAA.

That’s actually a big loophole in terms of consumer protection, privacy protections related to health data. You have the same issue for all the health fitness apps — whether it’s your fitbit or other health apps or if you’re pregnant and you have an app that tracks your maternity or your period or things like that. Any of that data that’s collected is not protected.

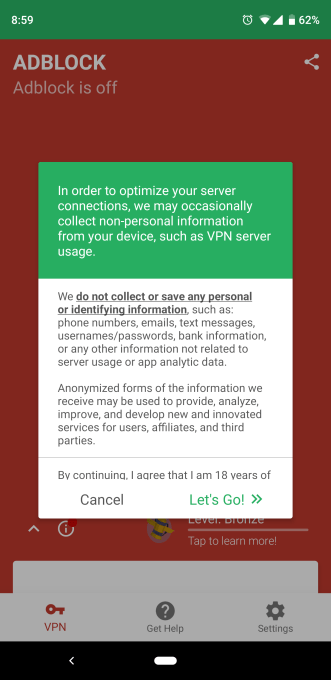

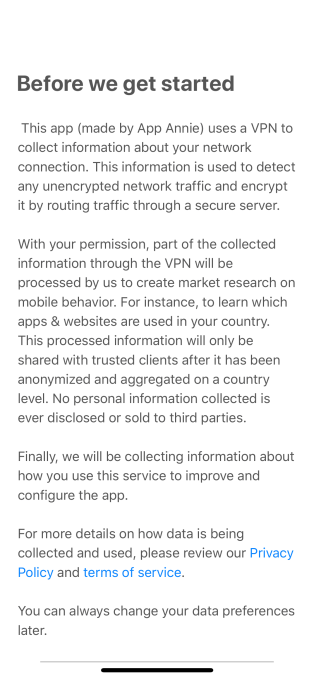

The only protections you have are whatever disclosures are in the privacy policies. And in them having to be transparent and act within that privacy policy. If they don’t they can face an enforcement action by the FCC but that is not regulated by the Department of Health and Human Services under HIPAA.

So it’s a very different approach than under GDPR which is much more comprehensive.

That’s not to say in the future we might see a tightening of restrictions on that but individuals are freely giving that information — and in theory should read the privacy policy that’s provided when you log into the app. But most users probably don’t read that and then that data can be shared with other third parties.

They could share it with a regulator, they could sell it to other third parties so long as they have the proper disclosure that they may sell your personal information or share it with third parties. It depends on how their privacy policy is crafted. So long as it covers those specific actions. And for California residents it’s a more specific test — there are more disclosures that are required.

For example the type of data that you’re collecting, the purpose that you’re collecting it for, how you intend to process that data, who you intend to share it with and why. So it’s tightened for California residents but for the rest of the US you just have to be consistent with your privacy policy and you aren’t required to have the same level of disclosures.

More sophisticated, larger companies, though, definitely are already complying with GDPR — or endeavouring to comply with the California law — and so they have more sophisticated, detailed privacy notices than are maybe required by law in the US. But they’re kind of operating on a global platform and trying to have a global privacy policy.

*Disclosure: Verizon is TechCrunch’s parent company
