k-ID's platform makes it easy for game devs to comply with child safety and data privacy regulations.

© 2024 TechCrunch. All rights reserved. For personal use only.

Product Management Confabulation

What Product Managers are talking about.


Microsoft will pay $20 million to settle charges brought by the Federal Trade Commission accusing the tech giant of illegally collecting the personal information of children without their parents’ consent — and in some cases retaining it “for years.”

The federal consumer watchdog said Microsoft violated the Children’s Online Privacy Protection Act (COPPA), the federal law that governs the online privacy protections for children under the age of 13, which requires companies notify parents about the data they collect, obtain parental consent and delete the data when it’s no longer necessary.

The FTC said children signing up to Microsoft’s Xbox gaming service were asked to provide their personal information — including their name, email address, phone number and date of birth — which until 2019 included a pre-filled check box allowing Microsoft to share user information with advertisers. The FTC said Microsoft collected this data before asking for the parent to complete the account setup, but held onto children’s data even if the parent abandoned the sign-up process.

“Only after gathering that raft of personal data from children did Microsoft get parents involved in the process,” said FTC’s Lesley Fair in a corresponding blog post.

As a result, the FTC will require Microsoft to notify parents and obtain consent for accounts created before May 2021. Microsoft will also have to establish new systems to delete children’s personal information if it hasn’t obtained parental consent, and to ensure the data is deleted when it’s no longer needed.
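As a rough illustration of the kind of deletion system the order describes, the sketch below flags child sign-ups that never received parental consent. Everything in it is hypothetical: the `PendingChildSignup` record, the `signups_to_purge` helper and the grace period are assumptions made for illustration, not Microsoft's implementation or a threshold set by the FTC.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class PendingChildSignup:
    """Hypothetical record of a child account awaiting parental consent."""
    account_id: str
    created_at: datetime
    parental_consent_at: Optional[datetime] = None  # None until a parent consents


def signups_to_purge(
    signups: List[PendingChildSignup],
    now: datetime,
    grace_period: timedelta,
) -> List[PendingChildSignup]:
    """Return sign-ups with no parental consent that are older than grace_period.

    The grace period is an assumed parameter; the article does not give one.
    """
    return [
        s
        for s in signups
        if s.parental_consent_at is None and now - s.created_at > grace_period
    ]


if __name__ == "__main__":
    now = datetime(2023, 6, 5)
    signups = [
        PendingChildSignup("a", datetime(2023, 6, 1)),                        # recent, still pending
        PendingChildSignup("b", datetime(2023, 1, 1)),                        # abandoned, no consent
        PendingChildSignup("c", datetime(2023, 1, 1), datetime(2023, 1, 2)),  # parent consented
    ]
    stale = signups_to_purge(signups, now, timedelta(days=14))
    print([s.account_id for s in stale])
```

A real system would also have to delete the underlying personal data and any copies of it, which this sketch does not attempt.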

Microsoft did not respond to a request for comment, but Xbox boss Dave McCarthy said in a blog post that the company “did not meet customer expectations” and is “committed to complying with the order to continue improving upon our safety measures.” McCarthy said the reason that Microsoft retained children’s data for longer was because of a “technical glitch,” and that the data was “never used, shared, or monetized.”

The FTC said this was its third COPPA-related enforcement in recent weeks, including a recent action it took against Amazon for keeping Alexa voice recordings “forever” and failing to honor parents’ deletion requests.

Microsoft to pay $20M settlement for illegally collecting children’s personal data by Zack Whittaker originally published on TechCrunch

Amazon will pay the FTC a $25 million penalty as well as “overhaul its deletion practices and implement stringent privacy safeguards” to avoid charges of violating the Children’s Online Privacy Protection Act to spruce up its AI.

Amazon’s voice interface Alexa has been in use in homes across the globe for years, and any parent who has one knows that kids love to play with it, make it tell jokes, even use it for its intended purpose, whatever that is. In fact it was so obviously useful to kids who can’t write or have disabilities that the FTC relaxed COPPA rules to accommodate reasonable usage: Certain service-specific analysis of kids’ data, like transcription, was allowed as long as it is not retained any longer than reasonably necessary.

It seems that Amazon may have taken a rather expansive view on the “reasonably necessary” timescale, keeping kids’ speech data more or less forever. As the FTC puts it:

Amazon retained children’s recordings indefinitely — unless a parent requested that this information be deleted, according to the complaint. And even when a parent sought to delete that information, the FTC said, Amazon failed to delete transcripts of what kids said from all its databases.

Geolocation data was also not deleted, a problem the company “repeatedly failed to fix.”

This has been going on for years — the FTC alleges that Amazon knew about it as early as 2018 but didn’t take action until September of the next year, after the agency gave them a helpful nudge.

That kind of timing usually indicates that a company would have continued with this practice forever. Apparently, due to “faulty fixes and process fiascos,” some of those practices did continue until 2022!

You may well ask, what is the point of having a bunch of recordings of kids talking to Alexa? Well, if you plan on having your voice interface talk to kids a lot, it sure helps to have a secret database of audio interactions that you can train your machine learning models on. That’s how the FTC said Amazon justified its retention of this data.

FTC Commissioners Bedoya and Slaughter, as well as Chair Khan, wrote a statement accompanying the settlement proposal and complaint to particularly call out this one point:

The Commission alleges that Amazon kept kids’ data indefinitely to further refine its voice recognition algorithm. Amazon is not alone in apparently seeking to amass data to refine its machine learning models; right now, with the advent of large language models, the tech industry as a whole is sprinting to do the same.

Today’s settlement sends a message to all those companies: Machine learning is no excuse to break the law. Claims from businesses that data must be indefinitely retained to improve algorithms do not override legal bans on indefinite retention of data. The data you use to improve your algorithms must be lawfully collected and lawfully retained. Companies would do well to heed this lesson.

So today we have the $25 million fine, which is of course less than negligible for a company Amazon’s size. It’s clearly complying with the other provisions of the proposed order that will likely give them a headache. The FTC says the order would:

This settlement and action is totally independent from the FTC’s other one announced today, with Amazon subsidiary Ring. There is a certain common thread of “failing to implement basic privacy and security protections,” though.

In a statement, Amazon said that “While we disagree with the FTC’s claims and deny violating the law, this settlement puts the matter behind us.” They also promise to “remove child profiles that have been inactive for more than 18 months,” which seems incredibly long to retain that data. I’ve followed up with questions about that duration and whether the data will be used for ML training and will update if I hear back.

Amazon settles with FTC for $25M after ‘flouting’ kids’ privacy and deletion requests by Devin Coldewey originally published on TechCrunch

California lawmakers have passed a bill that seeks to make apps and other online spaces safer for kids in the absence of robust federal standards. The bill, if signed into law, would impose a set of new protections for people under the age of 18 in California, potentially punishing tech companies with thousands in fines for every child affected by any violation.

The bill, the California Age-Appropriate Design Code Act, still needs to be signed by California Governor Gavin Newsom before becoming law. If signed, its provisions would go into effect on July 1, 2024, giving platforms an interval of time to come into compliance.

The new privacy rules would apply to social apps like Instagram, TikTok and YouTube — frequent targets of criticism for their mishandling of young users’ safety and mental health — but also to other businesses that offer “an online service, product, or feature likely to be accessed by children.” That broader definition would also extend the bill’s requirements to gaming and education platforms that kids might use, along with any other websites or services that don’t explicitly limit their use to adults.

The bill defines a child as anyone under the age of 18, pushing apps and other online products that might attract minors to enact more privacy protections for all under-18 users, not just the youngest ones. The federal law that carves out some privacy protections for children online, the Children’s Online Privacy Protection Act (COPPA), only extends its protections to children under age 13.

Among its requirements, the California children’s privacy legislation would prohibit companies from collecting any minor’s user data beyond what is absolutely necessary or leveraging children’s personal information in any way “materially detrimental to the physical health, mental health, or well-being of a child.” It would also require affected companies to default users under 18 to the strongest privacy settings, “including by disabling features that profile children using their previous behavior, browsing history, or assumptions of their similarity to other children, to offer detrimental material.”

The bill would also create a new working group dedicated to implementing its requirements comprised of members appointed by the governor and state agencies. The California Attorney General would be empowered to fine companies that violate its rules $2,500 per child affected for any violations deemed to be “negligent” and $7,500 for intentional violations.
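To give a sense of scale, here is a back-of-the-envelope sketch of how those per-child figures add up. It assumes a single violation and a flat rate per affected child; the function name and that simplification are illustrative, and the statute's actual wording would govern any real calculation.

```python
# Per-child amounts as reported for the California Age-Appropriate Design Code Act.
NEGLIGENT_FINE_PER_CHILD = 2_500
INTENTIONAL_FINE_PER_CHILD = 7_500


def max_penalty(children_affected: int, intentional: bool) -> int:
    """Upper-bound fine in dollars for one violation (a simplifying assumption)."""
    if children_affected < 0:
        raise ValueError("children_affected must be non-negative")
    rate = INTENTIONAL_FINE_PER_CHILD if intentional else NEGLIGENT_FINE_PER_CHILD
    return children_affected * rate


if __name__ == "__main__":
    # 1,000 affected children: $2.5 million if negligent, $7.5 million if intentional.
    print(max_penalty(1_000, intentional=False))
    print(max_penalty(1_000, intentional=True))
```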

“We are very encouraged by today’s bi-partisan passage of AB 2273, a monumental step toward protecting California kids online,” the children’s advocacy organization Common Sense said in a statement Tuesday. “Today’s action, authored by Assembly members Wicks, Cunningham and Petrie-Norris, sends an important signal about the need to make children’s online health and safety a greater priority for lawmakers and for our tech companies, particularly when it comes to websites that are accessed by young users.”

While there’s plenty of detail to be worked out still, the California bill could force the hand of tech companies that have historically prioritized explosive user growth and monetization above all, while dragging their feet when it comes to the less lucrative work of verifying the age of their users and protecting young people from online threats to safety and mental health. Inspired by the U.K. children’s privacy legislation known as the “Age Appropriate Design Code,” the current legislation could similarly force tech companies to improve their privacy standards for minors across the board rather than creating customized experiences for regionally specific user segments that fall under new legal protections.

The U.S. Federal Trade Commission (FTC) today announced a settlement of $150,000 with HyperBeard, the developer of a collection of children’s mobile games over violations of U.S. Children’s Online Privacy Protection Act Rule (COPPA Rule). The company’s applications had been downloaded more than 50 million times on a worldwide basis to date, according to data from app intelligence firm Sensor Tower.

A complaint filed by the Dept. of Justice on behalf of the FTC alleged that HyperBeard had violated COPPA by allowing third-party ad networks to collect personal information in the form of persistent identifiers to track users of the company’s child-directed apps. And it did so without notifying parents or obtaining verifiable parental consent, as is required. These ad networks then used the identifiers to target ads to children using HyperBeard’s games.

The company’s lineup included games like Axolochi, BunnyBuns, Chichens, Clawbert, Clawberta, KleptoCats, KleptoCats 2, KleptoDogs, MonkeyNauts, and NomNoms (not to be confused with toy craze Num Noms).

The FTC determined HyperBeard’s apps were marketed towards children because they used brightly colored, animated characters like cats, dogs, bunnies, chicks, monkeys, and other cartoon characters and were described in child-friendly terms like “super cute” and “silly.” The company also marketed its kids’ apps on a kids’ entertainment website, YayOMG, published children’s books, and licensed other products, including stuffed animals and block construction sets, based on its app characters.

Unbelievably, the company would post disclaimers to its marketing materials that these apps were not meant for children under 13.

Above: A disclaimer on the NomNoms game website.

In HyperBeard’s settlement with the FTC, the company has agreed to pay a $150,000 fine and to delete the personal information it illegally collected from children under the age of 13. The settlement had originally included a $4 million penalty, but the FTC suspended it over HyperBeard’s inability to pay the full amount. But that larger amount will become due if the company or its CEO, Alexander Kozachenko, are ever found to have misrepresented their finances.

HyperBeard is not the first tech company to be charged with COPPA violations. Two high-profile examples preceding it were YouTube and Musical.ly (TikTok)’s settlements of $170 million and $5.7 million, respectively, both in 2019. By comparison, HyperBeard’s fine seems minimal. However, its case is different from either video platform as the company itself was not handling the data collection – it was permitting ad networks to do so.

The complaint explained that HyperBeard let third-party advertising networks serve ads and collect personal information, in the form of persistent identifiers, in order to serve behavioral ads — meaning, targeted ads based on users’ activity over time and across sites.

This requires parental consent, but companies have skirted this rule for years — or outright ignored it, like YouTube did.

The ad networks used in HyperBeard’s apps included AdColony, AdMob, AppLovin, Facebook Audience Network, Fyber, IronSource, Kiip, TapCore, TapJoy, Vungle, and UnityAds. Despite being notified by watchdogs and the FTC of the issue, HyperBeard didn’t alert any of the ad networks that its apps were directed towards kids and make changes.

The issues around the invasiveness of third-party ad networks and trackers — and their questionable data collection practices — have come in the spotlight thanks to in-depth reporting about app privacy issues, various privacy experiments, petitions against their use — and, more recently, as a counter-argument to Apple’s marketing of its iPhone as a privacy-conscious device.

Last year, these complaints finally led Apple to ban the use of third-party networks and trackers in any iOS apps aimed at kids.

HyperBeard’s install base was below 50 million at the time of the settlement, we understand. According to Sensor Tower, around 12 million of HyperBeard’s installs to date have come from its most popular title, Adorable Home, which only launched in January 2020. U.S. consumers so far have accounted for about 18% of the company’s total installs to date, followed by the Chinese App Store at 14%. So far, in 2020, Vietnam has emerged as leading the market with close to 24% of all installs since January, while the U.S. dropped to No. 7 overall, with a 7% share.

The FTC’s action against HyperBeard should serve as a warning to other app developers that simply saying an app is not meant for kids doesn’t exempt them from following COPPA guidelines, when it’s clear the app is targeting kids. In addition, app makers can and will be held liable for the data collection practices of third-party ad networks, even if the app itself isn’t storing kids’ personal data on its own servers.

“If your app or website is directed to kids, you’ve got to make sure parents are in the loop before you collect children’s personal information,” said Andrew Smith, Director of the FTC’s Bureau of Consumer Protection, in a statement about the settlement. “This includes allowing someone else, such as an ad network, to collect persistent identifiers, like advertising IDs or cookies, in order to serve behavioral advertising,” he said.

YouTube now officially limits the amount of data it and creators can collect on content intended for children, following promises made in November and a costly $170 million FTC fine in September. Considering how lucrative kids’ content is for the company, this could have serious financial ramifications for both it and its biggest creators.

The main change is, as announced in November, that for all content detected or marked as being for kids, viewers will be considered children no matter what. Even if you’re a verified, paying YouTube Premium customer (we know you’re out there) your data will be sanitized as if YouTube thinks you’re a 10-year-old kid.

There are plenty of reasons for this, most of them to do with avoiding liability. It’s just the safer path for the company to make the assumption that anyone viewing kids’ content is a kid — but it comes with unfortunate consequences.

Reduced data collection means no targeted ads. And targeted ads are much more valuable than ordinary ones. So this is effectively a huge revenue hit to anyone making children’s content — for instance YouTube’s current top-earning creator, Ryan Kaji (a kid himself).

It also limits the insights creators can have on their viewers, crucial information for anyone hoping to understand their demographics and improve their metrics. Engagement drivers like comments and notifications are also disabled, to channels’ detriment.

Google for its part says that it is “committed to helping creators navigate this new landscape and to supporting our ecosystem of family content.” How exactly it plans to do that isn’t clear; many have already complained that the system is confusing and that this could be a death sentence for kids’ channels on YouTube.

Now that the policy is official we’ll probably soon hear exactly how it is impacting creators and what if anything Google actually does to mitigate that.

YouTube is asking the U.S. Federal Trade Commission for further clarification and better guidance to help video creators understand how to comply with the FTC’s guidelines set forth as part of YouTube’s settlement with the regulator over its violations of children’s privacy laws. The FTC in September imposed a historic fine of $170 million for YouTube’s violations of COPPA (the U.S. Children’s Online Privacy Protection Act). It additionally required YouTube creators to now properly identify any child-directed content on the platform.

To comply with the ruling, YouTube created a system where creators could either label their entire channel as child-directed, or they could identify only certain videos as being directed at children, as needed. Videos that are considered child-directed content would then be prohibited from collecting personal data from viewers. This limited creators’ ability to leverage Google’s highly profitable behavioral advertising technology on videos kids were likely to watch.

As a result, YouTube creators have been in an uproar since the ruling, arguing that it’s too difficult to tell the difference between what’s child-directed content and what’s not. Several popular categories of YouTube videos — like gaming, toy reviews, and family vlogging, for instance — fall under gray areas, where they’re watched by children and adults alike. But because the FTC’s ruling left creators held liable for any future violations, YouTube could only advise creators to consult a lawyer to help them work through the ruling’s impact on their own channels.

Today, YouTube says it’s asking the FTC to provide more clarity.

“Currently, the FTC’s guidance requires platforms must treat anyone watching primarily child-directed content as children under 13. This does not match what we see on YouTube, where adults watch favorite cartoons from their childhood or teachers look for content to share with their students,” noted YouTube in an announcement. “Creators of such videos have also conveyed the value of product features that wouldn’t be supported on their content. For example, creators have expressed the value of using comments to get helpful feedback from older viewers. This is why we support allowing platforms to treat adults as adults if there are measures in place to help confirm that the user is an adult viewing kids’ content,” the company said.

Specifically, YouTube wants the FTC to clarify what’s to be done when adults are watching kids’ content. It also wants to know what’s to be done about content that doesn’t intentionally target kids — like videos in the gaming, DIY and art space, for example — if those videos end up attracting a young audience. Are these also to be labeled “made for kids,” even though that’s not their intention?, YouTube asks.

The FTC had shared some guidance in November, which YouTube passed along to creators. But YouTube says it’s not enough as it doesn’t help creators to understand what’s to be done about this “mixed audience” content.

YouTube says it supports platforms treating adults who view primarily child-directed video content as adults, as long as there are measures in place to help confirm the user is an adult. It didn’t suggest what those measures would be, though possibly this could involve users logged in to an adult-owned Google account or perhaps an age-gate measure of some sort.

YouTube submitted its statements as a part of the FTC’s comment period on the agency’s review of the COPPA Rule, which has been extended until December 11, 2019. The FTC is giving commenters additional time to submit comments and an alternative mechanism to file them as the federal government’s Regulations.gov portal is temporarily inaccessible. Instead, commenters can submit their thoughts via email to the address secretary@ftc.gov, with the subject line “COPPA comment.” These must be submitted before 11:59 PM ET on Dec. 11, the FTC says.

YouTube’s announcement, however, pointed commenters to the FTC’s website, which isn’t working right now.

“We strongly support COPPA’s goal of providing robust protections for kids and their privacy. We also believe COPPA would benefit from updates and clarifications that better reflect how kids and families use technology today, while still allowing access to a wide range of content that helps them learn, grow and explore. We continue to engage on this issue with the FTC and other lawmakers (we previously participated in the FTC’s public workshop) and are committed to continue [sic] doing so,” said YouTube.

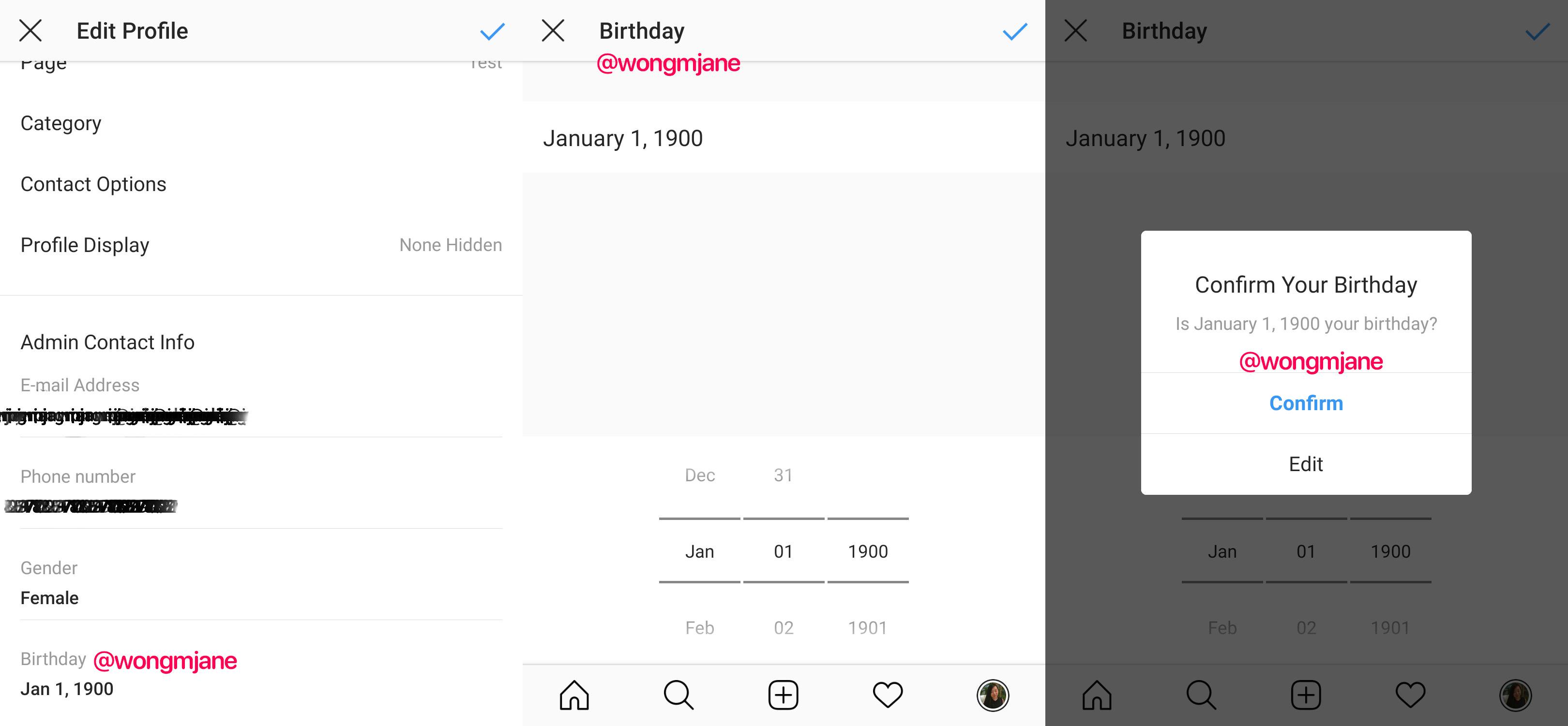

Instagram dodges child safety laws. By not asking users their age upon signup, it can feign ignorance about how old they are. That way, it can’t be held liable for $40,000 per violation of the Children’s Online Privacy Protection Act. The law bans online services from collecting personally identifiable information about kids under 13 without parental consent. Yet Instagram is surely stockpiling that sensitive info about underage users, shrouded by the excuse that it doesn’t know who’s who.

But here, ignorance isn’t bliss. It’s dangerous. User growth at all costs is no longer acceptable.

It’s time for Instagram to step up and assume responsibility for protecting children, even if that means excluding them. Instagram needs to ask users’ age at sign up, work to verify they volunteer their accurate birthdate by all practical means, and enforce COPPA by removing users it knows are under 13. If it wants to allow tweens on its app, it needs to build a safe, dedicated experience where the app doesn’t suck in COPPA-restricted personal info.

Instagram is woefully behind its peers. Both Snapchat and TikTok require you to enter your age as soon as you start the sign up process. This should really be the minimum regulatory standard, and lawmakers should close the loophole allowing services to skirt compliance by not asking. If users register for an account, they should be required to enter an age of 13 or older.

Instagram’s parent company Facebook has been asking for birthdate during account registration since its earliest days. Sure, it adds one extra step to sign up, and impedes its growth numbers by discouraging kids from getting hooked early on the social network. But it also benefits Facebook’s business by letting it accurately age-target ads.

Most importantly, at least Facebook is making a baseline effort to keep out underage users. Of course, as kids do when they want something, some are going to lie about their age and say they’re old enough. Ideally, Facebook would go further and try to verify the accuracy of a user’s age using other available data, and Instagram should too.

Both Facebook and Instagram currently have moderators lock the accounts of any users they stumble across that they suspect are under 13. Users must upload government-issued proof of age to regain control. That policy only went into effect last year after UK’s Channel 4 reported a Facebook moderator was told to ignore seemingly underage users unless they explicitly declared they were too young or were reported for being under 13. An extreme approach would be to require this for all signups, though that might be expensive, slow, significantly hurt signup rates, and annoy of-age users.

Instagram is currently on the other end of the spectrum. Doing nothing around age-gating seems recklessly negligent. When asked for comment about why it doesn’t ask users’ ages, how it stops underage users from joining, and if it’s in violation of COPPA, Instagram declined to comment. The fact that Instagram claims to not know users’ ages seems to be in direct contradiction to it offering marketers custom ad targeting by age, such as reaching just those who are 13.

Luckily, this could all change soon.

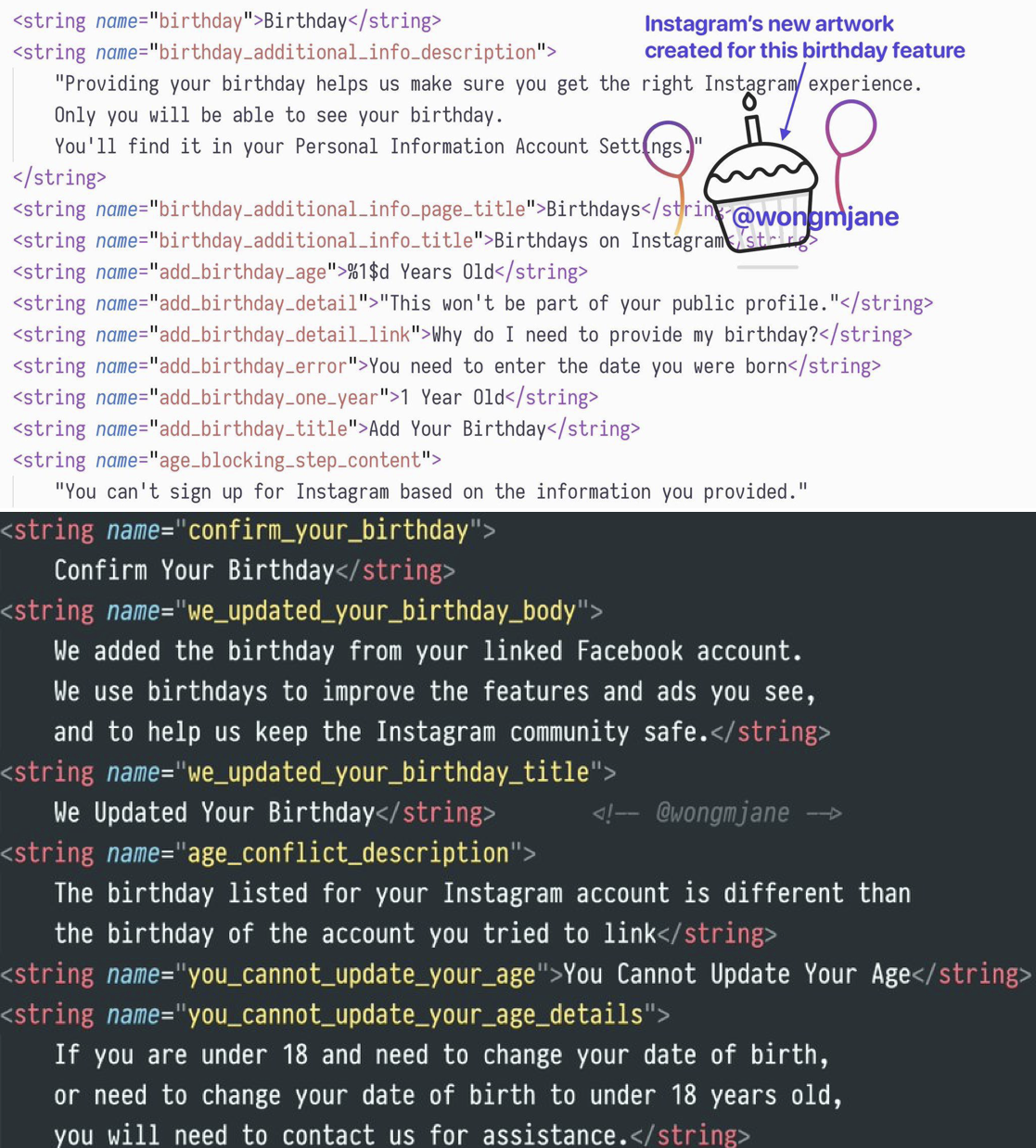

Mobile researcher and frequent TechCrunch tipster Jane Manchun Wong has spotted Instagram code inside its Android app that shows it’s prototyping an age-gating feature that rejects users under 13. It’s also tinkering with requiring your Instagram and Facebook birthdates to match. Instagram gave me a “no comment” when I asked whether these features would officially roll out to everyone.

Code in the app explains that “Providing your birthday helps us make sure you get the right Instagram experience. Only you will be able to see your birthday.” Beyond just deciding who to let in, Instagram could use this info to make sure users under 18 aren’t messaging with adult strangers, that users under 21 aren’t seeing ads for alcohol brands, and that potentially explicit content isn’t shown to minors.
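The age thresholds mentioned above can be sketched as a simple gate. This is only an illustration built on the numbers in this article (13, 18 and 21); the function names and returned flags are hypothetical and do not reflect Instagram's actual code, and a self-reported birthdate is taken at face value.

```python
from datetime import date


def age_on(birthdate: date, today: date) -> int:
    """Whole years elapsed between birthdate and today."""
    years = today.year - birthdate.year
    # Subtract a year if this year's birthday has not happened yet.
    if (today.month, today.day) < (birthdate.month, birthdate.day):
        years -= 1
    return years


def experience_for(birthdate: date, today: date) -> dict:
    """Map a self-reported birthdate to hypothetical feature flags."""
    age = age_on(birthdate, today)
    if age < 13:
        # Under-13 sign-ups are rejected outright in this sketch.
        return {
            "allowed": False,
            "can_message_adult_strangers": False,
            "can_see_alcohol_ads": False,
        }
    return {
        "allowed": True,
        "can_message_adult_strangers": age >= 18,
        "can_see_alcohol_ads": age >= 21,
    }


if __name__ == "__main__":
    today = date(2019, 12, 1)
    print(experience_for(date(2007, 12, 2), today))  # still 11 on this date
    print(experience_for(date(2006, 12, 1), today))  # turns 13 on this date
    print(experience_for(date(1998, 12, 1), today))  # turns 21 on this date
```

A gate like this only works if the birthdate is accurate, which is why the question of verifying age matters as much as asking for it.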

Instagram’s inability to do any of this clashes with its own and Facebook’s big talk this year about their commitment to safety. Instagram has worked to improve its approach to bullying, drug sales, self-harm, and election interference, yet there’s been not a word about age gating.

Meanwhile, underage users promote themselves on pages for hashtags like #12YearOld where it’s easy to find users who declare they’re that age right in their profile bio. It took me about 5 minutes to find creepy “You’re cute” comments from older men on seemingly underage girls’ photos. Clearly Instagram hasn’t been trying very hard to stop them from playing with the app.

I brought up the same unsettling situations on Musical.ly, now known as TikTok, to its CEO Alex Zhu on stage at TechCrunch Disrupt in 2016. I grilled Zhu about letting 10-year-olds flaunt their bodies on his app. He tried to claim parents run all of these kids’ accounts, and got frustrated as we dug deeper into Musical.ly’s failures here.

Thankfully, TikTok was eventually fined $5.7 million this year for violating COPPA and forced to change its ways. As part of its response, TikTok started showing an age gate to both new and existing users, removed all videos of users under 13, and restricted those users to a special TikTok Kids experience where they can’t post videos, comment, or provide any COPPA-restricted personal info.

If even a Chinese social media app that Facebook’s CEO has warned threatens free speech with censorship is doing a better job protecting kids than Instagram, something’s gotta give. Instagram could follow suit, building a special section of its apps just for kids where they’re quarantined from conversing with older users that might prey on them.

Perhaps Facebook and Instagram’s hands-off approach stems from the fact that CEO Mark Zuckerberg doesn’t think the ban on under-13-year-olds should exist. Back in 2011, he said “That will be a fight we take on at some point . . . My philosophy is that for education you need to start at a really, really young age.” He’s put that into practice with Messenger Kids which lets 6 to 12-year-olds chat with their friends if parents approve.

The Facebook family of apps’ ad-driven business model and earnings depend on constant user growth that could be inhibited by stringent age gating. It surely doesn’t want to admit to parents it’s let kids slide into Instagram, that advertisers were paying to reach children too young to buy anything, and to Wall Street that it might not have 2.8 billion legal users across its apps as it claims.

But given Facebook and Instagram’s privacy scandals, addictive qualities, and impact on democracy, it seems like proper age-gating should be a priority as well as the subject of more regulatory scrutiny and public concern. Society has woken up to the harms of social media, yet Instagram erects no guards to keep kids from experiencing those ills for themselves. Until it makes an honest effort to stop kids from joining, the rest of Instagram’s safety initiatives ring hollow.

In YouTube CEO Susan Wojcicki’s quarterly letter, released today, the exec addresses a number of changes to YouTube policies, including the recent FTC-mandated rules for kids’ content that have alternately confused and infuriated video creators, as well as forthcoming policies around harassment and gaming videos, among other things.

On the latter, Wojcicki said the company was now in the process of developing a new harassment policy and was talking to creators about what needed to be addressed. She did not give an ETA for the rollout, but said creators would be kept posted as the changes were finalized.

YouTube also responded to creator concerns over policies around gaming videos that include violence.

“We’ve heard loud and clear that our policies need to differentiate between real-world violence and gaming violence,” Wojcicki said. “We have a policy update coming soon that will do just that. The new policy will have fewer restrictions for violence in gaming, but maintain our high bar to protect audiences from real-world violence.”

This topic was recently discussed at YouTube’s Gaming Creator Summit, as well.

The company also said it’s now working to match edgier content with advertisers who may be interested in it, like a marketer who wants to promote an R-rated movie, for example.

The letter briefly addressed the creator uproar over kids’ content, with promises of more clarity.

In September, YouTube reached a settlement with the Federal Trade Commission over its violation of the Children’s Online Privacy Protection Act (COPPA), which required it to pay a $170 million fine and set into place a series of new rules for creators to comply with. These rules require creators to mark videos that are directed at kids (or entire channels, if need be). This, in turn, will limit data collection, put an end to personalized ads on kids’ content, disable comments, and reduce their revenues, creators say.

Creators will also lose out on a number of key YouTube features, The Verge recently reported, including click-through info cards, end screens, notification functions, and the community tab.

YouTube creators say they don’t have enough clarity around where to draw the line between content that’s made for kids and content that may attract kids. For example, family vlog channels and some gaming videos may appeal to kids and adults alike. And if the FTC decides a creator is in violation, they can be held liable for future COPPA violations now that YouTube’s new policy and content labeling system is in place. YouTube’s advice to creators on how to proceed? Consult a lawyer, it has said.

In today’s letter, Wojcicki acknowledges the fallout of these changes, but doesn’t offer any further clarity — only promises of updates to come.

“We know there are still many questions about how this is going to affect creators and we’ll provide updates as possible along the way,” Wojcicki said.

She also points to a long thread on the YouTube Community forum where many questions about the system are being answered — like the policy’s reach, what’s changing, how and when to mark content as being for kids or not, and more. The forum’s Q&A also addressed some of the questions that keep coming up about all-ages content, including some example scenarios. Creators, of course, have read through these materials and say they still don’t understand how to figure out if their video is for kids or not. (And clearly, they don’t want to err on the side of caution at the risk of reduced income.)

That being said, the rise of a kid-friendly YouTube has had a range of negative consequences. YouTube had to shut down comments after finding a ring of child predators on videos with kids, for instance. Parents roped their kids into the “family business” before the children even knew what being public on the internet meant. Some young stars have been put to work more than should be legal due to the lack of child labor laws for online content. There’s even been child abuse at the hands of the parents. Children watching the videos, meanwhile, were being marketed to without their understanding, addicted to consumerism by toy unboxings and playtime videos, and targeted with personalized ads. Kid YouTube was overdue for a reining in.

The letter addresses a few other key issues, as well, including the launch of the new Creator Studio and the latest on the EU’s copyright directive Article 17, which is now being translated into local law. Wojcicki cheers some of the changes to the policy, including the one that secures liability protections when YouTube makes its best efforts to match copyright material with rights owners.

And Wojcicki addresses the growing concerns over creator burnout by reminding video creators to take a break and practice self-care, adding that doing so won’t harm their business. In fact, YouTube scoured its data from the past 6 years and found that on average, channels both large and small had more views when they returned than they had right before they left.

“If you need to take some time off, your fans will understand,” she said.

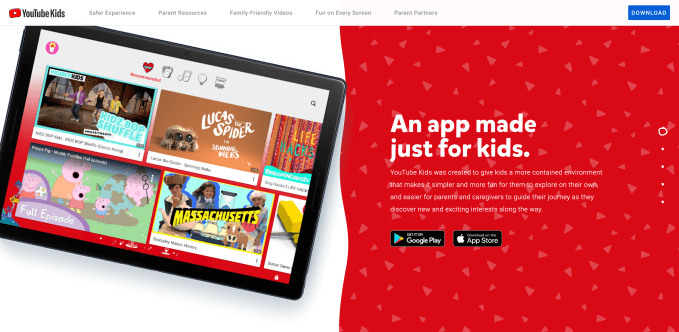

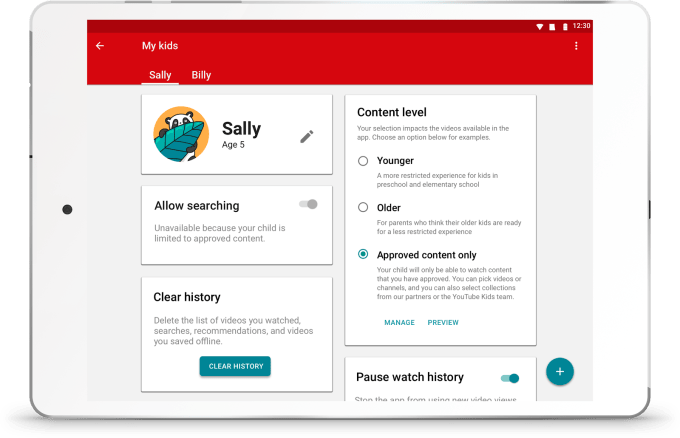

Ahead of the official announcement of an FTC settlement which could force YouTube to direct under-13-year-old users to a separate experience for YouTube’s kid-friendly content, the company has quietly announced plans to launch its YouTube Kids service on the web. Previously, parents would have to download the YouTube Kids app to a mobile device in order to access the filtered version of YouTube.

By bringing YouTube Kids to the web, the company is prepared for the likely outcome of an FTC settlement which would require the company to implement an age-gate on its site, then redirect under-13-year-olds to a separate kid-friendly experience.

In addition, YouTube Kids is gaining a new filter which will allow parents to set the content to being preschooler-appropriate.

The announcement, published to the YouTube Help forums, was first spotted by Android Police.

It’s unclear if YouTube was intentionally trying to keep these changes from being picked up on by a larger audience (or the press) by publishing the news to a forum instead of its official YouTube blog. (The company tells us it publishes a lot of news to the forum site. Sure, okay. But with an FTC settlement looming, it seems an odd destination for such a key announcement.)

It’s also worth noting that, around the same time as the news was published, YouTube CEO Susan Wojcicki posted her quarterly update for YouTube creators.

The update is intended to keep creators abreast of what’s in store for YouTube and its community. But this quarter, her missive spoke solely about the value in being an open platform, and didn’t touch on anything related to kids content or the U.S. regulator’s investigation.

However, it’s precisely YouTube’s position on “openness” that concerns parents when it comes to their kids watching YouTube videos. The platform’s (almost) “anything goes” nature means kids can easily stumble upon content that’s too adult, controversial, hateful, fringe, or offensive.

The YouTube Kids app is meant to offer a safer destination, but YouTube isn’t manually reviewing each video that finds its way there. That has led to inappropriate and disturbing content slipping through the cracks on numerous occasions, and eroding parents’ trust.

Because many parents don’t believe YouTube Kids’ algorithms can filter content appropriately, the company last fall introduced the ability for parents to whitelist specific videos or channels in the Kids app. It also rolled out a feature that customized the app’s content for YouTube’s older users, ages 8 through 12. This added gaming content and music videos.

Now, YouTube is further breaking up its “Younger” content level filter, which was previously 8 and under, into two parts. Starting now, “Younger” applies to ages 5 through 7, while the new “Preschool” filter is for the age 4 and under group. The latter will focus on videos that promote “creativity, playfulness, learning, and exploration,” says YouTube.


YouTube confirmed to TechCrunch that its forum announcement is accurate, but the company would not say when the YouTube Kids web version would go live, beyond “this week.”

The YouTube Kids changes are notable because they signal that YouTube is getting things in place before an FTC settlement announcement that will impact how the company handles kids content and its continued use by young children.

It’s possible that YouTube will be fined by the FTC for its violations of COPPA, as Musical.ly (TikTok) was earlier this year. One report, citing unnamed sources, says the FTC’s YouTube settlement has, in fact, already been finalized and includes a multimillion-dollar fine.

YouTube will also likely be required to implement an age-gate on its site and in its apps that will direct under-13-year-olds to the YouTube Kids platform instead of YouTube proper. The settlement may additionally require YouTube to stop targeting ads on videos aimed at children, as has been reported by Bloomberg.

We probably won’t see the FTC issuing a statement about its ruling ahead of this Labor Day weekend, but it may do so in advance of its October workshop focused on refining the COPPA regulation — an event that has the regulator looking for feedback on how to properly handle sites like YouTube.