Looking for a Thanksgiving dinner table conversation that isn’t politics or professional sports? Okay, let’s talk about killer robots. It’s a concept that long ago leapt from the pages of science fiction to reality, depending on how loose a definition you use for “robot.” Military drones abandoned Asimov’s First Law of Robotics — “A robot may not injure a human being or, through inaction, allow a human being to come to harm” — decades ago.

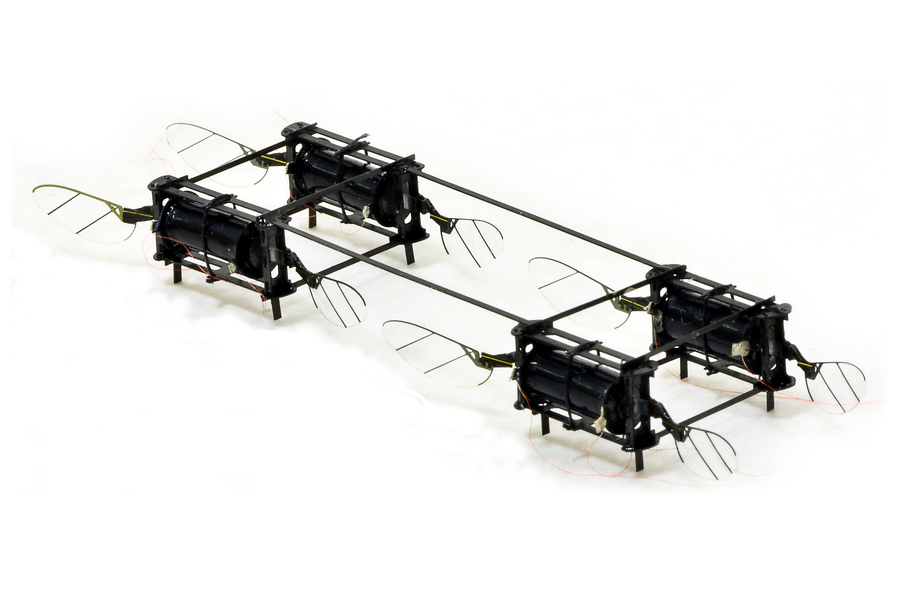

The topic has been simmering again of late due to the increasing prospect of killer robots in domestic law enforcement. One of the era's best known robot makers, Boston Dynamics, raised some public policy red flags back in 2019 when, on our stage, it showcased footage of its Spot robot being deployed as part of Massachusetts State Police training exercises.

The robots were not armed and instead were part of an exercise designed to determine how they might help keep officers out of harm’s way during a hostage or terrorist situation. But the prospect of deploying robots in scenarios where people’s lives are at immediate risk was enough to prompt an inquiry from the ACLU, which told TechCrunch:

We urgently need more transparency from government agencies, who should be upfront with the public about their plans to test and deploy new technologies. We also need statewide regulations to protect civil liberties, civil rights, and racial justice in the age of artificial intelligence.

Last year, meanwhile, the NYPD cut short a deal with Boston Dynamics following a strong public backlash, after images surfaced of Spot being deployed in response to a home invasion in the Bronx.

For its part, Boston Dynamics has been very vocal in its opposition to the weaponization of its robots. Last month, it signed an open letter condemning the practice, along with fellow leading firms Agility, ANYbotics, Clearpath Robotics and Open Robotics. The letter notes:

We believe that adding weapons to robots that are remotely or autonomously operated, widely available to the public, and capable of navigating to previously inaccessible locations where people live and work, raises new risks of harm and serious ethical issues. Weaponized applications of these newly-capable robots will also harm public trust in the technology in ways that damage the tremendous benefits they will bring to society.

The letter was believed to have been, in part, a response to Ghost Robotics' work with the U.S. military. When images of one of its own robotic dogs sporting an autonomous rifle showed up on Twitter, the Philadelphia firm told TechCrunch that it took an agnostic stance with regard to how the systems are employed by its military partners:

We don’t make the payloads. Are we going to promote and advertise any of these weapon systems? Probably not. That’s a tough one to answer. Because we’re selling to the military, we don’t know what they do with them. We’re not going to dictate to our government customers how they use the robots.

We do draw the line on where they’re sold. We only sell to U.S. and allied governments. We don’t even sell our robots to enterprise customers in adversarial markets. We get lots of inquiries about our robots in Russia and China. We don’t ship there, even for our enterprise customers.

Boston Dynamics and Ghost Robotics are currently embroiled in a lawsuit involving several patents.

This week, local police reporting site Mission Local surfaced renewed concern around killer robots – this time in San Francisco. The site notes that a policy proposal being reviewed by the city’s Board of Supervisors next week includes language about killer robots. The “Law Enforcement Equipment Policy” begins with an inventory of robots currently in the San Francisco Police Department’s possession.

There are 17 in all, 12 of which are functioning. They're largely designed for bomb detection and disposal, which is to say that none are designed specifically for killing.

“The robots listed in this section shall not be utilized outside of training and simulations, criminal apprehensions, critical incidents, exigent circumstances, executing a warrant or during suspicious device assessments,” the policy notes. It then adds, more troublingly, “Robots will only be used as a deadly force option when risk of loss of life to members of the public or officers is imminent and outweighs any other force option available to SFPD.”

Effectively, according to the language, the robots can be used to kill in order to potentially save the lives of officers or the public. It seems innocuous enough in that context, perhaps. At the very least, it seems to fall within the legal definition of “justified” deadly force. But new concerns arise in what would appear to be a profound change to policy.

For starters, the use of a bomb disposal robot to kill a suspect is not without precedent. In July 2016, Dallas police officers did just that for what was believed to be the first time in U.S. history. “We saw no other option but to use our bomb robot and place a device on its extension for it to detonate where the suspect was,” police chief David Brown said at the time.

Second, it is easy to see how a new precedent could be invoked after the fact to cover for a robot being used in this manner, whether intentionally or accidentally. Third, and perhaps most alarmingly, one could imagine the language applying to the acquisition of a future robotic system not purely designed for explosive discovery and disposal.

Mission Local adds that SF’s Board of Supervisors Rules Committee chair Aaron Peskin attempted to insert the more Asimov-friendly line, “Robots shall not be used as a Use of Force against any person.” The SFPD apparently crossed out Peskin’s change and updated it to its current language.

The renewed conversation around killer robots in California stems, in part, from Assembly Bill 481. Signed into law by Gov. Gavin Newsom in September of last year, the law is designed to make police action more transparent. Among other things, it calls for an inventory of the military equipment used by law enforcement.

The 17 robots included in the San Francisco document are part of a longer list that also includes the Lenco BearCat armored vehicle, flash-bangs and 15 submachine guns.

Last month, the Oakland Police Department said it would not be seeking approval for armed remote robots. The department said in a statement:

The Oakland Police Department (OPD) is not adding armed remote vehicles to the department. OPD did take part in ad hoc committee discussions with the Oakland Police Commission and community members to explore all possible uses for the vehicle. However, after further discussions with the Chief and the Executive Team, the department decided it no longer wanted to explore that particular option.

Let’s talk about killer robots by Brian Heater originally published on TechCrunch