Welcome back to This Week in Apps, the weekly TechCrunch series that recaps the latest in mobile OS news, mobile applications and the overall app economy.

Global app spending reached $65 billion in the first half of 2022, up only slightly from the $64.4 billion during the same period in 2021, as hypergrowth fueled by the pandemic has slowed down. But overall, the app economy is continuing to grow, having produced a record number of downloads and consumer spending across both the iOS and Google Play stores combined in 2021, according to the latest year-end reports. Global spending across iOS and Google Play last year was $133 billion, and consumers downloaded 143.6 billion apps.

This Week in Apps offers a way to keep up with this fast-moving industry in one place with the latest from the world of apps, including news, updates, startup fundings, mergers and acquisitions, and much more.

Do you want This Week in Apps in your inbox every Saturday? Sign up here: techcrunch.com/newsletters

Top Stories

TikTok is getting a rating system

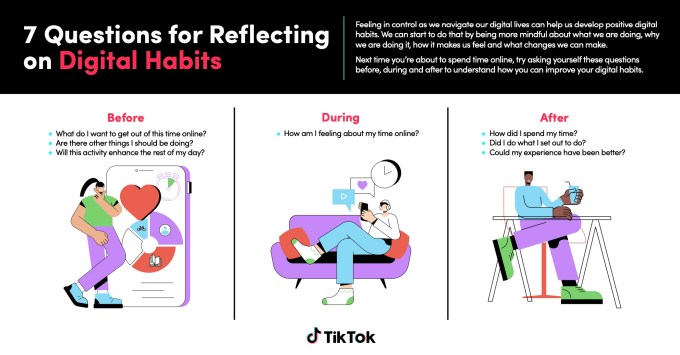

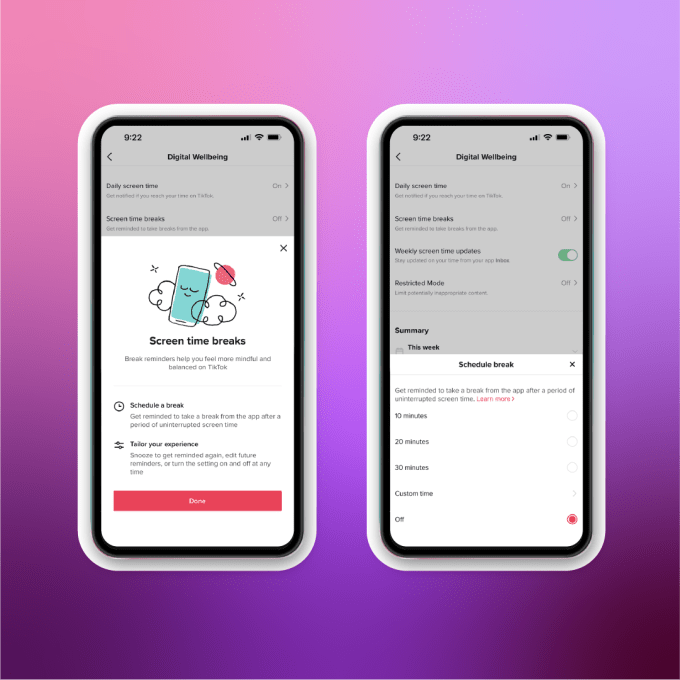

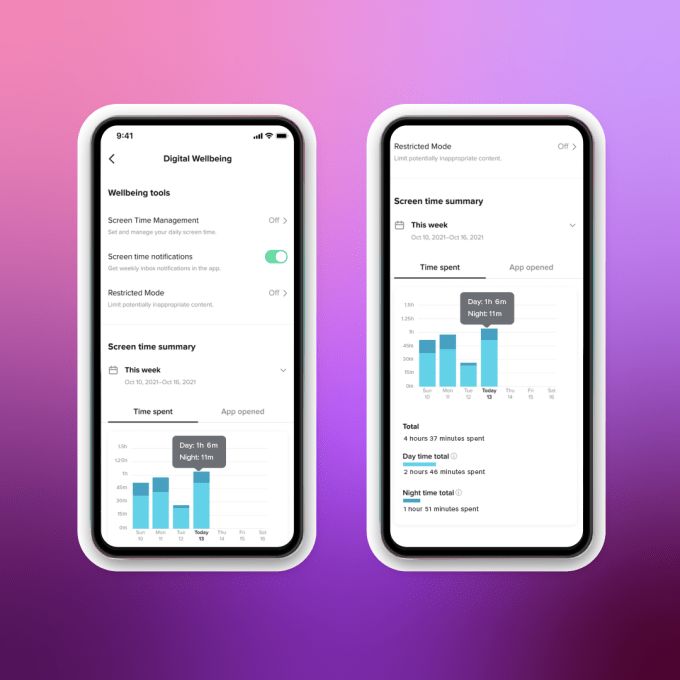

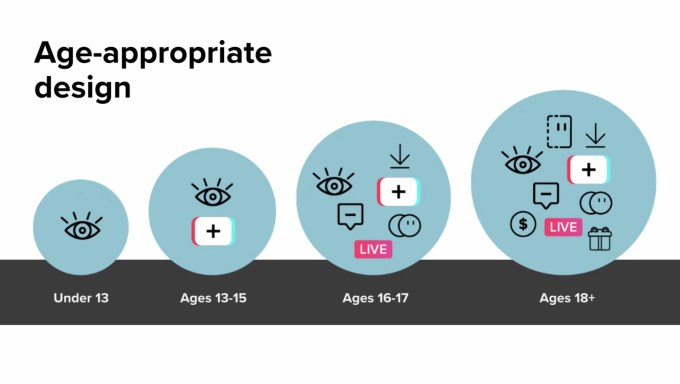

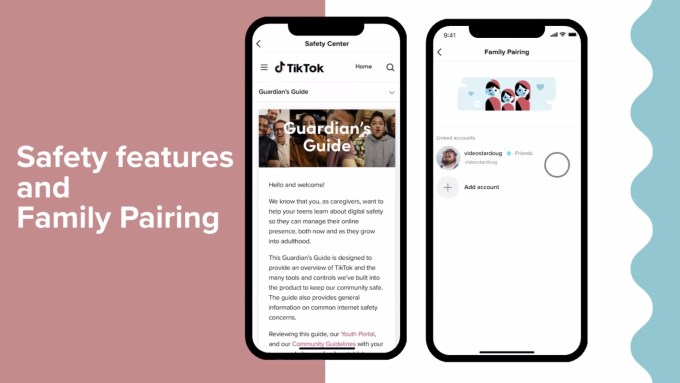

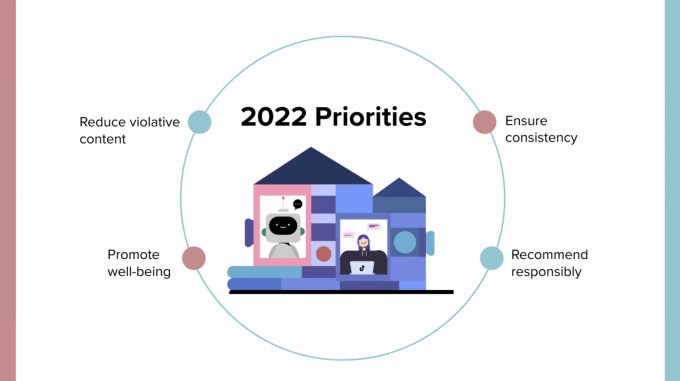

Some TikToks are too racy or mature for younger teens — a problem TikTok aims to address with the upcoming launch of a new content ratings system. The “Content Levels” system, as it will be called, is meant to provide a means of classifying content on the video app — similar to how movies, TV shows and video games today feature age ratings.

TikTok acknowledged some content on its app may contain “mature or complex themes that may reflect personal experiences or real-world events that are intended for older audiences.” It will work to assign these sorts of videos a “maturity score” that will block them from being viewed by younger users. Not all videos will be rated, however. The goal will be to rate videos that get flagged for review and those that are gaining virality. Initially, the system will focus on preventing inappropriate content from reaching users ages 13 to 17, TikTok says, but will become a broader system over time.

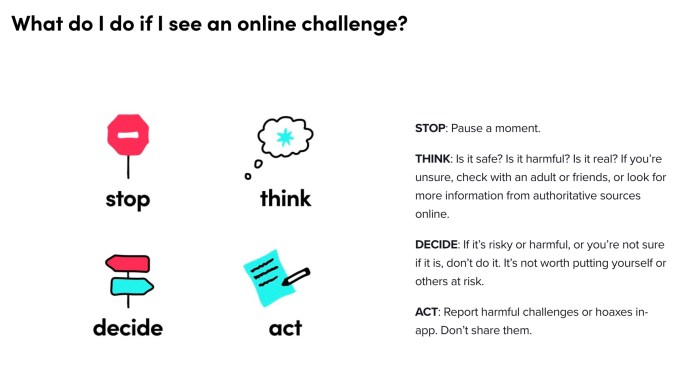

The launch follows a 2021 congressional inquiry into social apps, including TikTok and others, which focused on how their algorithmic recommendation systems could be promoting harmful content, like eating disorder content, to younger users. TikTok has also been making headlines for its promotion of dangerous and destructive viral stunts, like kids destroying public school bathrooms, shooting each other with pellet guns or jumping off milk crates, among other things.

TikTok, like other social apps, is in hot water over the potential negative impacts to minors using its service. But it’s under particular scrutiny since the reveal that parent company ByteDance — in China — was accessing U.S. TikTok user data. Alongside the maturity ratings, TikTok says it will also launch content filters that will let users block videos with hashtags or certain words from their feeds.

For all its ills, TikTok has more developed parental controls than its U.S. rivals and the launch of a content ratings system could push other apps reaching minors, like Instagram and Snapchat, to do the same.

Will he or won’t he? The Twitter deal heads to court

Elon wants out. The Tesla and SpaceX exec has got a serious case of buyer’s remorse. Musk offered to buy Twitter at $54.20 per share — it’s a weed joke! Get it? 420! — but the stock today is only trading at $36.29 per share. So it’s not so funny anymore. Now the exec is attempting to use some flimsy excuses about “bots” on the network in order to get out of the legal agreement. But Twitter just said, see you in court! (Well, in legalese, it said Musk’s termination was “invalid and wrongful.”) Twitter then delivered a few more jabs in a letter filed with the SEC, noting Musk “apparently believes that he — unlike every other party subject to Delaware contract law — is free to change his mind, trash the company, disrupt its operations, destroy stockholder value, and walk away.” Burn!

Sadly, caught in the chaos are Twitter’s advertisers, some of whom are exiting, and of course, the Twitter employees who often don’t know what’s going on, who will prevail or what Musk may do if the deal is forced through. (Vent here if you want!) And what does this mean for Twitter’s conference Chirp later this year, if the deal is still in limbo?

This has been such a weird and fraught acquisition since day one, with some poor folks at the SEC having to collate tweets of poop emoji and memes as investor alerts. It’s also one that makes a pretty good case as to why we should tax billionaires more — too much money turns large companies and people’s livelihoods into toys for their amusement, apparently.

Non-game revenue tops games for the first time on the U.S. App Store

Image Credits: TechCrunch

A major shift in the U.S. app economy has just taken place. U.S. consumer spending in non-game mobile apps surpassed spending in mobile games for the first time in May 2022, and the trend continued in June. This drove the total revenue generated by non-game apps higher for the second quarter, reaching about $3.4 billion on the U.S. App Store, compared with $3.3 billion spent on mobile games.

After the shift in May, 50.3% of the spending was coming from non-game apps by June 2022, according to new findings in a report from app intelligence firm Sensor Tower. By comparison, games had accounted for more than two-thirds of total spending on the U.S. App Store just five years ago.

The trend was limited to the U.S. App Store and was not seen on Google Play, however. In Q2, games accounted for $2.3 billion in consumer spending on Google Play in the U.S., while non-game apps accounted for about $1 billion. Read more about the new data here.

Kids and teens now spend more time on TikTok than YouTube

A study of 400,000 families performed by parental control software maker Qustodio found that kids and teens ages 4-18 now spend more time watching videos on TikTok than they do watching YouTube — and that’s been the case since June 2020, in fact. That month, TikTok overtook YouTube for the first time, as this younger demographic began averaging 82 minutes per day on TikTok versus an average of 75 minutes per day on YouTube.

YouTube had still been ahead in 2019 as kids and teens were spending an average of 48 minutes on the platform on a global basis, compared with 38 minutes on TikTok. But with the shift in usage that took place in June 2020, TikTok came out on top for 2020 as a whole, with an average of 75 minutes per day, compared with 64 minutes for YouTube.

In the years since, TikTok has continued to dominate with younger users. By the end of 2021, kids and teens were watching an average of 91 minutes of TikTok per day compared with just 56 minutes per day spent watching YouTube, on a global basis.

Likely aware of this threat, YouTube launched its own short-form platform called Shorts, which it now claims has topped 1.5 billion logged-in monthly users. The company believes this will push users toward its long-form content — but so far, that hasn’t happened, it seems. Read the full report here.

TikTok is eating into Google Search and Maps, says Google

In a bit of an incredible reveal (if one that helps Google from an anticompetitive standpoint), a Google exec admitted that younger people’s use of TikTok and Instagram is actually impacting the company’s core products, like Search and Maps.

TechCrunch broke this news following comments made at Fortune’s Brainstorm Tech event this week.

“In our studies, something like almost 40% of young people, when they’re looking for a place for lunch, they don’t go to Google Maps or Search,” said Google SVP Prabhakar Raghavan, who runs Google’s Knowledge & Information organization. “They go to TikTok or Instagram.”

Google confirmed to us his comments were based on internal research that involved a survey of U.S. users, ages 18 to 24. The data has not yet been made public, we’re told, but may later be added to Google’s competition site, alongside other stats — like how 55% of product searches now begin on Amazon, for example.

Weekly News

Platforms: Apple

- The iOS 16 public beta has arrived. It’s here, it’s surprisingly functional, and it brings a number of great new features to iPhone users, including a customizable Lock Screen with support for new Lock Screen widgets, more granular Focus Mode features, an improved messaging experience with an Undo Send option, SMS filters, iCloud Shared Photo Library for families, CAPTCHA bypassing and a clever new image cutout feature that lets you “pick up” objects from photos and copy them into other apps. On iPadOS 16, there are a number of specialized features, including the new Stage Manager multitasking interface.

Apple’s new visual lookup feature. Image Credits: Apple

Platforms: Google

- Samsung rolled out its One UI 4.5 update for Galaxy Watches, which is powered by Wear OS 3.5. The update includes a full QWERTY keyboard, customizable watch faces and dual-SIM support, and will run on the Galaxy Watch4, the Galaxy Watch4 Classic and other models.

- Google expanded its Play Games for PC beta, which brings Android apps to Windows, to more regions, including Thailand and Australia.

- Google released the fourth and final Android 13 beta ahead of its official launch, which the company says is “just a few weeks away.” There were not many changes with this update, as Google already reached platform stability with Android 13 beta 3 last month.

E-commerce

- TikTok launched a new educational program targeting small businesses that want to learn how to use its platform to drive sales. The launch follows TikTok’s decision to pause the expansion of its Shop initiative. The program walks businesses through setting up an account, creating content and using TikTok ads products, and features coaching and tips from other SMBs.

- NYC fast delivery apps could face a shutdown if new bills proposed by New York’s City Council get approved. The city is concerned about the dark stores’ workers’ safety.

Augmented Reality

- Shopify showed off a wild internal experiment using Apple’s new RoomPlan API that allowed users to more easily reset their room in order to see how new furniture could work. The test lets you remove the furniture already in your room to create a lifelike digital twin of your room that can be overlaid in your real space using AR. Users could then swipe through new room sets to see how they’d look in their own space. Shopify said it has nothing in production related to this right now, but wow, someone should!

Fintech/Crypto

- FlickPlay, an AR social app that lets users unlock NFTs and display them in a wallet, was among those selected to participate in Disney’s 2022 startup accelerator, among others focused on AR, web3 and AI experiences.

Social

Image Credits: TechCrunch

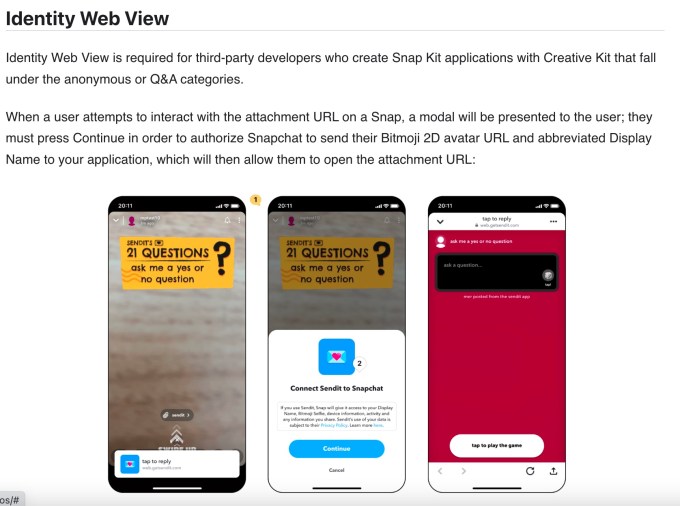

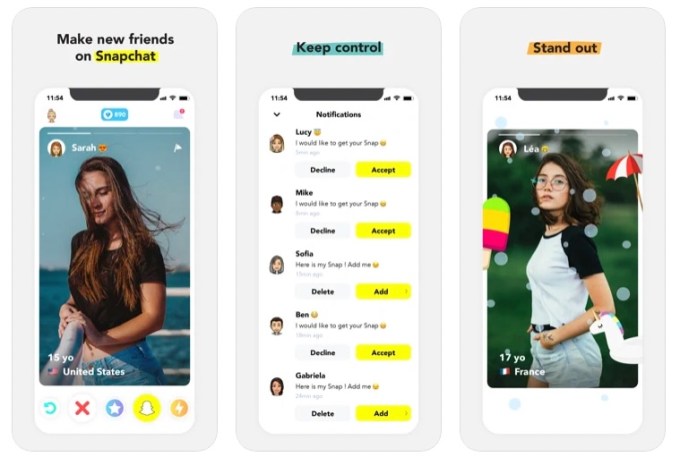

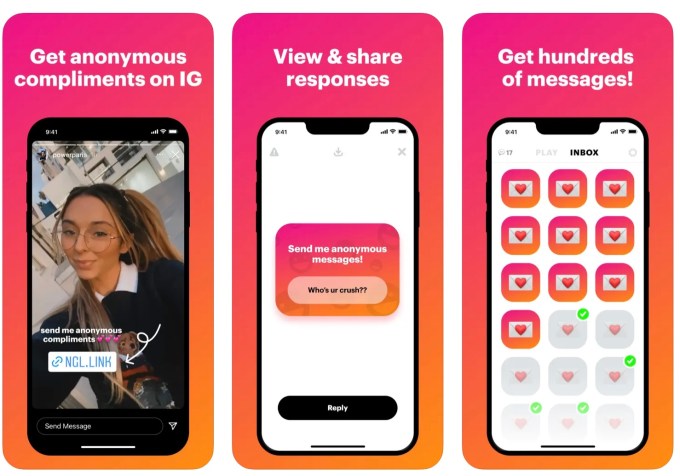

- Two anonymous social Q&A apps are heading to court. Sendit’s maker, Iconic Hearts, is suing rival NGL for stealing its proprietary business data in order to build what’s since become a top-ranked Q&A app on the App Store. Of note, the court filing reveals that the apps are using fake questions to engage their users — something many had already suspected.

- Reddit and GIPHY partner. Reddit is now allowing its safe-for-work and non-quarantined subreddits to enable GIPHY for use in the comments. Those moderators who don’t want the GIF comments will need to opt out. Previously GIFs in comments were available as a paid subscription perk (via Reddit’s Powerups), but most of these will now be available for free.

- TikTok’s head of global security stepped down. Someone had to pay for that security debacle which found that U.S. TikTok user data was being viewed in China. Global security head Roland Cloutier will be stepping down effective September 2 and will be replaced by Kim Albarella, who’s been appointed the interim head of TikTok’s Global Security Organization.

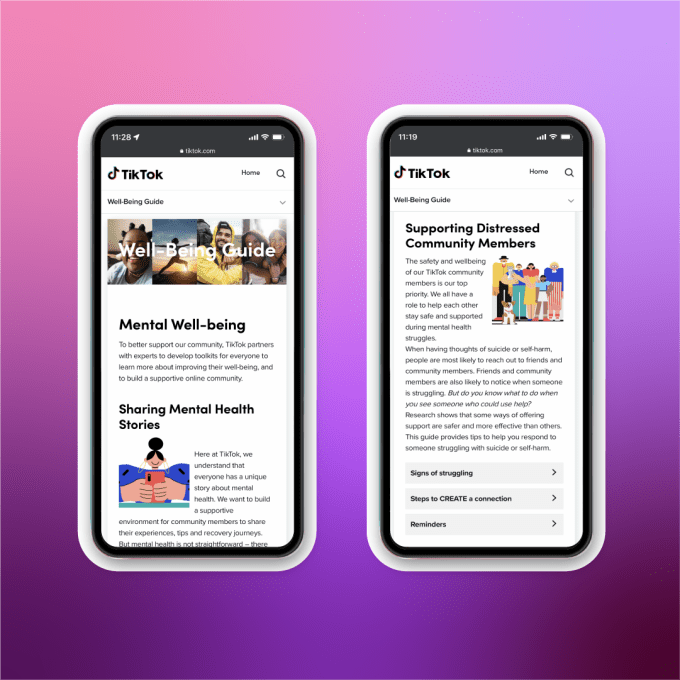

- A children’s rights group called out TikTok for age-appropriate design issues, ahead of TikTok’s launch of new safety features. The group’s research looked at the default settings and terms that various apps, including WhatsApp and Instagram, offer to minors across 14 countries, including the U.S., Brazil, Indonesia and the U.K. The report noted TikTok was defaulting 17-year-olds to public accounts outside of certain EU markets and the U.K., lacked terms in people’s first languages and wasn’t being transparent about age requirements, among other things.

- Instagram began testing a Live Producer tool that lets creators go live from their desktop using streaming software, like OBS, Streamyard and Streamlabs. Only a small group of participants currently has access to the tool, which opens up access to using additional cameras, external mics and graphics.

- Instagram also rolled out more features to its creator subscription test, including subscriber group chats, reels and posts for subscribers only, and a subscriber-only tab on a creator’s profile.

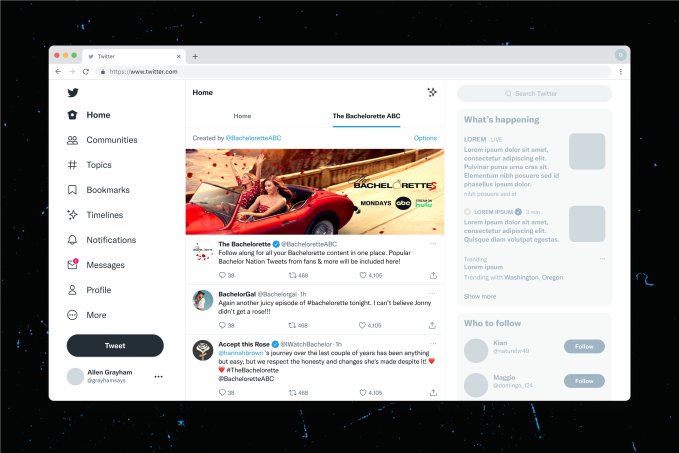

- Twitter is testing custom timelines built by developers around specific themes, starting with a custom timeline for The Bachelorette in the U.S. This is the latest product that attempts to give users different views into Twitter, along with Lists, Topics, Communities and Trending. It’s also now testing a feature that reminds users to add image descriptions for accessibility.

- Facebook started testing a way for users to have up to five separate profiles tied to a single account. The company said this would allow users to take advantage of different profiles for interacting with specific groups — like a profile for use with friends and another one for coworkers.

- Activist investor Elliott Management told Pinterest that it has acquired a 9%+ stake in the company. The Pinterest stock jumped more than 15% after hours on the news.

Messaging

- WhatsApp rolled out the ability for users to react to messages using any emoji, instead of just the chosen six it had offered previously. The feature is one of several that WhatsApp developed for its broader Communities update but is making available to all app users.

- Meta’s smartglasses, Ray-Ban Stories, now let users make calls, hear message readouts and send end-to-end encrypted messages with WhatsApp. The glasses already support Messenger and offer other features like photo-taking and video recording, listening to music and more.

Dating

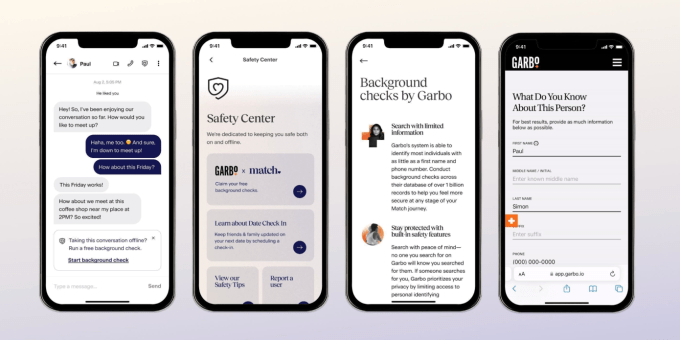

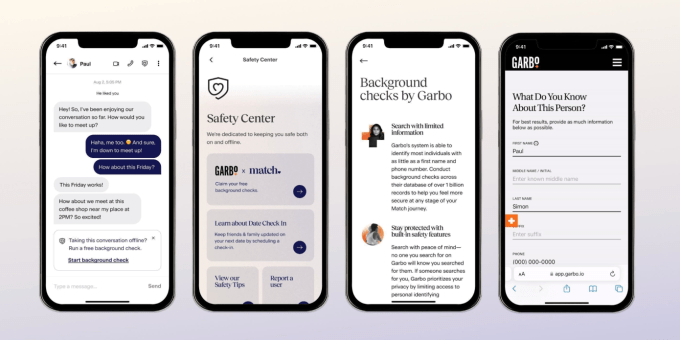

Image Credits: Match

- Match Group is expanding its use of free background checks across more of its dating apps. The feature, powered by Garbo, was first launched on Tinder earlier this year. It’s now available on other Match Group apps, including Match and Stir.

- Google has responded to Match Group’s antitrust lawsuit in a new court filing, which refers to Match’s original complaint as a “cynical attempt” to take advantage of Google Play’s distribution platform and other tools while attempting to sidestep Google’s fees. The two tech giants have been battling it out in court after Match sued Google this May over its alleged monopoly power in Android app payments. The companies have a temporary truce that sees Match setting aside its commissions in escrow while they await the court’s decision. If Google prevails, it wants to kick Match out of its app store altogether.

Streaming & Entertainment

- Truecaller is taking on Clubhouse — even though the hype has worn off over live audio. The caller ID app maker ventured into a new market with the launch of Open Doors, a live audio app that lets people communicate in real time. Unlike Clubhouse and others, the new app offers no rooms, invites, recording tools or extensive moderation features. It claims to only scan user contacts on the local device.

- Netflix inked a deal with Microsoft for its upcoming ad-supported plan. According to reports, Netflix appreciated Microsoft’s approach to privacy and ability to iterate quickly. (It also helped it wasn’t a streaming competitor, like Comcast’s NBCU or Roku.)

- Apple added a new perk for Apple Music subscribers, Apple Music Sessions, which gives listeners access to exclusive releases in spatial audio that have been recorded in Apple’s music studios around the world. The sessions began by featuring country artists, including Carrie Underwood and Tenille Townes.

Gaming

- Twitter’s H1 2022 report found there were roughly 1.5 billion tweets about gaming on its platform, up 36% year-over-year. Genshin Impact (No. 1) and Wordle (No. 2) were the most tweeted-about games.

Reading & News

- Upnext launched a read-it-later app and Pocket competitor for iOS, iPad and web. The app aims to differentiate itself by supporting anything users want to save, not just articles but also things like videos, podcasts, Twitter threads, PDFs and more. It then organizes this in a home screen that curates your collection with Daily Picks, and offers a swipe-based interface for archiving content.

Government & Policy

- TikTok this week paused a privacy policy change in Europe after a regulator inquiry over how the platform planned to stop asking users for consent to receive targeted ads.

- Confirming earlier reports, Kakao said it’s removing the external payment link from its KakaoTalk messaging app on the Play Store to come into compliance with Google’s terms, after being blocked from issuing updates. The move brought more attention to the policy and saw the regulator get involved in talks, which was likely the point of Kakao’s protest in the first place.

- After an FTC commissioner urged the U.S. to ban TikTok, rival Triller reported a surge in users. Triller had pivoted to focus more on entertainment and events as TikTok established itself as the top short form video platform in the U.S.

Funding and M&A

Match Group acquired the members-only dating app The League, which focuses on matching ambitious and career-focused professionals. The app has previously faced accusations it’s elitist, particularly because it screens and vets members after an application process instead of being open to all. Deal terms weren’t revealed.

Spotify acquired the Wordle-inspired music-guessing game Heardle for an undisclosed sum. The company believes the deal could help support music discovery in its app and could help drive organic social sharing. Heardle’s website had 41 million visits last month.

Tutoring marketplace and app Preply raised $50 million in Series C funding led by edtech-focused Owl Ventures. The startup has 32,000 tutors from 190 countries teaching over 50 languages, it says, and claims to have grown revenues and users 10x since 2019.

GoHenry, a fintech app for kids, acquired Pixpay to help it expand into Europe. The latter has 200,000 members across France and Spain. U.K.-based GoHenry has over 2 million users in the U.K. and U.S.

Japan’s SmartBank raised $20 million in Series A funding for its prepaid card and finance app. The round was led by Globis Capital Partners. The startup claims 100,000+ downloads so far and is aiming for 1 million by the end of next year.

Israeli company ironSource is merging with the game development platform Unity Software, after the latter saw its share price fall more than 70% in 2022, leaving it with a market cap of under $12 billion. IronSource went public a year ago at an $11.1 billion valuation and is valued at $4.4 billion at the time of the merger. Silver Lake and Sequoia will invest $1 billion in Unity after the merger.

Consumer fintech startup Uprise raised $1.4 million in pre-seed funding from a range of investors. The company offers a website and app aimed at Gen Z users that takes in their full financial picture, including overlooked items like employer benefits, and offers recommendations.

Indian fintech OneCard raised over $100 million in a Series D round of financing that values the business at over $1.4 billion. The company offers a metal credit card controlled by an app that also offers contactless payments. The startup has over 250,000 customers.

Stori, a Mexican fintech offering credit cards controlled by an app, raised $50 million in equity at a $1.2 billion valuation and another $100 million in debt financing. BAI Capital, GIC and GGV Capital co-led the equity portion of the deal. The company claims to have seen 20x revenue growth in 2021, but doesn’t share internal metrics.

U.K. stock trading app Lightyear raised $25 million in Series A funding led by Lightspeed. The startup said it’s launching in 19 European countries, including Germany and France.

Downloads

Linktree launches a native app

Linktree, a website that allows individuals, including online creators, to manage a list of links they can feature in their social media bios via a Linktree URL, launched its first mobile app this week. The new app for iOS and Android lets users create a Linktree from their phone, add and manage their links, customize their design and more. Users can also track analytics, sales and payments, among other things. (You can read more about the new app here on TechCrunch.)

