Following last month’s NBC News investigation into Pinterest that exposed how pedophiles had been using the service to curate image boards of young girls, the company on Tuesday announced further safety measures for its platform, including a new set of parental controls and updated age verification policies. However, the company also said that it would soon re-open some of its previously locked-down features for teens, allowing them to once again message and share content with others.

The investigation had stained Pinterest’s carefully crafted reputation as one of the last safe and positive places on the internet. Instead, the report found that, much like any other social media platform, Pinterest was at risk of being abused by bad actors. It found that grown men were using the site to create image boards of young girls, sexualizing innocuous photos of children doing things like gymnastics, dancing, sticking out their tongues, wearing bathing suits, and more. In addition, Pinterest’s recommendation engine was making it easier to find similar content, as it would suggest more images like those the predators initially sought out.

Immediately after the report, two U.S. senators reached out to Pinterest for answers about what was being done and to push for more safety measures. The company said it increased the number of human content moderators on its team and added new features that allow users to report content and accounts for “Nudity, pornography or sexualized content,” including those involving “intentional misuse involving minors.” Previously, it had allowed users to report only spam or inappropriate cover images.

Pinterest also swept its platform to deactivate thousands of accounts — a move that may have accidentally disabled legitimate accounts in the process, according to some online complaints.

Now, the company says even more safety controls are in the works.

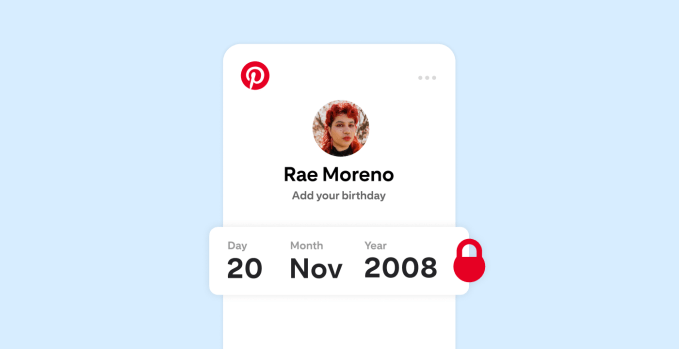

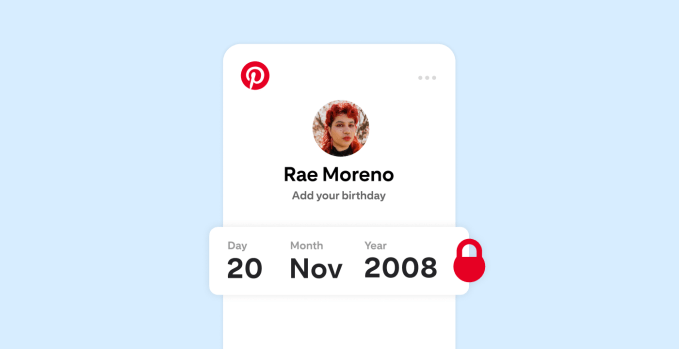

For starters, it says it will expand its age verification process. By the end of this month, if someone who entered their age as under 18 tries to edit their date of birth on the Pinterest app, the company will require them to send additional information to its third-party age verification partner. This process includes sending a government ID or birth certificate and may also require the users to take a selfie for an ID photo.

Image Credits: Pinterest

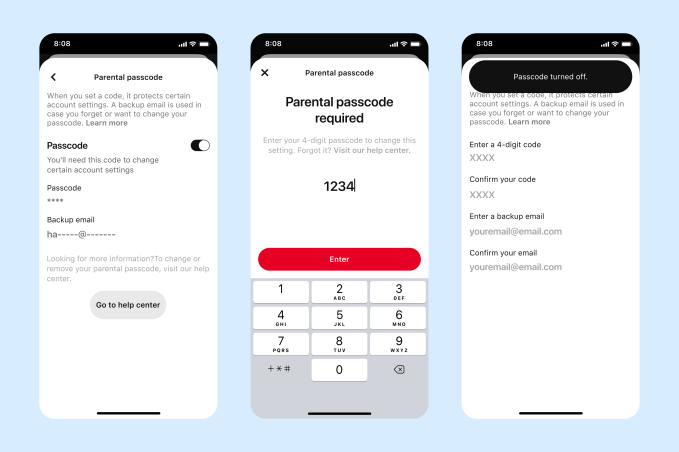

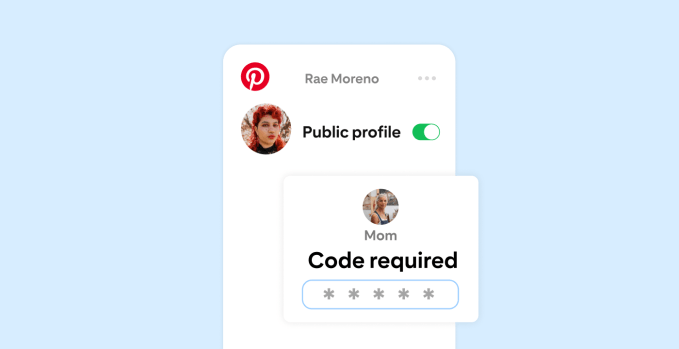

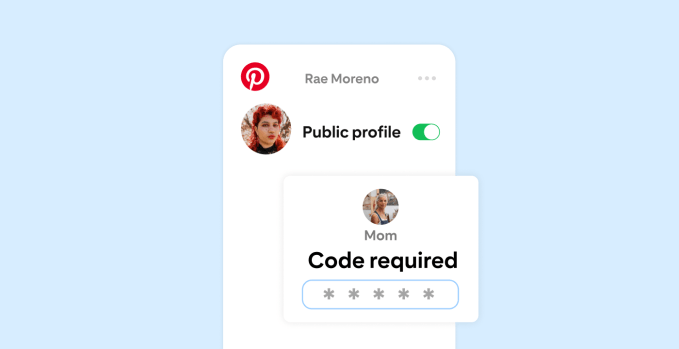

In addition, Pinterest announced it will soon offer more parental controls. Parents and guardians of children under the age of 18 will have the ability to require a passcode before their teen is allowed to change certain account settings. This would prevent a younger child from changing their account to an adult’s age, which matters because minors’ accounts have further protections.

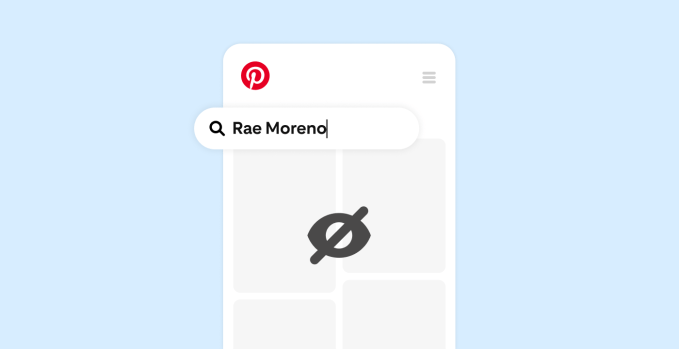

On Pinterest, teens under the age of 16 have accounts that are set private by default and aren’t discoverable by others on the service. Part of the reason so many pedophiles had been able to discover the young girls’ photos is that the girls had been using accounts where their ages weren’t set accurately.

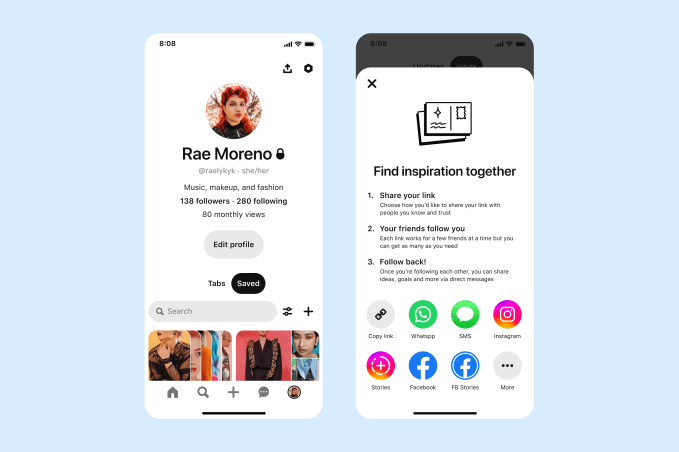

In addition, boards and Pins saved by teens under 16 aren’t visible or accessible to anyone but the user, either. Messaging features and group boards are also not available to this demographic. But Pinterest says that will soon change, noting in its Help Documentation that it’s currently “working on updating these features so you can safely connect with those you know.” In a blog post, the company explains that it will soon re-introduce the ability for teens to “share inspiration” with the people they know, “as long as they give them permission.”


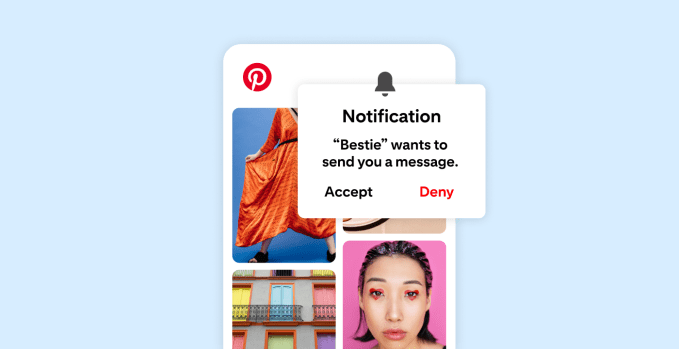

Pinterest further touted other teen safety measures in its post, including its ban on beauty filters, which have negative impacts on mental health, as well as its bans on weight loss ads and policies against body shaming.

Unfortunately, the problems Pinterest has been facing, which NBC News exposed, are not limited to its service. A lack of regulation over social media, including outdated children’s online safety laws, has led to a sort of free-for-all in the U.S. where companies are creating their own policies and making up their own rules. Financially, there’s little incentive to do anything that would make it harder for people to sign up, use and stay engaged with their platforms, due to their ad-supported natures.

But despite hauling in tech execs for numerous briefings over the years, Congress has failed to pass legislation to regulate social media. This has led to some states now even crafting their own laws, as Utah just did, which is complicating matters further.

In the meantime, parents and families are dealing with the consequences. As anywhere else on the internet, when children’s images are posted publicly, they can attract a dangerous audience, and platforms historically have not done enough to protect minors.

Recently, there’s been heightened awareness of this problem thanks to work from creators like TikTok’s Mom.Uncharted, aka Sarah Adams, whose viral videos have helped to explain how online predators are leveraging creator content, including the work of family vloggers, for dangerous and repulsive purposes. She and others who cover the topic have been calling for parents to pull back on posting public images of their children online and stop supporting creators who share photos and videos of young children.

Young people who were forced by their parents to create online content before the age of consent are also pushing for regulations that would protect future generations. Congress has yet to act here, either.


But on Pinterest, it’s not necessarily parents who are publishing the kids’ photos — Pinterest is often used by children, which parents have tended not to mind, believing in the site’s “safe” reputation.

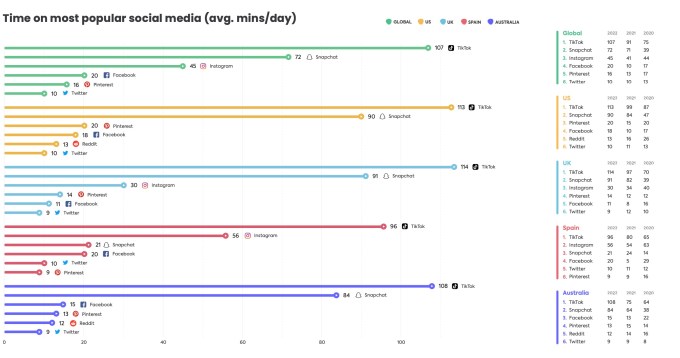

Today, some 450 million people use Pinterest every month, and 60% are women, according to Pinterest’s official statistics. Millennials are the largest age group while the second largest is women ages 18-24. In theory, Pinterest’s policies should restrict its use to users 13 and up. However, the real demographics of Pinterest usage may not be captured in online statistics, as kids often lie about their age to use apps and websites intended for an older audience. In an annual study of kids’ and teens’ app usage by parental control software maker Qustodio, for instance, Pinterest was the No. 3 social media platform in the U.S. in terms of time spent. Globally, it was No. 5.

“Our mission is to bring everyone the inspiration to create a life they love, and it is our guiding light in how we have created Pinterest, developed our products, and shaped our policies,” Pinterest stressed in the announcement. “As part of this on-going work, we’ll continue to focus on ways that we can keep teens safe,” it promised.

After an investigation exposes its dangers, Pinterest announces new safety tools and parental controls by Sarah Perez originally published on TechCrunch