How to submit a guest column to TechCrunch

Sharing hot takes, conventional wisdom and infographics won’t help you gain media traction.

Online audiences want expertise and opinions that are supported by facts and relevant experience. No one builds credibility by being the loudest or first to comment.

To level the playing field for people who want to share their knowledge and opinions, TechCrunch operates a guest contributor program. This isn’t sponsored content: Each submission is evaluated on its merits. The program’s overall goal is to showcase a diverse range of perspectives on tech-related issues.

Guest contributions fall into two categories:

- TechCrunch+: Strategies and tactics for building and scaling startups.

- TechCrunch Opinion: Editorials about tech-related topics in the public interest.

The most common reasons we turn down submissions

- The article was too promotional.

- The author addressed a general trend or a basic best practice that’s already well understood.

- The post consisted of conventional “thought leadership,” but contained few personal or actionable insights.

Before submitting a guest column, please review our site to see if we’ve published something similar.

How to submit a TechCrunch+ guest column

TechCrunch+ guest columns offer actionable ideas that readers can use to make better decisions while building and scaling startups.

We’re not looking for general thought leadership, basic best practices or general overviews of new tech or individual sectors. To meet our standards, TC+ guest posts must have a clear business focus and share strategies and tactics that will produce quantifiable results.

Ideally, TechCrunch+ guest articles offer information that readers can put to work inside their own companies and test for themselves — the more specific, the better. Great topics include advanced how-to guides on fundraising, entrepreneurship, growth, product management, recruiting, or in-depth discussions of where tech industry sectors are heading.

We’re only interested in columns that offer actionable advice written by authors who have direct experience in the topic they’re covering.

Focus

- TechCrunch+ contributors have a lot of leeway to explore their topics. Since we’re writing for informed audiences, we encourage more length than may be normal on other sites — 1,000–1,500 words is typical, but we are flexible.

- We do not accept guest posts that are based in whole or in part on interviews.

- We encourage contributors to mention their companies in passing to establish their credentials, but we don’t accept posts in which they center their company’s products/services as a preferred solution.

Review process

- An editor reviews all submissions, but due to the high volume of emails we receive, we are not able to reply to everyone who contacts us.

- Please email drafts to guestcolumns@techcrunch.com as .TXT., .DOC, or .PDF attachments; shared Google documents; or in the body of your email.

- We accept detailed outlines or completed drafts for consideration.

- Only submit images you own or have the right to use (.JPEG, .GIF, .PNG, or .TIFF at least 1,000px wide).

Syndication

- Authors are free to republish the full article anywhere they please one week after we run the story, as long as they link to and mention the original article.

- We do not republish articles that have been published elsewhere first.

Paywall

- We place the TechCrunch+ paywall after the first few hundred words of the article body, so please provide a preview of the key information from the article in that space.

- After we’ve published, we’ll share a link and a PDF with the complete text. Reprints are available for purchase via a third party.

Deadline

- There is not one unless both parties agree to something. We’ll typically schedule the article to run within a week or so once it nears the final stages of production.

Conflicts of interest

- We want readers to use our information to make big decisions, which means they must be able to trust what we are providing them. So, we minimize conflicts of interest where possible and are fully transparent where they occur.

- Please note anywhere you do have potential conflicts. In fact, we’ve found that writing about your own experiences or decisions helps reinforce your credibility.

Generative AI

- We do not accept contributions that include text or images produced via generative artificial intelligence.

How to submit a TechCrunch Opinion guest column

We publish TechCrunch Opinion guest columns without a paywall because they address technology-related topics in the public interest.

Our goal is to provide readers material written by authors who represent a broad array of diverse opinions and lived experiences. Topics for these columns must be timely, convincingly in the public interest, and not something that our staff could write about directly.

Beyond stating the author’s opinion, these columns should give readers a better understanding of the subject under discussion and help them understand how it’s relevant to their interests.

Focus

- Guest contributors have a lot of leeway to explore their topics. Since we’re writing for informed audiences, we encourage more length than may be normal on other sites.

- We are somewhat flexible, but most TechCrunch Opinion articles are 750–1,000 words.

- We do not accept guest editorials that are based in whole or in part on interviews.

Review process

- An editor reviews all submissions, but due to the high volume of emails we receive, we are not able to reply to everyone who contacts us.

- Please email drafts to opinions@techcrunch.com as .TXT., .DOC, or .PDF attachments; shared Google documents; or in the body of your email.

- We accept detailed outlines or completed drafts for consideration.

- Only submit images you own or have the right to use (.JPEG, .GIF, .PNG, or .TIFF at least 1,000px wide).

Editing

- If accepted, we will edit submissions for clarity and fact-check them prior to publication. If we have substantial edits or questions, we’ll contact contributors before publishing.

- We only publish information we can verify, so please provide links to primary sources.

Syndication

- Authors are free to republish the full article elsewhere one week after we run their post on TechCrunch.com, as long as they link back to and mention the original article.

- We do not republish articles that have been published elsewhere first.

Paywall

- We publish TechCrunch Opinion articles without a paywall. They’re always free to read.

Deadline

- We don’t assign deadlines unless both parties agree to something. We’ll typically schedule an article to run within a week or so after we accept it for publication.

Conflicts of interest

- We want readers to use our site to make major decisions more successfully, which means they need to be able to trust the information we are providing them.

- We will minimize conflicts of interest where possible and are fully transparent where they occur. If you have a personal or financial relationship with a topic you write about, please let us know.

- We’ve found that writing about your own experiences or decisions helps reinforce your credibility to our audience.

Generative AI

- We do not accept contributions that include text or images produced via generative artificial intelligence.

We don’t work with content marketers, SEO specialists or freelancers

TechCrunch’s guest contributor program does not accept submissions from freelance content writers, content marketers, bloggers, SEO specialists, or other people who distribute content on behalf of third parties, and we’ve never charged a fee for publishing guest articles.

If you’re interested in placing paid, sponsored content on TechCrunch, please complete this form and someone will be in touch if they are interested in working with you.

How to submit a freelance article

To pitch a freelance piece to TechCrunch, contact freelance@techcrunch.com.

How to submit a guest column to TechCrunch

Sharing hot takes, conventional wisdom and infographics won’t help you gain media traction.

Online audiences want expertise and opinions that are supported by facts and relevant experience. No one builds credibility by being the loudest or first to comment.

To level the playing field for people who want to share their knowledge and opinions, TechCrunch operates a guest contributor program. This isn’t sponsored content: Each submission is evaluated on its merits. The program’s overall goal is to showcase a diverse range of perspectives on tech-related issues.

Guest contributions fall into two categories:

- TechCrunch+: Strategies and tactics for building and scaling startups.

- TechCrunch Opinion: Editorials about tech-related topics in the public interest.

The most common reasons we turn down submissions

- The article was too promotional.

- The author addressed a general trend or a basic best practice that’s already well understood.

- The post consisted of conventional “thought leadership,” but contained few personal or actionable insights.

Before submitting a guest column, please review our site to see if we’ve published something similar.

How to submit a TechCrunch+ guest column

TechCrunch+ guest columns offer actionable ideas that readers can use to make better decisions while building and scaling startups.

We’re not looking for general thought leadership, basic best practices or general overviews of new tech or individual sectors. To meet our standards, TC+ guest posts must have a clear business focus and share strategies and tactics that will produce quantifiable results.

Ideally, TechCrunch+ guest articles offer information that readers can put to work inside their own companies and test for themselves — the more specific, the better. Great topics include advanced how-to guides on fundraising, entrepreneurship, growth, product management, recruiting, or in-depth discussions of where tech industry sectors are heading.

We’re only interested in columns that offer actionable advice written by authors who have direct experience in the topic they’re covering.

Focus

- TechCrunch+ contributors have a lot of leeway to explore their topics. Since we’re writing for informed audiences, we encourage more length than may be normal on other sites — 1,000–1,500 words is typical, but we are flexible.

- We do not accept guest posts that are based in whole or in part on interviews.

- We encourage contributors to mention their companies in passing to establish their credentials, but we don’t accept posts in which they center their company’s products/services as a preferred solution.

Review process

- An editor reviews all submissions, but due to the high volume of emails we receive, we are not able to reply to everyone who contacts us.

- Please email drafts to guestcolumns@techcrunch.com as .TXT., .DOC, or .PDF attachments; shared Google documents; or in the body of your email.

- We accept detailed outlines or completed drafts for consideration.

- Only submit images you own or have the right to use (.JPEG, .GIF, .PNG, or .TIFF at least 1,000px wide).

Syndication

- Authors are free to republish the full article anywhere they please one week after we run the story, as long as they link to and mention the original article.

- We do not republish articles that have been published elsewhere first.

Paywall

- We place the TechCrunch+ paywall after the first few hundred words of the article body, so please provide a preview of the key information from the article in that space.

- After we’ve published, we’ll share a link and a PDF with the complete text. Reprints are available for purchase via a third party.

Deadline

- There is not one unless both parties agree to something. We’ll typically schedule the article to run within a week or so once it nears the final stages of production.

Conflicts of interest

- We want readers to use our information to make big decisions, which means they must be able to trust what we are providing them. So, we minimize conflicts of interest where possible and are fully transparent where they occur.

- Please note anywhere you do have potential conflicts. In fact, we’ve found that writing about your own experiences or decisions helps reinforce your credibility.

Generative AI

- We do not accept contributions that include text or images produced via generative artificial intelligence.

How to submit a TechCrunch Opinion guest column

We publish TechCrunch Opinion guest columns without a paywall because they address technology-related topics in the public interest.

Our goal is to provide readers material written by authors who represent a broad array of diverse opinions and lived experiences. Topics for these columns must be timely, convincingly in the public interest, and not something that our staff could write about directly.

Beyond stating the author’s opinion, these columns should give readers a better understanding of the subject under discussion and help them understand how it’s relevant to their interests.

Focus

- Guest contributors have a lot of leeway to explore their topics. Since we’re writing for informed audiences, we encourage more length than may be normal on other sites.

- We are somewhat flexible, but most TechCrunch Opinion articles are 750–1,000 words.

- We do not accept guest editorials that are based in whole or in part on interviews.

Review process

- An editor reviews all submissions, but due to the high volume of emails we receive, we are not able to reply to everyone who contacts us.

- Please email drafts to opinions@techcrunch.com as .TXT., .DOC, or .PDF attachments; shared Google documents; or in the body of your email.

- We accept detailed outlines or completed drafts for consideration.

- Only submit images you own or have the right to use (.JPEG, .GIF, .PNG, or .TIFF at least 1,000px wide).

Editing

- If accepted, we will edit submissions for clarity and fact-check them prior to publication. If we have substantial edits or questions, we’ll contact contributors before publishing.

- We only publish information we can verify, so please provide links to primary sources.

Syndication

- Authors are free to republish the full article elsewhere one week after we run their post on TechCrunch.com, as long as they link back to and mention the original article.

- We do not republish articles that have been published elsewhere first.

Paywall

- We publish TechCrunch Opinion articles without a paywall. They’re always free to read.

Deadline

- We don’t assign deadlines unless both parties agree to something. We’ll typically schedule an article to run within a week or so after we accept it for publication.

Conflicts of interest

- We want readers to use our site to make major decisions more successfully, which means they need to be able to trust the information we are providing them.

- We will minimize conflicts of interest where possible and are fully transparent where they occur. If you have a personal or financial relationship with a topic you write about, please let us know.

- We’ve found that writing about your own experiences or decisions helps reinforce your credibility to our audience.

Generative AI

- We do not accept contributions that include text or images produced via generative artificial intelligence.

We don’t work with content marketers, SEO specialists or freelancers

TechCrunch’s guest contributor program does not accept submissions from freelance content writers, content marketers, bloggers, SEO specialists, or other people who distribute content on behalf of third parties, and we’ve never charged a fee for publishing guest articles.

If you’re interested in placing paid, sponsored content on TechCrunch, please complete this form and someone will be in touch if they are interested in working with you.

How to submit a freelance article

To pitch a freelance piece to TechCrunch, contact freelance@techcrunch.com.

Pieter Abbeel and Ken Goldberg on generative AI applications

I’ll admit that I’ve tiptoed around the topic a bit in Actuator due to its sudden popularity (SEO gods be damned). I’ve been in this business long enough to instantly be suspicious of hype cycles. That said, I totally get it this time around.

While it’s true that various forms of machine learning and AI touch our lives every day, the emergence of ChatGPT and its ilk present something far more immediately obvious to the average person. Typing a few commands into a dialog box and getting an essay, a painting or a song is a magical experience – particularly for those who haven’t followed the minutiae of this stuff for years or decades.

If you’re reading this, you were probably aware of these notions prior to the last 12 months, but try to put yourself in the shoes of someone who sees a news story, visits a site and then seemingly out of nowhere, a machine is creating art. Your mind would be, in a word, blown. And rightfully so.

Over the past few months, we’ve covered a smattering of generative AI-related stories in Actuator. Take last week’s video of Agility using generative AI to tell Digit what to do with a simple verbal cue. I’ve also begun speaking to founders and researchers about potential applications in the space. The sentiment shifted quite quickly from “this is neat” to “this could be genuinely useful.”

Learning has, of course, been a giant topic in robotics for decades now. It also, fittingly, seems to be where much of the potential generative AI research is heading. What if, say, a robot could predict all potential outcomes based on learning? Or how about eliminating a lot of excess coding by simply telling a robot what you want it to do? Exciting, right?

When I’m fascinated by a topic in the space, I always do the same thing: find much smarter people to berate with questions. It’s gotten me far in life. This time out, our lucky guests are a pair of UC Berkeley professors who have taught me a ton about the space over the years (I recommend getting a few for your rolodex).

Pieter Abbeel is the Director of the Berkeley Robot Learning Lab and co-founder/President/Chief Scientist of Covariant, which uses AI and rich data sets to train picking robots. Along with helping Abbeel run BAIR (Berkeley AI Research Lab), Ken Goldberg is the school’s William S. Floyd Jr. Distinguished Chair in Engineering and the Chief Scientist and co-founder of Ambi Robotics, which uses AI and robotics to tackle package sorting.

Pieter Abbeel

Image Credits: TechCrunch

Let’s start with the broad question of how you see generative AI fitting into the broader world of robotics.

There are two big trends happening at the same time. There is a trend of foundation models and a trend of generative AI. They are very intertwined, but they’re distinct. A foundation model is a model that is trained on a lot of data, including data that is only tangentially possibly related to what you care about. But it’s still related. But by doing so, it gets better at the things you care about. Essentially, all generative models are foundation models, but there are foundation models that are not generative AI models, because they do something else. The Covariant Brain is a foundation model, the way it’s set up right now. Since day one, back in 2017, we’ve been training all the items that we could possibly run across. But in any given deployment, we only care about, for example, electrical supplies, or we only care about apparel, or we only care about groceries only.

It’s a shift in paradigm. Traditionally, people would have said, ‘Oh, if you’re going to do groceries, groceries, groceries, groceries. That’s all your training, a neural network based on groceries. That’s not what we’ve been doing. It’s all about chasing the long tail of edge cases. The more things you’ve seen, the better you can make sense of an edge case. The reason it works is because the neural networks become so big. If you know networks are small, all this tangentially related stuff is going to perturb your knowledge of the most important stuff. But there’s so much they can keep absorbing. It’s like a massive sponge keeps absorbing things. You’re not hurting anything by putting in this extra stuff. You’re actually helping a little bit more by doing that.

It’s all about learning, right? It’s a big thing everyone is trying to crack right now. The broader foundation model is to train it on as large a dataset as possible.

Yes, but the key is not just large. It’s very diverse. I’m not just doing only groceries. I’m gonna pick groceries, but I’m also training on all the other stuff that I might pick at another warehouse into the same foundation model to have a general understanding of all objects, which is a better way to learn not only about groceries. You never know what will pop up in the mix of those groceries. There’s always going to be a new item. You never have everything covered. So, you need to generalize to new items. Your chance of generalizing to new items well, the probability is higher if you’ve covered a very wide spectrum of other things.

The larger the neural network, the more it understands the world, broadly speaking.

Yeah. That really is the key. That is what’s going to unlock AI-powered robotics applications, whether it’s picking or self-driving and so forth – it’s the ability to absorb so much. But if we switch gears and think about generative AI specifically, there are thing you can imagine it playing a role in. If you think of generative, what does it mean compared to previous generations of AI? At its core, it means it’s generative data. But how is that different from generating labels? If I give it an image and it says “cat,” that’s also generating data. It’s just that it’s able to generate more data. Again, that relates to the neural network. The neural networks are larger, which allows them not only to analyze larger things, but to generate larger things in a consistent way.

In robotics, there are a few angles. One is building a deeper understanding of the world. Instead of me saying, “I’m going to label data to teach the neural network,” I can say, “I’m going to record a video of what happens,” and my generative model needs to predict the next frame, the next frame, the next frame. And by forcing it to understand how to predict the future, I’m forcing it to understand how the world works.

Oftentimes when I talk to people about the different forms of learning, it’s almost discussed as though they’re in conflict with each other, but in this case, it’s two different kinds of learning effectively working in tandem.

Yes. And again, because the networks are so large, we train neural networks to predict future frames. By doing that, in addition to training them to output the optimal actions for a certain task, they actually learn to output the actions much quicker, from far less data. You’re giving it two tasks and it learns how to do the one task, because the two tasks are related. Predicting the next frame is such a difficult thinking exercise, you force it to think through so much more to predict actions that it predicts actions much, much faster.

In terms of a practical real-world application – say, in an industrial setting, learning to screw something in – how does learning to predict the next thing inform its action?

This is a work in progress. But the idea is that there are different ways of teaching a robot. You can program it. You can give it demonstrations. It can learn from reinforcement, where it learns from its own trial and error. Programming has seen its limitations. It’s not really going beyond what we’ve seen for a long time in car factories.

Let’s say I was a self-driving car. If my robot can predict the future at all times, it can do two things. The first is having a deep understanding of the world and with a little extra learning, pick the right action. In addition, it has another option. If it wants to do a lot of work in the moment, it can simulate scenarios. It can also simulate the traffic around it. That’s where this is headed.

These are all of the possible outcomes I can see. This is the best outcome, I’m going to do that.

Correct. There are other things we can do in generative AI with robotics. Google has had some results, where what they said is, what if we bolt some things together. One of the big challenges with robotics has been high-level reasoning. There are two challenges: 1. how do you do the actual motor skill and 2. what should you actually do. If someone asked you, ‘make me scrambled eggs,’ what does that even mean? And that’s where generative AI models come in handy in a different way. They’re pre-trained. The simplest version just uses language. Making scrambled eggs, you can break that down into:

- Go get the eggs from the fridge

- Get the frying pan

- Get the butter.

The robot can go to the fridge. It might ask what to do with the fridge, and then the model says:

- Go to the fridge

- Take the thing out of the fridge

The whole thing in robotics has traditionally been logic or task planning, and people who have to program it in somehow have to describe the world in terms of logical statements that somehow come after each other, and so forth. The language models kind of seem to take care of it in a beautiful way. That’s unexpected to many people.

Ken Goldberg

Image Credits: Kimberly White (opens in a new window) / Getty Images

How do you see generative AI’s potential in robotics?

The core concept here is the transformer. The transformer network is very interesting, because it looks at sequences. It’s able to essentially get very good at predicting the next item. It’s astoundingly good at that. It works for words, because we only have a relatively small number of words in the English language. At best, the Oxford English Dictionary I think has about half a million. But you can get by with far fewer than that. And you have plenty of examples, because every string of text gives you an example of words and how they’re put together. It’s a beautiful sweet spot. You can show it a lot of examples, and you have relatively few choices to make at every step. It turned out it can predict that extremely well.

The same is true to sequences of sounds, so this can also be used for audio processing and prediction. You can train it very similar. Instead of words, you have sequences of phonemes coming in. You give it a lot of strings of music or voice, and then it will be able to predict the next sound signal or phoneme. It can also be used for images. You have a string of images and it can be used to predict the next image.

Pieter was talking about using video to predict what’s going to happen in the next frame. It was effectively like having the robot think in video.

Kind of, yeah. If you can now predict the next video, the next thing you can add in there is the control. If I add my control in there, I can predict what comes out if I do action A or B. I can look at all of my actions and pick the action that gets me closer to what I want to see. Now I want to get it to the next level, which is where I look at the next scene and have voxels. I have these three-dimensional volumes. I want to train it on those and say, “here’s my current volume, and here’s the volume I want to have. What actions do I need to perform to get me there?”

When you’re talking about volumes, you mean where the robot exists in space?

Yeah, or even what’s happening in front of you. If you want to clean up the dishes in front of you, the volume is where all those dishes are. Then you say, “what I want is a clear table with none of those dishes on it.” That’s the volume I want to get to, so now I have to find the sequence of actions that will get from the initial state, which is what I’m looking at now, to the final state, which is where I have no dishes anymore.

Based on videos the robot has been trained on, it can extrapolate what to do.

In principle, but not from videos of people. That’s problematic. Those videos are shot from an odd angle. You don’t know what motions they’re doing. It’s hard for the robot to know that. What you do is essentially have the robot self-learn by having the camera. The robot tries things out and learns over time.

A lot of the applications I’m hearing about are based around language commands. You say something, the robot is able to determine what you mean and execute it in real-time.

That’s a different thing. We now have a tool that can handle language very well. And what’s cool about it is that it gives you access to the semantics of a scene. A very well known paper from Google did the following: You have a robot and you say “I just spilled something, and I need help to clean it up.” Typically the robot wouldn’t know what to do with that, but now you have language. You run that into ChatGPT and it generates: “get a sponge. Get a napkin. Get a cloth. Look for the spilled can, make sure it can pick that up.” All of that stuff can come out. What they do is exactly that: They take all of that output and they say, “is there a sponge around? Let me look for a sponge.”

The connection between semantic of the world – a spill and a sponge – ChatGPT is very good at that. That fills a gap that we’ve always had. It’s called the Open World Problem. Before that, we had to program in every single thing it was going to encounter. Now we have another source that can make these connections that we couldn’t make before. That’s very cool. We have a project on that’s called Language Embedded Radiance Field. It’s brand new. It’s how to use that language to figure out where to pick things up. We say, “here’s a cup. Pick it up by the handle,” and it seems to be able to identify where the handle is. It’s really interesting.

You’re obviously very smart people and you know a lot about generative AI, so I’m curious where the surprise comes in.

We always get surprised when these systems do things that we didn’t anticipate. That’s when robotics is at its best, when you give it a setup, and it suddenly does something.

It does the right thing for once.

Exactly! That’s always a surprise in robotics!

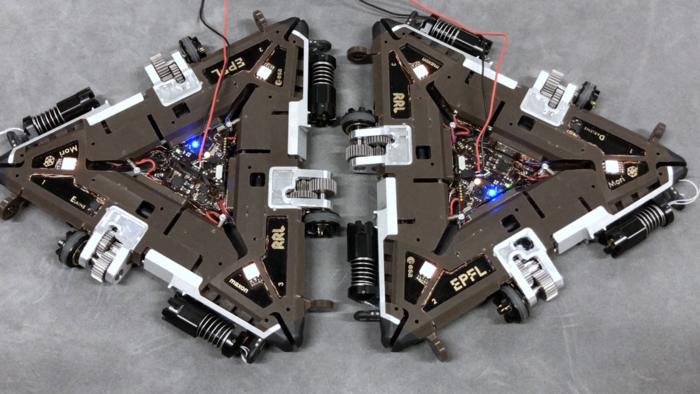

Postscript

One more bit of generative AI before we move on for the week. Researchers at Switzerland’s EPFL University are highlighting robots making robots. I’m immediately reminded of RepRap, which gave rise to the desktop 3D printing space. Launched in 2005, the project started with the mission of creating “humanity’s first general-purpose, self-replicating manufacturing machine.” Effectively, the goal was to create a 3D printer that could 3D-print itself.

For this project, the researchers used ChatGPT to generate the design for a product-picking robot. The team suggests language models “could change the way we design robots, while enriching and simplifying the process.”

Computational Robot Design & Fabrication Lab head Josie Hughes adds, “Even though Chat-GPT is a language model and its code generation is text-based, it provided significant insights and intuition for physical design, and showed great potential as a sounding board to stimulate human creativity.”

Image Credits: EPFL

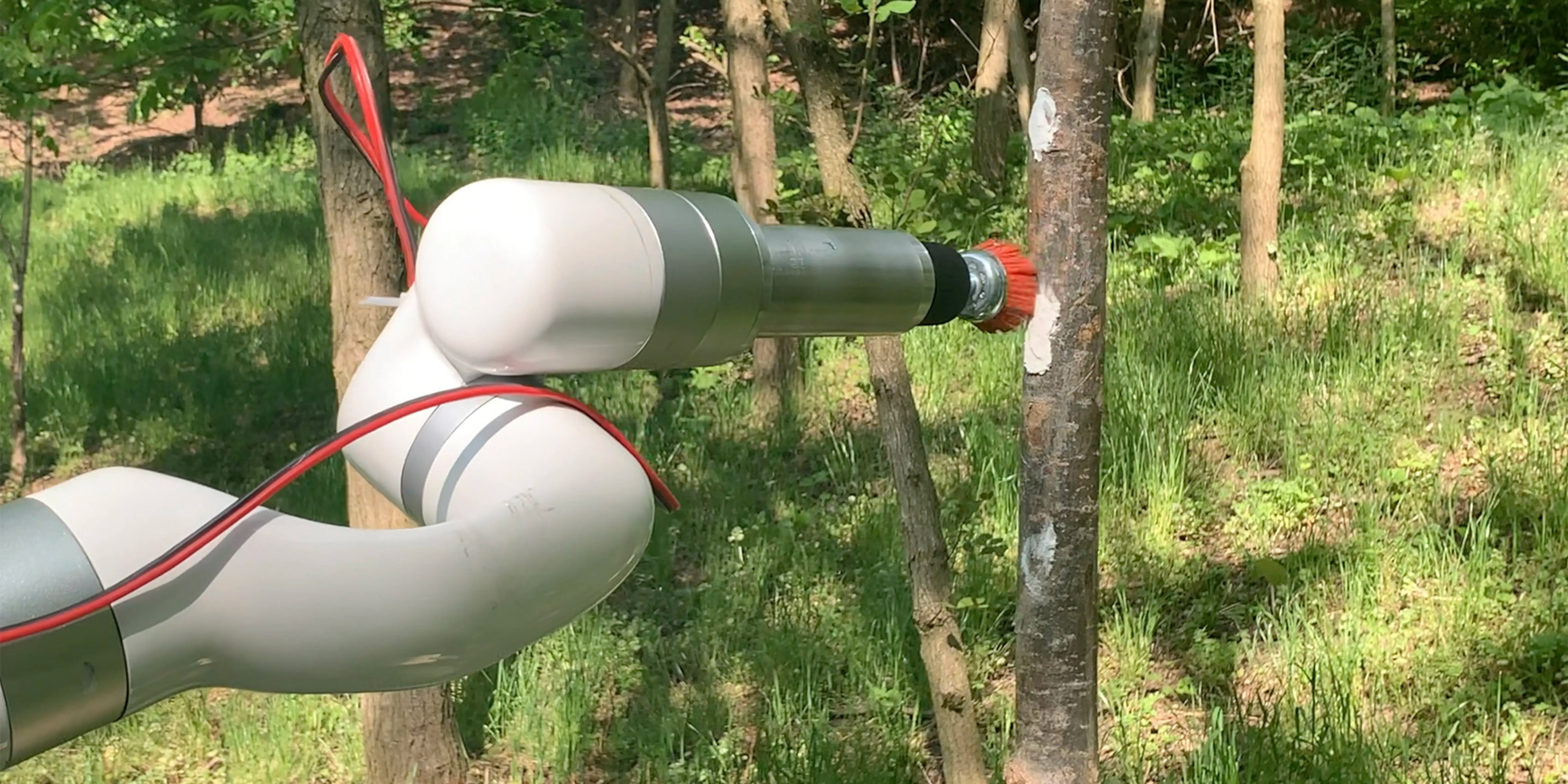

Some light lanternfly extermination

An interesting pair of research with some common DNA also crossed my desk this week. Anyone who’s seen a spotted lanternfly in person knows how beautiful they can be. The China native insect flutters around on wings that flash sharp swaths of red and blue. Anyone who’s seen a spotted lanternfly on the Eastern U.S. seaboard, however, knows that they’re invasive species. Here in New York, there’s a statewide imperative to destroy the buggers on command.

The CMU Robotics Institute designed TartanPest as part of Farm-ng’s Farm Robotics Challenge. The system features a robotic arm mounted atop a Farm-ng tractor, which is designed to spot and spray masses of lanternfly eggs – destroying the bugs before they hatch. The robot, “uses a deep learning model refined on an augmented image data set created from 700 images of spotted lanternfly egg masses from iNaturalist to identify them and scrape them off surfaces.

For the record, nowhere in Asimov’s robotics laws are lanternflies mentioned.

Image Credits: CMU

Reforestation

Meanwhile, ABB this week showcased what it calls “the world’s most remote robot.” A product of a collaboration with the nonprofit group JungleKeepers, the system effectively uses an ABB arm to automate seed collection, planting and watering in a bid to promote reforestation.

There’s a big open question around efficacy and scalability, and this is certainly a nice PR play from the automation giant, but if this thing can make even small progress amid rapid deforestation, I’m all for it.

Image Credits: ABB

Sweaters for robots

One more CMU project that I missed a couple of weeks back. RobotSweater is not a robotic sweater, but rather a robot in a sweater (SweaterRobot might have been more apt). Regardless, the system uses knitted textiles as touch-sensitive skin. Per the school:

Once knitted, the fabric can be used to help the robot “feel” when a human touches it, particularly in an industrial setting where safety is paramount. Current solutions for detecting human-robot interaction in industry look like shields and use very rigid materials that Liu notes can’t cover the robot’s entire body because some parts need to deform.

Once attached to the robot (in this case, an industrial arm), the e-textile can sense the force, direction and distribution of touch, sensitivities that could help these systems more safely work alongside people.

“In their research, the team demonstrated that pushing on a companion robot outfitted in RobotSweater told it which way to move or what direction to turn its head,” CMU says. “When used on a robot arm, RobotSweater allowed a push from a person’s hand to guide the arm’s movement, while grabbing the arm told it to open or close its gripper.”

Image Credits: CMU

Robotic origami

Capping off an extremely research heavy edition of Actuator – and returning once again to Switzerland’s EPFL – is Mori3. The little robot is made up of a pair of triangles that can form into different shapes.

“Our aim with Mori3 is to create a modular, origami-like robot that can be assembled and disassembled at will depending on the environment and task at hand,” Reconfigurable Robotics Lab director, Jamie Paik. “Mori3 can change its size, shape and function.”

The system recalls a lot of fascinating work concurrently happening in the oft-intersecting fields of modular and origami robotics. The systems communicate with one another and attach to form complex shapes. The team is targeting space travel as a primary application for this emerging technology. Their small, flat design make them much easier to pack on a shuttle than a preassembled bot. And let’s be honest, no one wants to spend a ton of time piecing together robots like Ikea furniture after blasting off.

“Polygonal and polymorphic robots that connect to one another to create articulated structures can be used effectively for a variety of applications,” says Paik. “Of course, a general-purpose robot like Mori3 will be less effective than specialized robots in certain areas. That said, Mori3’s biggest selling point is its versatility.”

Image Credits: EPFL

3…2…1…we have Actuator.

Pieter Abbeel and Ken Goldberg on generative AI applications by Brian Heater originally published on TechCrunch

TechCrunch+’s holiday reading list

It’s Memorial Day here in the United States, which means that the flow of tech news is slower than normal. This might leave you feeling bereft, adrift without the usual 3,239 individual items that happen daily in startup land. But have no fear, for TechCrunch+ is here with a quick rewind of our work from May.

Consider this a roll call of some of our favorite journalism from the last few weeks, packaged in a simple format for your quick perusal and ingestion. To the lists!

- News you wished you had read 10 years ago: Haje has been a busy bee this month, writing about how to write the perfect cold email, how to hire early employees, how to get warm intros to investors (he’s hot then he’s cold, etc.), and even more on the warm intro front. We also have notes from a founder on how they bootstrapped to $40 million ARR and key metrics from an investor for cybersecurity product managers (cybersecurity is a topic Alex has been working on as well). Oh, and if you want to get your board meeting done in an hour, read this.

TechCrunch+’s holiday reading list by Miranda Halpern originally published on TechCrunch

Say hello to the TechCrunch+ Cyber Monday sale!

To celebrate Cyber Monday, TechCrunch+ is having a sale! Until November 30th, take 25% off a subscription to secure access to all our work.

TechCrunch+ is TechCrunch’s founder-focused analytical arm. We cover the trends behind the news, dig into venture capital numbers, report on how startups are executing today, and share advice and insight from tech operators. We’d love for you to join us.

You may have noticed more TechCrunch+ material on TechCrunch proper in the last few quarters. That’s thanks to our recently expanded staff. TechCrunch+ is obsessed with venture capital, climate, crypto, and reporting on who gets to build new companies.

We’re working to cover the startup market from a host of perspectives. Our goal is to better understand, to quote something dear to my heart, the numbers and nuance behind the headlines.

We have big plans for 2023, including more reporters, more reporting, and more repartee. TechCrunch+ is a big tent — and one that we want to expand even further. So take advantage of our biggest sale of the year! We’re already hard at work to ensure you stay super informed about startups, tech, and venture.

Hugs, and happy holidays from myself and the TechCrunch+ crew,

Alex Wilhelm, EiC, TC+

Say hello to the TechCrunch+ Cyber Monday sale! by Alex Wilhelm originally published on TechCrunch

A scale story: TechCrunch’s parent company links up with Taboola

Folks often ask if Crunchbase and TechCrunch are still the same company (nope). Many express surprise that AOL was once this publication’s sole parent (yep). The saga of Who Owns TechCrunch is actually somewhat interesting. Various corporate developments over the last decade saw TechCrunch trade hands several times, including our most recent ejection from Verizon (long story) into the arms of private equity (shorter story).

Today we’re part of a reconstituted Yahoo, an entity that combines its historical assets — sans Alibaba — with AOL and other properties including this publication. I bring all that up because our parent company is in the news today. So much so that we’re pushing the value of a public company sharply higher by dint of our partnering with it, and taking a sizable stake in its equity at the same time.

The Exchange explores startups, markets and money.

Read it every morning on TechCrunch+ or get The Exchange newsletter every Saturday.

Because my employer is about to own just under a fourth of Taboola, I want to rewind the clock a bit today and recall how we wound up in a world where both Taboola and Outbrain — online advertising companies that you are familiar with, and have at times collected criticism — are public companies.

This should be lightweight and fun. Frankly, before today, I had never read a Taboola or Outbrain earnings report. We will explore together! Into the numbers!

This should be lightweight and fun. Frankly, before today, I had never read a Taboola or Outbrain earnings report. We will explore together! Into the numbers!

A merger that didn’t

A scale story: TechCrunch’s parent company links up with Taboola by Alex Wilhelm originally published on TechCrunch

Connect with Mayfield, JETRO, Toptal and more at TechCrunch Disrupt

Mark your calendars, startup fans, because TechCrunch is returning — live and in person — to the beautiful City by the Bay to host our flagship event, TechCrunch Disrupt, on October 18–20 at the Moscone West Convention Center followed by an online recap event on October 21.

TC Disrupt is the grande dame of tech conferences for many reasons, and today we’d like to highlight some of the companies that you’ll be able to engage with at the event. Every year we’re fortunate to join forces with great companies that are committed to supporting early-stage startups. You’ll be able to take advantage of their resources and connections and even take away a chunk of knowledge from real-life case studies and startup educational content. Their participation elevates, engages and supports early-stage founders.

These companies also come to Disrupt to connect and explore opportunities with other companies within the startup ecosystem. They form alliances, forge partnerships, and look for potential investments, and sometimes they become a startup’s new client.

I go to TechCrunch Disrupt to find new and interesting companies, make new business connections and look for startups with investment potential. It’s an opportunity to expand my knowledge and inform my work. — Rachael Wilcox, creative producer, Volvo Cars.

Breakout sessions are a great feature at Disrupt that allow attendees to meet in smaller settings. Xsolla will be presenting “Expand to New Platforms and Take Your Games Direct-to-Consumer,” while Mayfield‘s session “Getting to Yes and What Happens Next: An Unfiltered Chat with a Top VC” is one not to miss. Wells Fargo will also be hosting a breakout session on “How Banks and Fintech Startups Can Effectively Co-Thrive”.

And for attendees who want to get even more face-to-face time, roundtables provide a perfect setting for small-group discussions. Mayfield will be hosting a session on “Saving the World: The Playbook for Building Planetary Health Unicorns” and will also be exploring “Why nine out of ten startups fail.” Virtual Gurus will be leading a roundtable as well.

What would Disrupt be without a signature satellite party – be on the lookout for an invite from Singapore Global Network to join them for a fun party.

Exhibiting on the show floor are Dolby.io, Justworks, Toptal, JetBrains, JETRO, Kapstan, NeoSoft, Remote Tech Services, Nano Nuclear Energy, Inc, and Remotebase, Dryvebox all of whom you’ll be able to connect with.

And what would Disrupt be without a party? Terra.do will be hosting a signature reception at the show.

TechCrunch Disrupt takes place in San Francisco on October 18–20 with an online day on October 21.

Is your company interested joining us at TechCrunch Disrupt? Contact our sponsorship sales team by filling out this form.

Connect with Mayfield, JETRO, Toptal and more at TechCrunch Disrupt by Lauren Simonds originally published on TechCrunch

Connect with Google Cloud for Startups, Blackstone Launchpad and more at TechCrunch Disrupt

Mark your calendars, startup fans, because TechCrunch is returning — live and in person — to the beautiful City by the Bay to host our flagship event, TechCrunch Disrupt, on October 18–20 at the Moscone West Convention Center followed by an online recap event on October 21.

TC Disrupt is the grande dame of tech conferences for many reasons, and today we’d like to highlight some of the companies that you’ll be able to engage with at the event. Every year we’re fortunate to join forces with great companies that are committed to supporting early-stage startups. You’ll be able to take advantage of their resources and connections and even take away a chunk of knowledge from real-life case studies and startup educational content. Their participation elevates, engages and supports early-stage founders.

These companies also come to Disrupt to connect and explore opportunities with other companies within the startup ecosystem. They form alliances, forge partnerships, and look for potential investments and sometimes they become a startup’s new client.

I go to TechCrunch Disrupt to find new and interesting companies, make new business connections and look for startups with investment potential. It’s an opportunity to expand my knowledge and inform my work. — Rachael Wilcox, creative producer, Volvo Cars.

Google Cloud for Startups is coming to Disrupt to talk about “How to Supercharge Growth, Utilize Cloud, and Reduce Burn.” Blackstone Launchpad joins us as the title sponsor for the Disrupt Student Pitch Competition. Joining the exhibit hall, we’ve got AppMaster, Codahead, and Eat The Code. Rounding out the Disrupt Breakout Stage, DevRev is coming to the show with a breakout session on “How to Achieve Product Market Fit (PMF),” and FIS will be hosting “Fintechs Walking the Line: Ecosystems, Symbiotic Relationships with Banks, and What’s Next.”

Disrupt wouldn’t be Disrupt without a signature roundtable, and InterSystems is coming in hot with two roundtables: “Breaking Into the Healthcare Monolith” and “What the Heck Is Interoperability Anyways?”

Fast Forward will be hosting a startup pavilion on the exhibit floor, and finally, Infobip will be expoing on the floor as well with some great interactive experiences.

TechCrunch Disrupt takes place in San Francisco on October 18–20 with an online day on October 21.

Is your company interested joining us at TechCrunch Disrupt? Contact our sponsorship sales team by filling out this form.

Connect with Google Cloud for Startups, Blackstone Launchpad and more at TechCrunch Disrupt by Lauren Simonds originally published on TechCrunch

Revolut confirms cyberattack exposed personal data of tens of thousands of users

Fintech startup Revolut has confirmed it was hit by a highly targeted cyberattack that allowed hackers to access the personal details of tens of thousands of customers.

Revolut spokesperson Michael Bodansky told TechCrunch that an “unauthorized third party obtained access to the details of a small percentage (0.16%) of our customers for a short period of time.” Revolut discovered the malicious access late on September 10 and isolated the attack by the following morning.

“We immediately identified and isolated the attack to effectively limit its impact and have contacted those customers affected,” Bodansky said. “Customers who have not received an email have not been impacted.”

Revolut, which has a banking license in Lithuania, wouldn’t say exactly how many customers were affected. Its website says the company has approximately 20 million customers; 0.16% would translate to about 32,000 customers. However, according to Revolut’s breach disclosure to the authorities in Lithuania, first spotted by Bleeping Computer, the company says 50,150 customers are impacted by the breach, including 20,687 customers in the European Economic Area and 379 Lithuanian citizens.

Revolut also declined to say what types of data were accessed but told TechCrunch that no funds were accessed or stolen in the incident. In a message sent to affected customers posted to Reddit, the company said that “no card details, PINs or passwords were accessed.” However, the breach disclosure states that hackers likely accessed partial card payment data, along with customers’ names, addresses, email addresses, and phone numbers.

The disclosure states that the threat actor used social engineering methods to gain access to the Revolut database, which typically involves persuading an employee to hand over sensitive information such as their password. This has become a popular tactic in recent attacks against a number of well-known companies, including Twilio, Mailchimp and Okta.

But Revolut warned that the breach appears to have triggered a phishing campaign, and urged customers to be careful when receiving any communication regarding the breach. The startup advised customers that it will not call or send SMS messages asking for login data or access codes.

As a precaution, Revolut has also formed a dedicated team tasked with monitoring customer accounts to make sure that both money and data are safe.

“We take incidents such as these incredibly seriously, and we would like to sincerely apologize to any customers who have been affected by this incident as the safety of our customers and their data is our top priority at Revolut,” Bodansky added.

Last year Revolut raised $800 million in fresh capital, valuing the startup at more than $33 billion.

Revolut confirms cyberattack exposed personal data of tens of thousands of users by Carly Page originally published on TechCrunch