The big social networks and video games have failed to prioritize user well-being over their own growth. As a result, society is losing the battle against bullying, predators, hate speech, misinformation and scammers. Typically, when a whole class of tech companies has a dire problem it can’t cost-effectively solve in-house, a software-as-a-service provider emerges to fill the gap, as happened with web hosting and payment processing. So along comes AntiToxin Technologies, a new Israeli startup that wants to help web giants fix their abuse troubles with its safety-as-a-service.

It all started on Minecraft. AntiToxin co-founder Ron Porat is a cybersecurity expert who’d started the popular ad blocker Shine. Yet right under his nose, one of his kids was being mercilessly bullied on the hit children’s game. If even the most internet-savvy parents were being surprised by online abuse, Porat realized, the issue was too big to be addressed by victims trying to protect themselves. The platforms had to do more, and research confirmed it.

A recent Ofcom study found almost 80% of children had a potentially harmful online experience in the past year. Indeed, 23% said they’d been cyberbullied, and 28% of 12- to 15-year-olds said they’d received unwelcome friend or follow requests from strangers. A Ditch The Label study found that of 12- to 20-year-olds who’d been bullied online, 42% were bullied on Instagram.

Unfortunately, the massive scale of the threat combined with a late start on policing by top apps makes progress tough without tremendous spending. Facebook tripled the headcount of its content moderation and security team, taking a noticeable hit to its profits, yet toxicity persists. Other mainstays like YouTube and Twitter have yet to make concrete commitments to safety spending or staffing, and the result is non-stop scandals of child exploitation and targeted harassment. Smaller companies like Snap or Fortnite-maker Epic Games may not have the money to develop sufficient safeguards in-house.

“The tech giants have proven time and time again we can’t rely on them. They’ve abdicated their responsibility. Parents need to realize this problem won’t be solved by these companies,” says AntiToxin CEO Zohar Levkovitz, who previously sold his mobile ad company Amobee to Singtel for $321 million. “You need new players, new thinking, new technology. A company where ‘Safety’ is the product, not an after-thought. And that’s where we come in.” The startup recently raised a multimillion-dollar seed round from Mangrove Capital Partners and is reportedly preparing a Series A in the tens of millions of dollars.

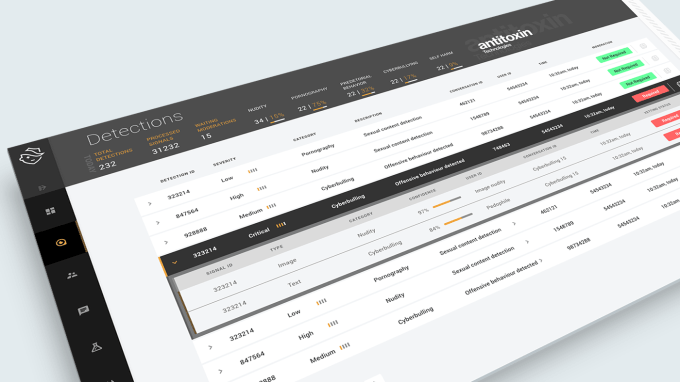

AntiToxin’s technology plugs into the backends of apps with social communities whose members broadcast to or message each other and are thereby exposed to abuse. AntiToxin’s systems privately and securely crunch all the available signals regarding user behavior and policy violation reports, from text to videos to blocking. They can then flag a wide range of toxic actions and let the client decide whether to delete the activity, suspend the responsible user or proceed some other way based on its terms and local laws.

Through the use of artificial intelligence, including natural language processing, machine learning and computer vision, AntiToxin can identify the intent of behavior to determine if it’s malicious. For example, the company tells me it can distinguish between a married couple consensually exchanging nude photos on a messaging app versus an adult sending inappropriate imagery to a child. It also can determine if two teens are swearing at each other playfully as they compete in a video game or if one is verbally harassing the other. The company says that beats using static dictionary blacklists of forbidden words.
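To see why a static word list struggles with the distinctions described above, here is a minimal Python sketch of the blacklist approach the company contrasts itself with. It is not AntiToxin’s system; the word list, the `blacklist_flag` function and the sample messages are all hypothetical, chosen only to illustrate the limitation.

```python
# Hypothetical static blacklist; the words are illustrative assumptions only.
BLACKLIST = {"loser", "trash", "noob"}


def blacklist_flag(message: str) -> bool:
    """Flag a message if any punctuation-stripped, lowercased token is blacklisted."""
    tokens = (t.strip(".,!?'\"").lower() for t in message.split())
    return any(t in BLACKLIST for t in tokens)


# Friendly trash talk between two players still trips the filter...
print(blacklist_flag("gg, you absolute noob! rematch?"))  # True
# ...while a menacing message with no listed words slips through.
print(blacklist_flag("nobody wants you here. log off or else."))  # False
```

The filter sees only words, not intent or context, so it produces both a false positive and a miss here, which is the gap intent-based detection aims to close.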

AntiToxin is under NDA, so it can’t reveal its client list, but it claims that recent media attention and looming regulation regarding online abuse have ramped up inbound interest. Eventually the company hopes to build better predictive software to identify users who’ve shown signs of increasingly worrisome behavior so their activity can be more closely moderated before they lash out. And it’s trying to build a “safety graph” that will help it identify bad actors across services so they can be broadly deplatformed, similar to the way Facebook uses data on Instagram abuse to police connected WhatsApp accounts.

“We’re approaching this very human problem like a cybersecurity company, that is, everything is a Zero-Day for us,” says Levkovitz, discussing how AntiToxin indexes new patterns of abuse it can then search for across its clients. “We’ve got intelligence unit alums, PhDs and data scientists creating anti-toxicity detection algorithms that the world is yearning for.” AntiToxin is already having an impact. TechCrunch commissioned it to investigate a tip about child sexual imagery on Microsoft’s Bing search engine. We discovered Bing was actually recommending child abuse image results to people who’d conducted innocent searches, leading Bing to make changes to clean up its act.

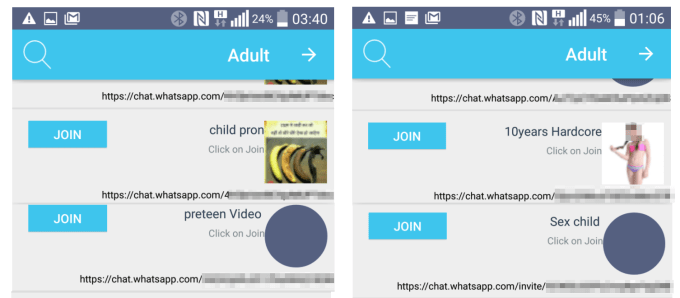

AntiToxin identified publicly listed WhatsApp Groups where child sexual abuse imagery was exchanged

One major threat to AntiToxin’s business is what’s often seen as boosting online safety: end-to-end encryption. AntiToxin claims that when companies like Facebook expand encryption, they’re purposefully hiding problematic content from themselves so they don’t have to police it.

Facebook claims it can still use metadata about connections on its already encrypted WhatsApp network to suspend those who violate its policies. But AntiToxin provided research to TechCrunch for an investigation that found child sexual abuse imagery sharing groups were openly accessible and discoverable on WhatsApp, in part because encryption made them hard for WhatsApp’s automated systems to hunt down.

AntiToxin believes abuse would proliferate if encryption becomes a wider trend, and it claims the harm this causes outweighs fears about companies or governments surveilling unencrypted transmissions. It’s a tough call. Political dissidents, whistleblowers and perhaps the whole concept of civil liberty rely on encryption. But parents may see sex offenders and bullies as a more dire concern, one that’s reinforced by platforms having no idea what people are saying inside chat threads.

What seems clear is that the status quo has got to go. Shaming, exclusion, sexism, grooming, impersonation and threats of violence have started to feel commonplace. A culture of cruelty breeds more cruelty. Tech’s success stories are being marred by horror stories from their users. Paying to pick up new weapons in the fight against toxicity seems like a reasonable investment to demand.

The problem is that this philosophy is hard to monetize for a social network that needs to maximize broadcasted content and engagement to score ad views. But it’s easy to monetize if you sell the phone and then let people be as private as they want on it. That’s why today at