New research by the Oxford Internet Institute has found that social media manipulation is getting worse, with rising numbers of governments and political parties making cynical use of social media algorithms, automation and big data to manipulate public opinion at scale — with hugely worrying implications for democracy.

The report found that computational propaganda and social media manipulation have proliferated massively in recent years. They are now prevalent in 70 countries, up from 28 two years ago: an increase of 150%.
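The 150% figure follows directly from the two country counts the report gives. As a quick check, here is a minimal sketch in Python; the function name `percent_increase` is our own, purely illustrative, and does not come from the report.

```python
def percent_increase(old: float, new: float) -> float:
    """Return the percentage change going from `old` to `new`."""
    if old == 0:
        raise ValueError("percentage increase is undefined for a zero baseline")
    return (new - old) / old * 100


# Country counts quoted from the report: 28 two years ago, 70 now.
print(percent_increase(28, 70))  # 150.0
```

Put another way, 70 is two and a half times 28, which is why "more than double" and "an increase of 150%" describe the same change.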

The research suggests that the spreading of fake news and toxic narratives has become the dysfunctional new ‘normal’ for political actors across the globe, thanks to social media’s global reach.

“Although propaganda has always been a part of political discourse, the deep and wide-ranging scope of these campaigns raise critical public interest concerns,” the report warns.

The researchers go on to dub the global uptake of computational propaganda tools and techniques a “critical threat” to democracies.

“The use of computational propaganda to shape public attitudes via social media has become mainstream, extending far beyond the actions of a few bad actors,” they add. “In an information environment characterized by high volumes of information and limited levels of user attention and trust, the tools and techniques of computational propaganda are becoming a common – and arguably essential – part of digital campaigning and public diplomacy.”

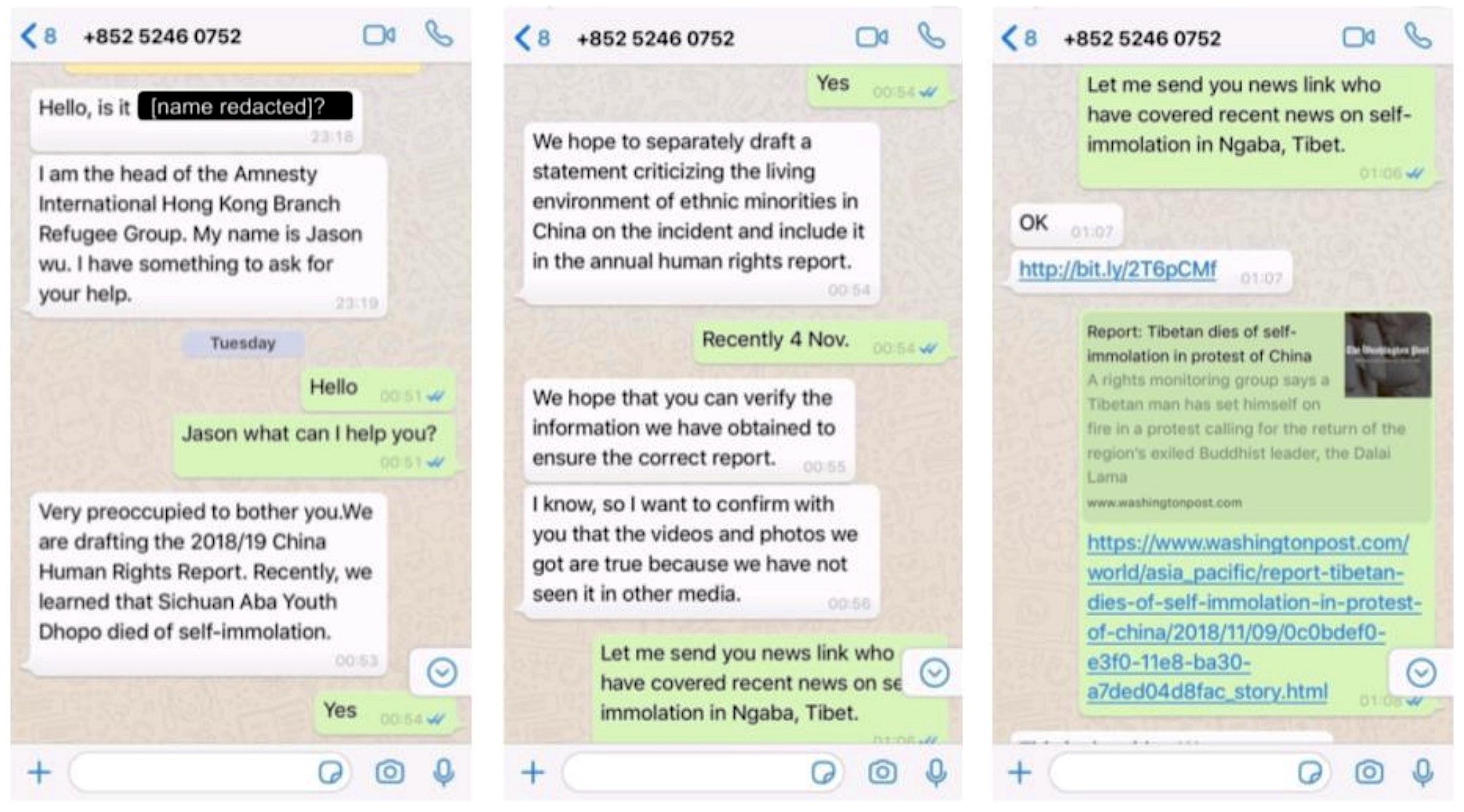

Techniques the researchers found being deployed by governments and political parties to spread political propaganda include the use of bots to amplify hate speech or other forms of manipulated content; the illegal harvesting of data or micro-targeting; and the use of armies of ‘trolls’ to bully or harass political dissidents or journalists online.

The researchers looked at computational propaganda activity in 70 countries around the world — including the US, the UK, Germany, China, Russia, India, Pakistan, Kenya, Rwanda, South Africa, Argentina, Brazil and Australia (see the end of this article for the full list) — finding organized social media manipulation in all of them.

So next time Facebook puts out another press release detailing a bit of “coordinated inauthentic behavior” it claims to have found and removed from its platform, it’s important to put it in the context of the bigger picture. And the picture painted by this report suggests that such small-scale, selective disclosures of propaganda-quashing successes amount to misleading Facebook PR when set against the sheer scale of the problem.

The problem is massive, global and largely taking place through Facebook’s funnel, per the report.

Facebook remains the platform of choice for social media manipulation, with researchers finding evidence of formally organised political disinformation campaigns on its platform in 56 countries.

We reached out to Facebook for a response to the report and the company sent us a laundry list of steps it says it’s been taking to combat election interference and coordinated inauthentic activity — including in areas such as voter suppression, political ad transparency and industry-civil society partnerships.

But it did not offer any explanation of why all this apparent effort (its summary of what it’s been doing alone exceeds 1,600 words) has so spectacularly failed to stem the rising tide of political fakes being amplified via Facebook.

Instead it sent us this statement: “Helping show people accurate information and protecting against harm is a major priority for us. We’ve developed smarter tools, greater transparency, and stronger partnerships to better identify emerging threats, stop bad actors, and reduce the spread of misinformation on Facebook, Instagram and WhatsApp. We also know that this work is never finished and we can’t do this alone. That’s why we are working with policymakers, academics, and outside experts to make sure we continue to improve.”

We followed up to ask why all its efforts have so far failed to reduce fake activity on its platform and will update this article with any response.

Returning to the report, the researchers say China has entered the global disinformation fray in a big way — using social media platforms to target international audiences with disinformation, something the country has long directed at its domestic population of course.

The report describes China as “a major player in the global disinformation order”.

It also warns that the use of computational propaganda techniques combined with tech-enabled surveillance is providing authoritarian regimes around the world with the means to extend their control of citizens’ lives.

“The co-option of social media technologies provides authoritarian regimes with a powerful tool to shape public discussions and spread propaganda online, while simultaneously surveilling, censoring, and restricting digital public spaces,” the researchers write.

Other key findings from the report include that both democracies and authoritarian states are making (il)liberal use of computational propaganda tools and techniques.

Per the report:

- In 45 democracies, politicians and political parties “have used computational propaganda tools by amassing fake followers or spreading manipulated media to garner voter support”

- In 26 authoritarian states, government entities “have used computational propaganda as a tool of information control to suppress public opinion and press freedom, discredit criticism and oppositional voices, and drown out political dissent”

The report also identifies seven “sophisticated state actors” — China, India, Iran, Pakistan, Russia, Saudi Arabia and Venezuela — using what it calls “cyber troops” (aka dedicated online workers whose job is to use computational propaganda tools to manipulate public opinion) to run foreign influence campaigns.

Foreign influence operations, which include election interference, were found by the researchers to be taking place primarily on Facebook and Twitter.

We’ve reached out to Twitter for comment and will update this article with any response.

A year ago, when Twitter CEO Jack Dorsey was questioned by the Senate Intelligence Committee, he said the company was considering labelling bot accounts on its platform, agreeing that “more context” around tweets and accounts would be a good thing, while also arguing that identifying automation that’s scripted to look like a human is difficult.

Instead of adding a ‘bot or not’ label, Twitter has just launched a ‘hide replies’ feature, which lets users screen individual replies to their tweets (viewers must take an affirmative action to unhide and view any hidden replies). Twitter says this is intended to increase civility on the platform. But there have been concerns the feature could be abused to help propaganda spreaders, i.e. by allowing them to suppress replies that debunk their junk.

The Oxford Internet Institute researchers found bot accounts are very widely used to spread political propaganda (80% of countries studied used them). However the use of human agents was even more prevalent (87% of countries).

Bot-human blended accounts, which combine automation with human curation in an attempt to fly under the BS detector radar, were much rarer: identified in 11% of countries.

Hacked or stolen accounts, meanwhile, were found in use in just 7% of countries.

In another key finding from the report, the researchers identified 25 countries working with private companies or strategic communications firms offering computational propaganda as a service, noting that: “In some cases, like in Azerbaijan, Israel, Russia, Tajikistan, Uzbekistan, student or youth groups are hired by government agencies to use computational propaganda.”

Commenting on the report in a statement, professor Philip Howard, director of the Oxford Internet Institute, said: “The manipulation of public opinion over social media remains a critical threat to democracy, as computational propaganda becomes a pervasive part of everyday life. Government agencies and political parties around the world are using social media to spread disinformation and other forms of manipulated media. Although propaganda has always been a part of politics, the wide-ranging scope of these campaigns raises critical concerns for modern democracy.”

Samantha Bradshaw, researcher and lead author of the report, added: “The affordances of social networking technologies — algorithms, automation and big data — vastly changes the scale, scope, and precision of how information is transmitted in the digital age. Although social media was once heralded as a force for freedom and democracy, it has increasingly come under scrutiny for its role in amplifying disinformation, inciting violence, and lowering trust in the media and democratic institutions.”

Other findings from the report include that:

- 52 countries used “disinformation and media manipulation” to mislead users

- 47 countries used state-sponsored trolls to attack political opponents or activists, up from 27 last year

That backs up the widespread sense in some Western democracies that political discourse has been getting less truthful and more toxic for a number of years, given that tactics which amplify disinformation and target harassment at political opponents are indeed thriving on social media, per the report.

Despite finding an alarming rise in the number of government actors across the globe who are misappropriating powerful social media platforms and other tech tools to influence public attitudes and try to disrupt elections, Howard said the researchers remain optimistic that social media can be “a force for good” — by “creating a space for public deliberation and democracy to flourish”.

“A strong democracy requires access to high quality information and an ability for citizens to come together to debate, discuss, deliberate, empathise and make concessions,” he said.

Clearly, though, there’s a stark risk of high quality information being drowned out by the tsunami of BS that’s being paid for by self-interested political actors. It’s also, of course, much cheaper to produce BS political propaganda than to carry out investigative journalism.

Democracy needs a free press to function, but the press itself is also under assault from online ad giants that have disrupted its business model by being able to spread and monetize any old junk content. If you want a perfect storm hammering democracy, this most certainly is it.

It’s therefore imperative for democratic states to arm their citizens with education and awareness to enable them to think critically about the junk being pushed at them online. But as we’ve said before, there are no shortcuts to universal education.

Meanwhile regulation of social media platforms and/or the use of powerful computational tools and techniques for political purposes simply isn’t there. So there’s no hard check on voter manipulation.

Lawmakers have failed to keep up with the tech-fuelled times. Perhaps unsurprisingly, given how many political parties have their own hands in the data and ad-targeting cookie jar. (Concerned citizens are advised to practise good digital privacy hygiene to fight back against undemocratic attempts to hack public opinion. More privacy tips here.)

The researchers say their 2019 report, which is based on research carried out between 2018 and 2019, draws upon a four-step methodology to identify evidence of globally organised manipulation campaigns: a systematic content analysis of news articles on cyber troop activity; a secondary literature review of public archives and scientific reports; the generation of country-specific case studies; and expert consultations.

Here’s the full list of countries studied:

Angola, Argentina, Armenia, Australia, Austria, Azerbaijan, Bahrain, Bosnia & Herzegovina, Brazil, Cambodia, China, Colombia, Croatia, Cuba, Czech Republic, Ecuador, Egypt, Eritrea, Ethiopia, Georgia, Germany, Greece, Honduras, Guatemala, Hungary, India, Indonesia, Iran, Israel, Italy, Kazakhstan, Kenya, Kyrgyzstan, Macedonia, Malaysia, Malta, Mexico, Moldova, Myanmar, Netherlands, Nigeria, North Korea, Pakistan, Philippines, Poland, Qatar, Russia, Rwanda, Saudi Arabia, Serbia, South Africa, South Korea, Spain, Sri Lanka, Sweden, Syria, Taiwan, Tajikistan, Thailand, Tunisia, Turkey, Ukraine, United Arab Emirates, United Kingdom, United States, Uzbekistan, Venezuela, Vietnam, and Zimbabwe.