CloudZero, a cloud cost management startup, today announced that it raised $32 million in a Series B funding round led by Innovius Capital and Threshold Ventures.

The tranche, which brings the company’s total raised to over $52 million, will be put toward expanding CloudZero’s platform and scaling its go-to-market efforts, with an emphasis on savings insights and self-service analytics, CEO Erik Peterson says.

“The pandemic accelerated digital transformation for many companies, which increased software development and spend in the public cloud, while the broader slowdown in tech increased the focus on profitability and unit economics and drove a shift away from innovation at all costs,” Peterson told TechCrunch in an email interview. “CloudZero has benefited from both of these trends and with this recent fundraising is positioned to accelerate our growth as demand for our platform is at an all-time high.”

Peterson co-founded CloudZero with Matt Manger nearly ten years ago after identifying what he describes as an “intrinsic” connection between efficient architecture and cost-effective cloud solutions. The way Peterson sees it, every cloud engineering decision is essentially a buying one — yet the cost conversation often bypasses the engineers who drive these decisions.

To address this, CloudZero provides engineering teams with cost data in a unified dashboard, combining it with various types of business- and system-level telemetry. All of this is layered on top of cloud billing data, which lets CloudZero answer questions such as how much an individual product or feature costs.

CloudZero’s tech relies on hourly cloud spend data to alert engineers to “abnormal” spend events (as identified by an AI algorithm). The platform ingests customers’ cloud, platform-as-a-service and software-as-a-service spend and normalizes it into a common data model.

“Cloud adoption is now widespread across sectors, but digital businesses struggle with lack of visibility, control, and optimization of their cloud costs — which impacts their ability to manage cloud spending,” Peterson said. “With up-to-the-minute monitoring, our platform identifies cost drivers and anomalies instantly, enabling engineers to proactively address cost spikes or inefficiencies and avoid cloud waste.”

Whether CloudZero’s platform is actually “up-to-the-minute” and “instant” is up for debate. But there’s undeniably demand for cost-cutting cloud solutions.

According to a recent IDC survey, 55% of cloud buyers believe inflationary pressures over the past few months have hurt their return on investment. Those organizations also report that the cloud now makes up, on average, 32% of their IT budgets, and a quarter of respondents say they’re looking internally for places to cut cloud costs.

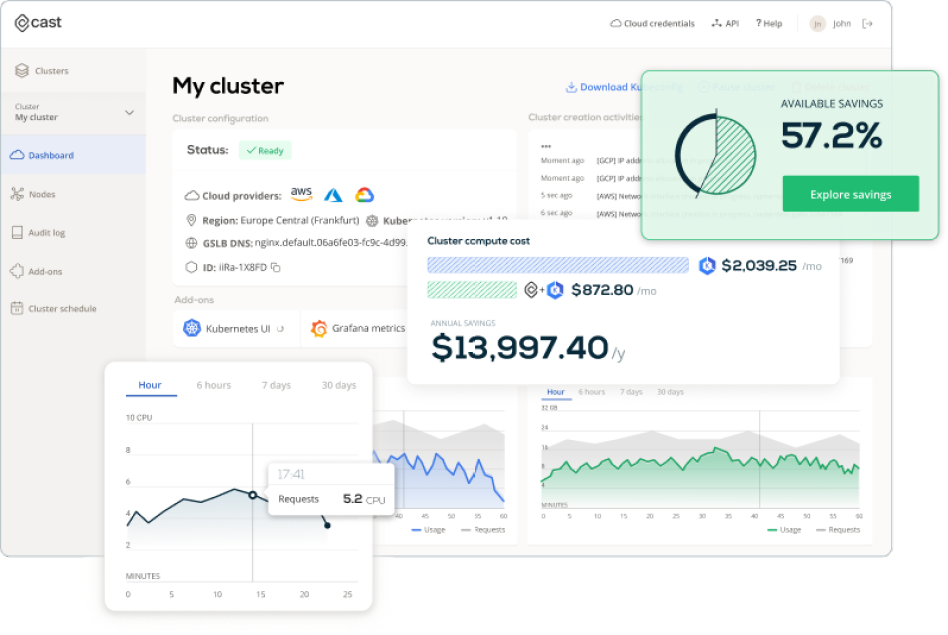

CloudZero doesn’t stand alone in the cloud cost optimization market, also known as FinOps — which isn’t exactly surprising given the huge market opportunity. There’s ProsperOps, which closed a $72 million investment in February for its software that automatically optimizes cloud resources to deliver savings. Other players include Xonai, Vantage, Cast AI and Zesty, as well as larger vendors like VMware and Apptio.

For its part, CloudZero has over 100 customers. Peterson wouldn’t disclose revenue, but said that it has grown 10x since 2021.

“Cloud cost management startup CloudZero lands $32M investment” by Kyle Wiggers was originally published on TechCrunch.